Tyson Condie

Tyson Condie's posts

Open Source

April 26, 2017/13 min read

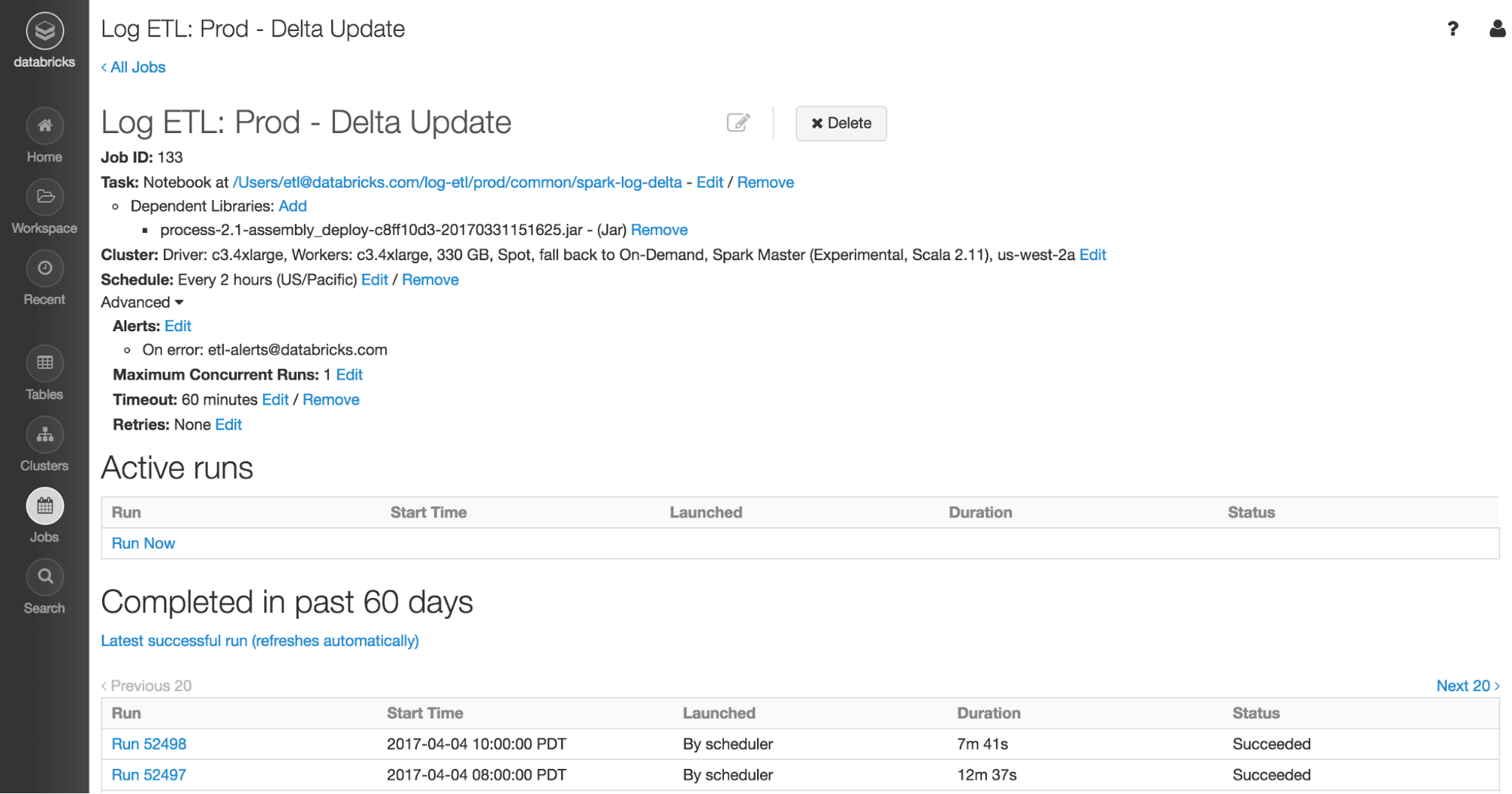

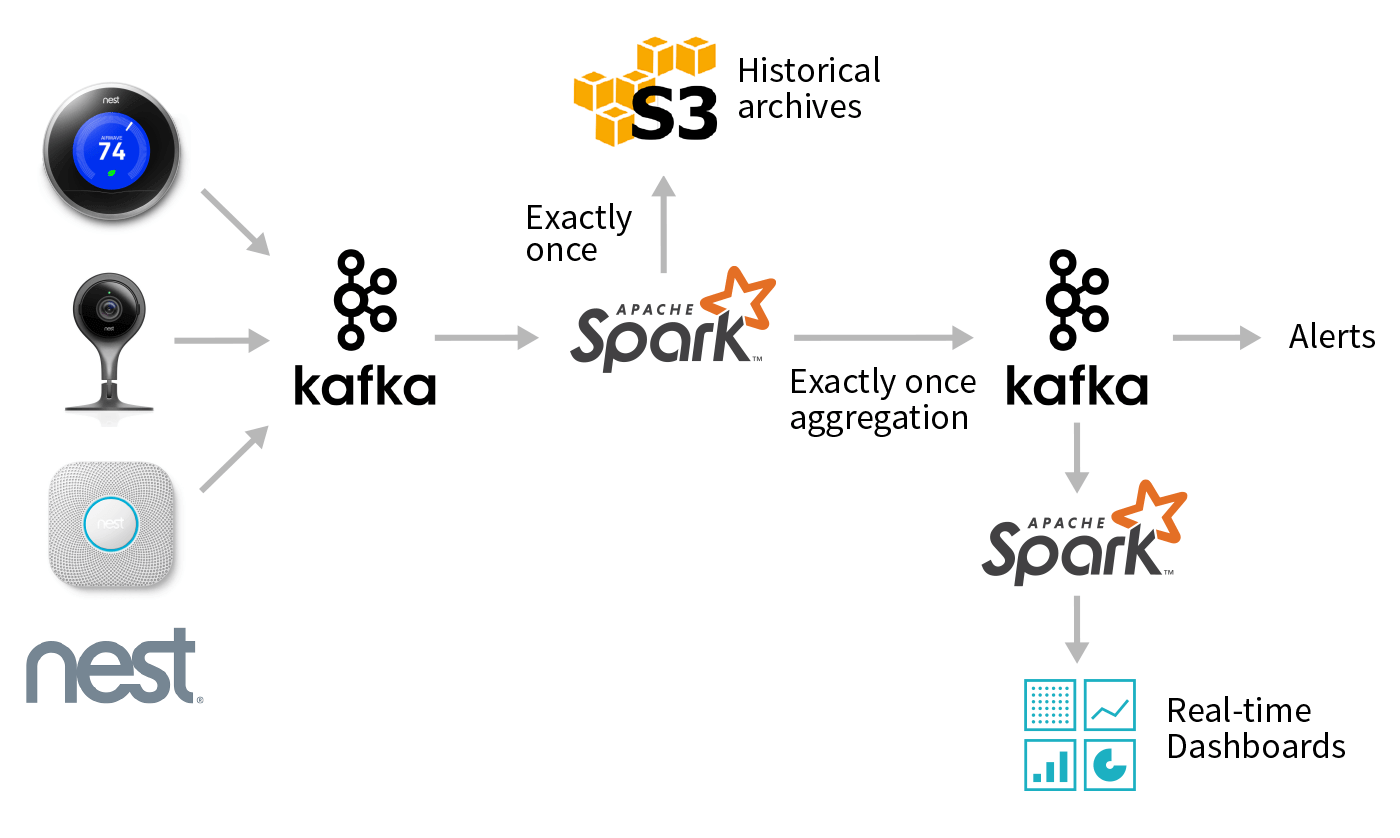

Processing Data in Apache Kafka with Structured Streaming in Apache Spark 2.2

Open Source

February 23, 2017/12 min read

Working with Complex Data Formats with Structured Streaming in Apache Spark 2.1

Open Source

January 19, 2017/10 min read