DeepSeek R1 on Databricks

Published: January 31, 2025

by Dan Pechi, Jai Behl, Michael Shtelma, Austin Zaccor and Hanlin Tang

Summary

- Deploy DeepSeek-R1 with Mosaic AI Model Serving.

- Boost efficiency with distilled models for cost-effective reasoning.

- Govern DeepSeek-R1 seamlessly alongside OpenAI, Amazon Bedrock, and other models.

DeepSeek-R1 is a state-of-the-art open model that, for the first time, brings ‘reasoning’ capability to the open source community. The release also distills that capability into Llama-70B and Llama-8B models, which combine speed, cost-effectiveness, and ‘reasoning’ capability. We are excited to share how you can easily download the distilled DeepSeek-R1-Distill-Llama models and run them in Mosaic AI Model Serving, benefiting from its security, best-in-class performance optimizations, and integration with the Databricks Data Intelligence Platform. With these open ‘reasoning’ models, you can now build agent systems that reason more intelligently over your data.

Deploying DeepSeek-R1-Distill-Llama Models on Databricks

To download, register, and deploy the DeepSeek-R1-Distill-Llama models on Databricks, use the notebook included here, or follow the steps below:

1. Spin up the necessary compute¹, then load the model and its tokenizer.

This step can take several minutes, since it downloads 32GB worth of model weights in the case of Llama 8B.
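A minimal sketch of this step, assuming the weights come from the Hugging Face Hub repository `deepseek-ai/DeepSeek-R1-Distill-Llama-8B` through the `transformers` library; the notebook's exact code is not reproduced here. The helper `estimate_weight_gb` is illustrative and shows the arithmetic behind the figure above: at 4 bytes per parameter (float32), 8 billion parameters come to about 32 GB, while a 2-byte format such as bfloat16 halves that.

```python
# Bytes needed to store one parameter in common floating-point formats.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2}


def estimate_weight_gb(num_params: float, dtype: str = "float32") -> float:
    """Raw weight size in GB (1 GB = 1e9 bytes); ignores activations and runtime overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def load_model_and_tokenizer(model_id: str = "deepseek-ai/DeepSeek-R1-Distill-Llama-8B"):
    """Download (first call only, then cached locally) and load the model and tokenizer."""
    # Imported lazily so the sizing helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    return model, tokenizer
```

For the 70B model the same arithmetic gives roughly 280 GB in float32 (140 GB in bfloat16), so size the cluster's memory accordingly.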

2. Next, register the model and tokenizer as a transformers model. The mlflow.transformers flavor makes registering models in Unity Catalog simple: just configure your model size (in this case, 8B) and the model name.
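A sketch of the registration step, assuming MLflow's `mlflow.transformers.log_model` with the `llm/v1/chat` task and the Unity Catalog registry (`databricks-uc`). The catalog, schema, and model names are hypothetical placeholders, and the `databricks_model_size_parameters` metadata key is an assumption standing in for the notebook's model-size setting; check the notebook for the exact keys it uses.

```python
def uc_model_name(catalog: str, schema: str, model: str) -> str:
    """Build a Unity Catalog three-level name: <catalog>.<schema>.<model>."""
    for part in (catalog, schema, model):
        if not part or "." in part:
            raise ValueError(f"invalid name component: {part!r}")
    return f"{catalog}.{schema}.{model}"


def register_model(model, tokenizer, registered_name: str, size: str = "8b"):
    """Log the model and tokenizer with MLflow and register them in Unity Catalog."""
    import mlflow  # imported lazily; available on Databricks ML Runtime

    # Register into Unity Catalog instead of the workspace model registry.
    mlflow.set_registry_uri("databricks-uc")
    with mlflow.start_run():
        return mlflow.transformers.log_model(
            transformers_model={"model": model, "tokenizer": tokenizer},
            artifact_path="model",
            task="llm/v1/chat",  # chat-style request/response signature
            registered_model_name=registered_name,
            # Hypothetical metadata key for the model size (assumption, see lead-in).
            metadata={"databricks_model_size_parameters": size},
        )
```

For example, `register_model(model, tokenizer, uc_model_name("main", "default", "deepseek_r1_distill_llama_8b"))` would register the model under a hypothetical `main.default` schema.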

¹ We used ML Runtime 16.0 with an r5d.16xlarge single-node cluster for the 8B model and an r5d.24xlarge for the 70B model. You don’t need GPUs per se to run these steps in the notebook, as long as the compute has sufficient memory.

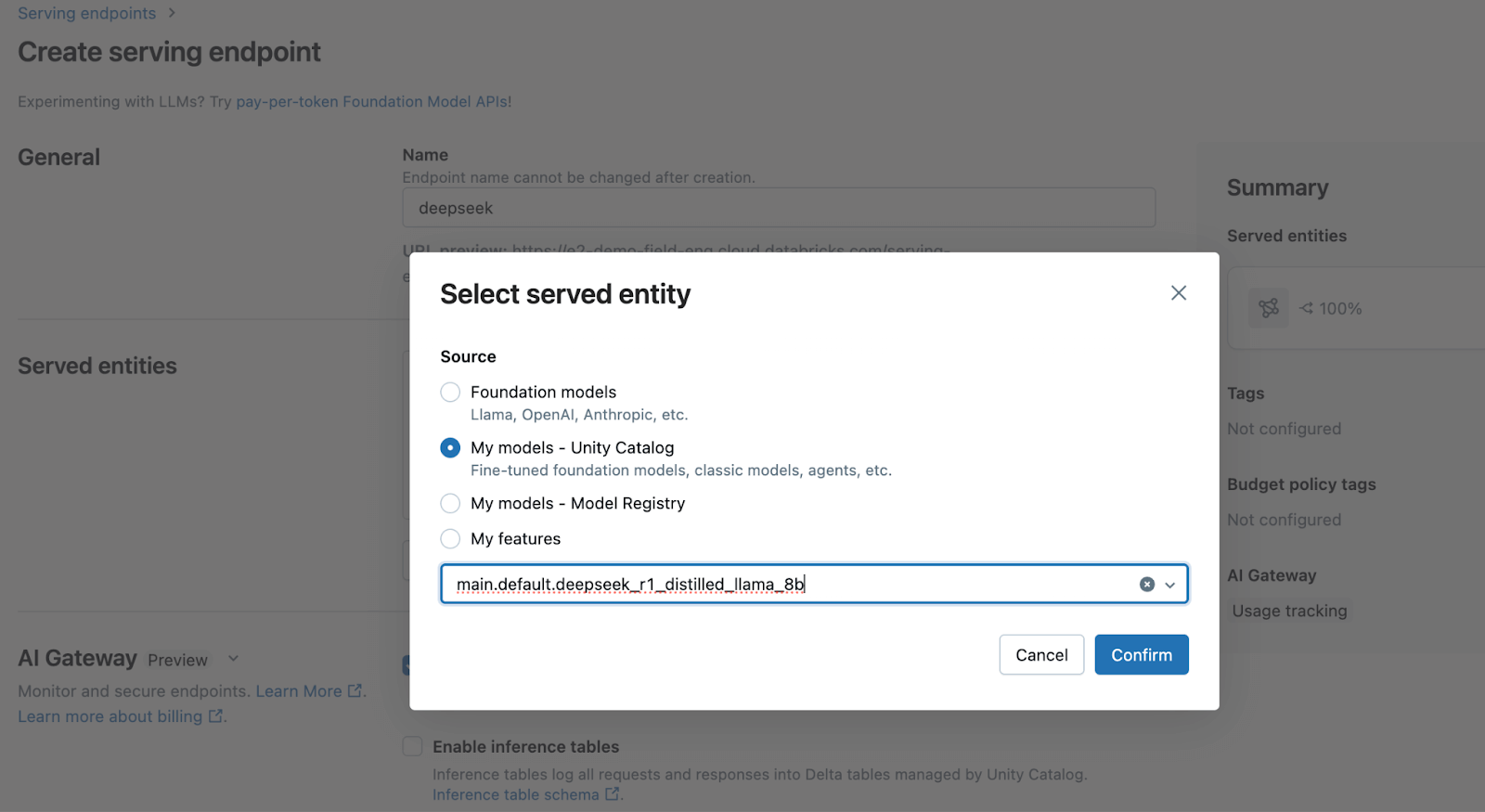

3. To serve this model using our highly optimized Model Serving engine, simply navigate to Serving and launch an endpoint with your registered model!

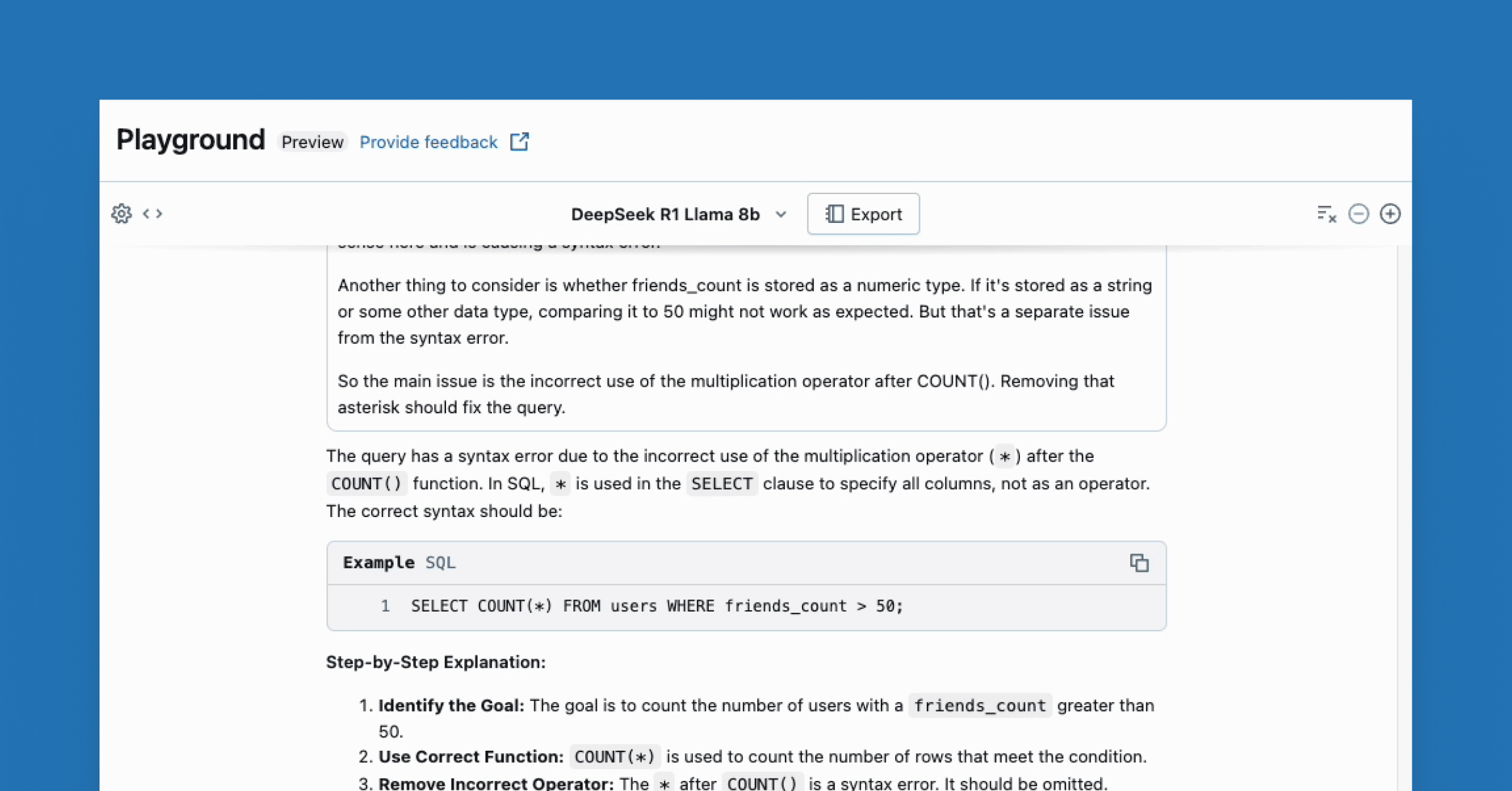
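Step 3 can also be scripted. The sketch below assumes the Databricks REST call `POST /api/2.0/serving-endpoints` with a provisioned-throughput `served_entities` configuration and a personal access token. The endpoint name, model name, version, and throughput values are placeholders; valid throughput values come in model-specific increments, so take them from the Serving UI. Only the standard library is used.

```python
import json
import urllib.request


def build_endpoint_request(endpoint_name: str, uc_model_name: str, version: str,
                           min_throughput: int, max_throughput: int) -> dict:
    """Request body for creating an endpoint that serves one registered UC model."""
    if not 0 <= min_throughput <= max_throughput:
        raise ValueError("need 0 <= min_throughput <= max_throughput")
    return {
        "name": endpoint_name,
        "config": {
            "served_entities": [
                {
                    "entity_name": uc_model_name,  # e.g. catalog.schema.model
                    "entity_version": version,     # registered model version
                    # Placeholder provisioned-throughput bounds (copy from the UI).
                    "min_provisioned_throughput": min_throughput,
                    "max_provisioned_throughput": max_throughput,
                }
            ]
        },
    }


def create_endpoint(host: str, token: str, body: dict) -> dict:
    """POST the body to the workspace and return the parsed JSON reply."""
    req = urllib.request.Request(
        f"{host.rstrip('/')}/api/2.0/serving-endpoints",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Creating the endpoint through the UI, as described above, remains the simplest path; the scripted form is mainly useful for repeatable deployments.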

Once the endpoint is ready, you can easily query the model via our API, or use the Playground to start prototyping your applications.
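A minimal sketch of querying the endpoint over REST, assuming the chat request format and the `/serving-endpoints/<name>/invocations` URL, with the reply in the OpenAI-style `choices[0].message.content` shape. The host, token, and endpoint name are placeholders. The defaults follow DeepSeek's published guidance for R1 models (temperature around 0.6, no system prompt), and `max_tokens` is set generously because reasoning traces consume output tokens.

```python
import json
import urllib.request


def build_chat_request(prompt: str, max_tokens: int = 4096, temperature: float = 0.6) -> dict:
    """Chat request body with all instructions in a single user turn."""
    # No system message: DeepSeek recommends putting instructions in the user prompt.
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def query_endpoint(host: str, token: str, endpoint_name: str, body: dict) -> str:
    """POST to the endpoint's invocations URL and return the first choice's text."""
    req = urllib.request.Request(
        f"{host.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    return reply["choices"][0]["message"]["content"]
```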

With Mosaic AI Model Serving, deploying this model is both simple and powerful, taking advantage of our best-in-class performance optimizations as well as integration with the Lakehouse for governance and security.

When to use reasoning models

One unique aspect of the DeepSeek-R1 series of models is their ability to perform extended chain-of-thought (CoT) reasoning, similar to the o1 models from OpenAI. You can see this in our Playground UI, where the collapsible “Thinking” section shows the CoT traces of the model’s reasoning. This can lead to higher-quality answers, particularly for math and coding, but at the cost of significantly more output tokens. We also recommend that users follow DeepSeek’s usage guidelines when interacting with the model.
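Outside the Playground, you may want to separate the CoT trace from the final answer yourself. DeepSeek-R1 models end their reasoning with a `</think>` tag; depending on the chat template, the opening `<think>` may be part of the prompt rather than the completion. The sketch below assumes the raw completion text still contains the closing tag and handles both cases; `split_reasoning` is a hypothetical helper, not a Databricks API.

```python
def split_reasoning(text: str) -> tuple[str, str]:
    """Split an R1-style completion into (chain_of_thought, final_answer)."""
    marker = "</think>"
    if marker not in text:
        # No reasoning delimiter: treat the whole completion as the answer.
        return "", text.strip()
    # Split on the last closing tag so everything after it is the answer.
    thought, _, answer = text.rpartition(marker)
    # Drop the opening tag if the model emitted it itself.
    thought = thought.strip().removeprefix("<think>")
    return thought.strip(), answer.strip()
```

Logging the two parts separately also makes it easy to see how much of each response is spent on reasoning.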

These are early innings in learning how to use reasoning models, and we are excited to hear what new data intelligence systems our customers build with this capability. We encourage you to experiment with your own use cases and let us know what you find. Look out for additional updates in the coming weeks as we dive deeper into R1, reasoning, and how to build data intelligence on Databricks.

Resources

- Learn more about Mosaic AI Model Serving

- Apply DeepSeek-R1-Distill-Llama models to large batches of data with Batch LLM inference

- Build Production-quality Agentic and RAG Apps with Agent Framework and Evaluation
