Announcing Public Preview of AI/BI Genie Conversation APIs

Get insights from your data using natural language within any collaboration tool

Summary

- AI/BI Genie lets you talk to your data to explore any question using natural language

- Integrate AI/BI Genie into Databricks Apps, Slack, Teams, SharePoint, custom-built applications, and more

- Put Genie Conversation APIs into practice with a step-by-step example

As part of our Week of AI agents initiative, we’re introducing new capabilities to help enterprises build and govern high-quality AI agents. To that end, we are excited to announce the Public Preview of the Genie Conversation APIs, available on AWS, Azure, and GCP. With this API suite, your users can now leverage AI/BI Genie to self-serve data insights using natural language from any surface, including Databricks Apps, Slack, Teams, SharePoint, custom-built applications, and more. Additionally, the Conversation APIs enable you to embed AI/BI Genie in any AI agent, with or without Agent Framework.

Using the Genie Conversation API suite, you can programmatically submit natural language prompts and receive data insights just as you would in the Genie user interface. The API is stateful, allowing Genie to retain context as you ask follow-up questions within a conversation thread.

In this blog, we review the key endpoints available in the Public Preview, explore Genie’s integration with Mosaic AI Agent Framework, and highlight an example of embedding Genie into a Microsoft Teams channel.

Genie Conversation APIs in Practice

Let's walk through a practical example to understand how the Genie Conversation APIs work. The first thing to note is that the Conversation APIs need to interact with a Genie space that has already been created. We recommend starting with our product documentation to set up your Genie space and then following these best practices to configure it optimally.

Imagine you’ve already created, configured, and shared a Genie space designed to answer questions about your marketing data. Now, you want your marketing team to use this space to ask questions and explore insights—but instead of accessing it through the Genie UI, you want them to do so from within an external application.

To begin, suppose you want your marketing team to ask a straightforward question: “Which customers did we reach out to via email yesterday?” To ask this question through the Genie Conversation APIs, send a POST request to the following endpoint:

/api/2.0/genie/spaces/{space_id}/start-conversation

This endpoint starts a new conversation thread, using your question as the initial prompt, just like in the Genie Space UI. Note that the request must include your host component, Genie Space ID, and an access token for authentication. You can find the space_id in the Genie Space URL, as shown below:

https://example.databricks.com/genie/rooms/12ab345cd6789000ef6a2fb844ba2d31
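As a minimal sketch, the space_id can be pulled out of such a URL by taking the last path segment. The helper name `space_id_from_url` is hypothetical, and the code assumes the ID is always the final segment, as in the example URL above.

```python
from urllib.parse import urlparse


def space_id_from_url(genie_url: str) -> str:
    """Return the last path segment of a Genie space URL (assumed to be the space_id)."""
    path = urlparse(genie_url).path.rstrip("/")
    return path.rsplit("/", 1)[-1]


print(space_id_from_url(
    "https://example.databricks.com/genie/rooms/12ab345cd6789000ef6a2fb844ba2d31"
))
```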

The following is an example of the required POST request:

    POST /api/2.0/genie/spaces/{space_id}/start-conversation
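In Python, the same request could be assembled roughly as follows. This is a sketch under stated assumptions: the function name is hypothetical, the host is reached over `https://`, the token is passed as a Bearer token, and the JSON body carries the prompt in a `content` field, mirroring the follow-up message request shown later in this post. The request is only built here, not sent.

```python
import json
from urllib.request import Request


def build_start_conversation_request(host: str, space_id: str, token: str, question: str) -> Request:
    """Build (but do not send) the POST request that starts a Genie conversation."""
    url = f"https://{host}/api/2.0/genie/spaces/{space_id}/start-conversation"
    # Assumption: the prompt goes in a "content" field, as in the follow-up request.
    body = json.dumps({"content": question}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return Request(url, data=body, headers=headers, method="POST")


req = build_start_conversation_request(
    "example.databricks.com",
    "12ab345cd6789000ef6a2fb844ba2d31",
    "<your_authentication_token>",
    "Which customers did we reach out to via email yesterday?",
)
print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would actually send it.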

If the request is submitted correctly, the API returns the created conversation and message in response to the POST request, as shown in the following example:

    {
      "conversation_id": "6a64adad2e664ee58de08488f986af3e",
      "conversation": {
        "created_timestamp": 1719769718,
        "conversation_id": "6a64adad2e664ee58de08488f986af3e",
        "last_updated_timestamp": 1719769718,
        "space_id": "3c409c00b54a44c79f79da06b82460e2",
        "title": "Which customers did we reach out to via email yesterday?",
        "user_id": 12345
      },
      "message_id": "e1ef34712a29169db030324fd0e1df5f",
      "message": {
        "attachments": null,
        "content": "Which customers did we reach out to via email yesterday?",
        "conversation_id": "6a64adad2e664ee58de08488f986af3e",
        "created_timestamp": 1719769718,
        "error": null,
        "message_id": "e1ef34712a29169db030324fd0e1df5f",
        "last_updated_timestamp": 1719769718,
        "query_result": null,
        "space_id": "3c409c00b54a44c79f79da06b82460e2",
        "status": "IN_PROGRESS",
        "user_id": 12345
      }
    }
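A small sketch of reading the two identifiers out of that response, assuming it has already been parsed into a Python dict. The helper name `extract_ids` is hypothetical, and the sample is a trimmed copy of the example response.

```python
import json
from typing import Tuple


def extract_ids(start_response: dict) -> Tuple[str, str]:
    """Return (conversation_id, message_id) from a start-conversation response."""
    return start_response["conversation_id"], start_response["message_id"]


# Trimmed copy of the example response.
sample = json.loads("""
{
  "conversation_id": "6a64adad2e664ee58de08488f986af3e",
  "message_id": "e1ef34712a29169db030324fd0e1df5f",
  "message": {"status": "IN_PROGRESS"}
}
""")

conversation_id, message_id = extract_ids(sample)
print(conversation_id, message_id, sample["message"]["status"])
```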

Using the conversation_id and message_id, you can now poll to check the message’s generation status and retrieve the generated SQL statement and its query description as follows:

    GET /api/2.0/genie/spaces/{space_id}/conversations/{conversation_id}/messages/{message_id}
    HOST= <WORKSPACE_INSTANCE_NAME>
    Authorization: Bearer <your_authentication_token>

The following is an example of the response:

    {
      "attachments": [
        {
          "query": {
            "description": "Query description in a human readable format",
            "last_updated_timestamp": 1719769718,
            "query": "SELECT * FROM customers WHERE date >= CURRENT_DATE() - INTERVAL 1 DAY",
            "title": "Query title",
            "statement_id": "9d8836fc1bdb4729a27fcc07614b52c4",
            "query_result_metadata": {
              "row_count": 10
            }
          },
          "attachment_id": "01efddddeb2510b6a4c125d77ce176be"
        }
      ],
      "content": "Which customers did we reach out to via email yesterday?",
      "conversation_id": "6a64adad2e664ee58de08488f986af3e",
      "created_timestamp": 1719769718,
      "error": null,
      "message_id": "e1ef34712a29169db030324fd0e1df5f",
      "last_updated_timestamp": 1719769718,
      "space_id": "3c409c00b54a44c79f79da06b82460e2",
      "status": "EXECUTING_QUERY",
      "user_id": 12345
    }

Once the message status field shows “COMPLETED”, the generated SQL statement has finished executing and the query results are ready to be retrieved. You can now retrieve them as follows:

    GET /api/2.0/genie/spaces/{space_id}/conversations/{conversation_id}/messages/{message_id}/attachments/{attachment_id}/query-result
    HOST= <WORKSPACE_INSTANCE_NAME>
    Authorization: Bearer <your_authentication_token>
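The attachment_id in this path comes from the message returned by the polling call. The sketch below, with hypothetical helper names, picks the first attachment that carries a `query` object and builds the corresponding GET request. It assumes `https://` and Bearer authentication, and it does not send anything.

```python
from typing import Optional
from urllib.request import Request


def first_query_attachment_id(message: dict) -> Optional[str]:
    """Return the attachment_id of the first attachment holding a generated query."""
    # "attachments" can be null while the message is still in progress.
    for attachment in message.get("attachments") or []:
        if "query" in attachment:
            return attachment.get("attachment_id")
    return None


def build_query_result_request(host: str, space_id: str, conversation_id: str,
                               message_id: str, attachment_id: str, token: str) -> Request:
    """Build (but do not send) the GET request for an attachment's query result."""
    url = (
        f"https://{host}/api/2.0/genie/spaces/{space_id}"
        f"/conversations/{conversation_id}/messages/{message_id}"
        f"/attachments/{attachment_id}/query-result"
    )
    return Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


message = {
    "attachments": [
        {"query": {"title": "Query title"},
         "attachment_id": "01efddddeb2510b6a4c125d77ce176be"}
    ],
    "status": "COMPLETED",
}
attachment_id = first_query_attachment_id(message)
print(attachment_id)
```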

Of course, you can also issue follow-up prompts for your conversation threads. For example, let's say the marketing team wants to ask the following question next: “Which of these customers opened and forwarded the email?”

To do this, send another POST request with the new prompt to the existing conversation thread as follows:

    POST /api/2.0/genie/spaces/{space_id}/conversations/{conversation_id}/messages
    HOST= <WORKSPACE_INSTANCE_NAME>
    Authorization: Bearer <your_authentication_token>
    {
      "content": "Which of these customers opened and forwarded the email?"
    }
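A corresponding Python sketch for the follow-up request. The function name is hypothetical, and the `https://` scheme and `Content-Type` header are assumptions; the request is built but not sent. The conversation_id ties the new prompt to the existing thread, which is how Genie keeps the earlier context.

```python
import json
from urllib.request import Request


def build_follow_up_request(host: str, space_id: str, conversation_id: str,
                            token: str, question: str) -> Request:
    """Build (but do not send) a POST that adds a message to an existing conversation."""
    url = (
        f"https://{host}/api/2.0/genie/spaces/{space_id}"
        f"/conversations/{conversation_id}/messages"
    )
    body = json.dumps({"content": question}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return Request(url, data=body, headers=headers, method="POST")


follow_up = build_follow_up_request(
    "example.databricks.com",
    "3c409c00b54a44c79f79da06b82460e2",
    "6a64adad2e664ee58de08488f986af3e",
    "<your_authentication_token>",
    "Which of these customers opened and forwarded the email?",
)
print(follow_up.get_method(), follow_up.full_url)
```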

Should you want to refresh data from previous prompts, the API also allows you to re-execute SQL queries that were previously generated. For more details on the API endpoints, please refer to the product documentation.

Conversation APIs Best Practices

To ensure the best performance, we recommend the following API best practices:

- Poll the API every 5-10 seconds until a conclusive message status is received, but limit polling to a maximum of 10 minutes for most typical queries

- If no response is received within 2 minutes, implement exponential backoff to improve reliability

- Ensure you create new conversation threads for each user session; reusing the same conversation thread for multiple sessions can negatively impact Genie's accuracy.
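One way to read the polling guidance above is sketched below: poll at a fixed 5-second interval, start doubling the interval once 2 minutes have passed without a conclusive status, and give up after 10 minutes in total. The function names, the 60-second cap on a single wait, and the `FAILED` and `CANCELLED` statuses are assumptions for illustration; only `COMPLETED` is named in this post. `fetch_status` stands in for whatever code performs the GET on the message endpoint.

```python
import time
from typing import Callable, Iterator

# Only COMPLETED is named in this post; the other terminal statuses are assumed.
TERMINAL_STATUSES = {"COMPLETED", "FAILED", "CANCELLED"}


def polling_delays(base: float = 5.0, backoff_after: float = 120.0,
                   max_total: float = 600.0, factor: float = 2.0,
                   max_delay: float = 60.0) -> Iterator[float]:
    """Yield wait times: fixed at first, exponential after backoff_after seconds."""
    elapsed = 0.0
    delay = base
    while elapsed < max_total:
        wait = min(delay, max_total - elapsed)  # never wait past the overall limit
        yield wait
        elapsed += wait
        if elapsed >= backoff_after:
            delay = min(delay * factor, max_delay)


def poll_message(fetch_status: Callable[[], str],
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Call fetch_status until it returns a terminal status or the time budget runs out."""
    status = fetch_status()
    for wait in polling_delays():
        if status in TERMINAL_STATUSES:
            return status
        sleep(wait)
        status = fetch_status()
    if status in TERMINAL_STATUSES:
        return status
    raise TimeoutError("Genie message did not reach a terminal status in time")


# Dry run with canned statuses and a fake sleep, so nothing actually waits.
canned = iter(["IN_PROGRESS", "EXECUTING_QUERY", "COMPLETED"])
waits = []
print(poll_message(lambda: next(canned), sleep=waits.append), waits)
```

Injecting `sleep` keeps the loop testable without real delays; in production the default `time.sleep` applies.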

Integrating Genie into Mosaic AI Agent Framework

The Conversation APIs also seamlessly integrate into your Mosaic AI Agent Framework with the databricks_langchain.genie wrapper.

Let’s say your marketing managers need to answer questions across three topics:

- Promotion event engagement (structured data stored in a Unity Catalog view)

- Email ads (structured data stored in a Unity Catalog table)

- User text reviews (unstructured PDFs stored in Unity Catalog volumes)

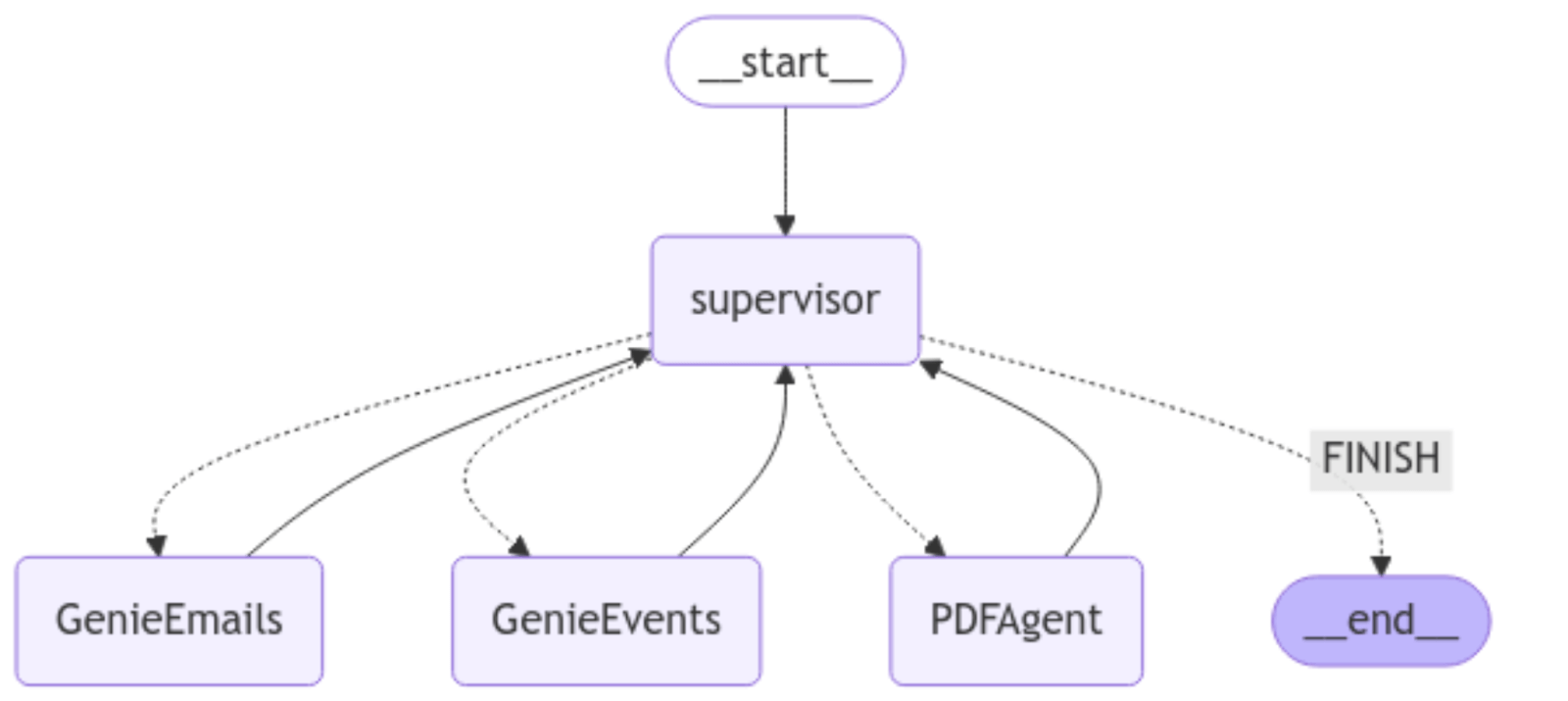

You can build a multi-agent framework to answer questions on both structured and unstructured data. For example, you can define a LangGraph agent framework with agents for these topics.

The agent framework graph would look like the following:

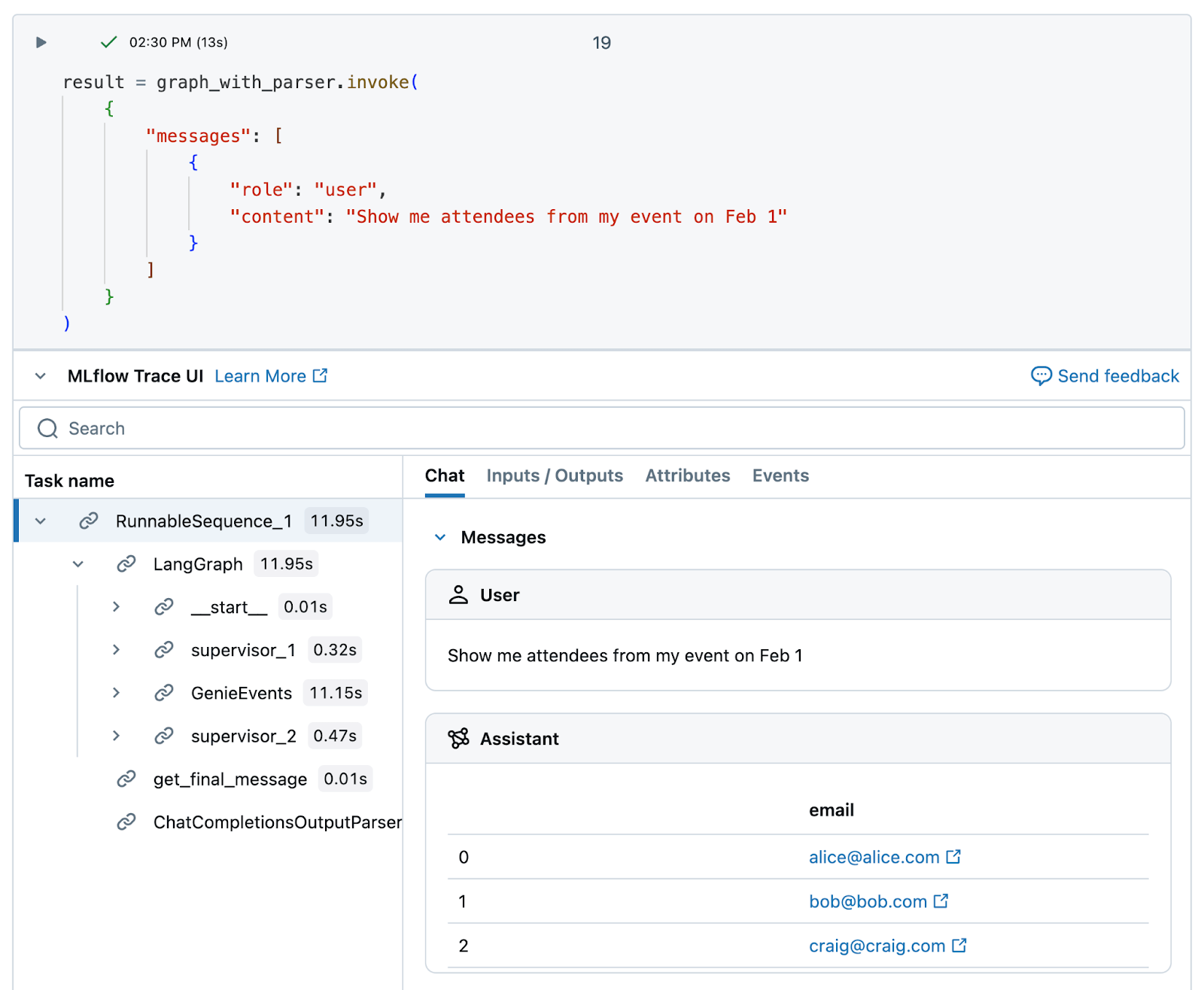

Your agent framework can now direct questions to their relevant agents. For example, if a marketing manager starts by asking “Show me attendees from my event on Feb 1”, the GenieEvents agent will be triggered. MLflow traces show the framework’s steps:

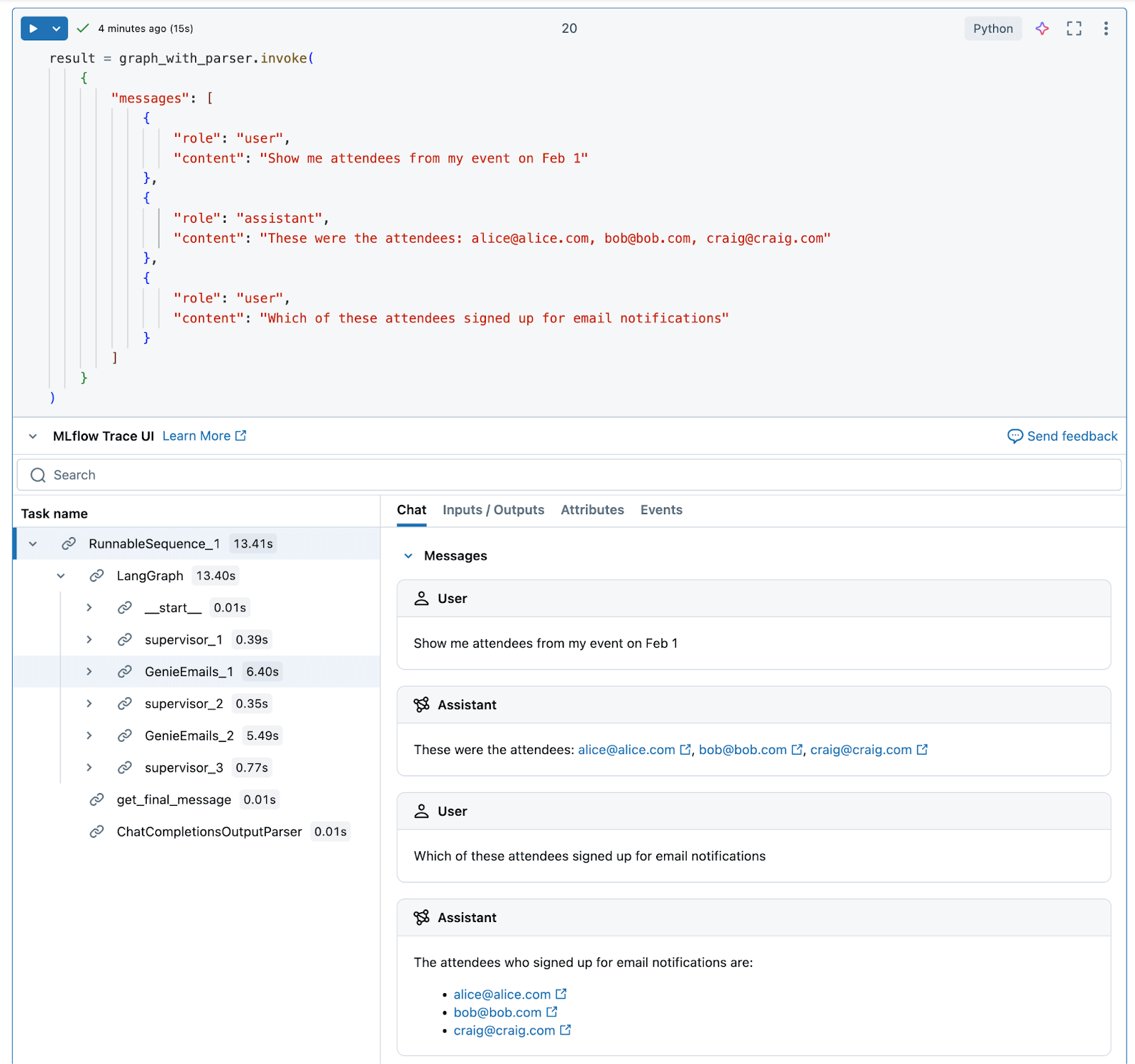

The agent framework also enables agents to share answers as context for each other. This allows users to get data answers that pull from multiple sources seamlessly. For example, the marketing manager may want to drill down and ask “Which of these attendees signed up for email notifications?” The framework will use the previous answer from GenieEvents as context for the GenieEmails agent.

With this approach, your business users can now answer data questions that span multiple topics/data types and build on each other. To learn more about using Genie in multi-agent systems, please refer to the product documentation.
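The routing and context-sharing behavior described above can be illustrated with a plain-Python stand-in. This is not the LangGraph or `databricks_langchain` code: the agents are stubs, the keyword router replaces what would typically be an LLM-driven supervisor, and `ReviewsRetriever` is a hypothetical name for the unstructured-data agent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# An agent takes (question, context) and returns an answer.
Agent = Callable[[str, str], str]


def keyword_router(question: str) -> str:
    """Toy routing rule; a real supervisor would typically ask an LLM to choose."""
    text = question.lower()
    if "email" in text:
        return "GenieEmails"
    if "review" in text:
        return "ReviewsRetriever"  # hypothetical agent name
    return "GenieEvents"


@dataclass
class Supervisor:
    agents: Dict[str, Agent]
    history: List[Tuple[str, str]] = field(default_factory=list)

    def ask(self, question: str) -> str:
        name = keyword_router(question)
        # Pass the previous answer along so the next agent can build on it.
        context = self.history[-1][1] if self.history else ""
        answer = self.agents[name](question, context)
        self.history.append((name, answer))
        return answer


def stub(name: str) -> Agent:
    """Stand-in for a real agent; it just echoes what it was given."""
    return lambda question, context: f"{name}({question} | context: {context})"


supervisor = Supervisor(
    {n: stub(n) for n in ("GenieEvents", "GenieEmails", "ReviewsRetriever")}
)
supervisor.ask("Show me attendees from my event on Feb 1")
supervisor.ask("Which of these attendees signed up for email notifications")
print([name for name, _ in supervisor.history])
```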

Example: How to Integrate Genie with Microsoft Teams

During the Conversation API's Private Preview period, Microsoft Teams was one of the most popular productivity tools that customers integrated with Genie. This integration enables users to ask questions and get insights instantly, without leaving the Teams UI.

To do this, you will need to take the following steps:

- Create a new Azure Bot – including resource groups and app service plans.

- Add the necessary environment variables and dependencies to your bot.

- Implement the conversation logic using Conversation APIs (starting conversation, retrieving results, asking follow-up questions, etc.).

- Import the Genie Azure Bot into a Teams Channel.

For detailed examples of how to configure the Conversation APIs for Microsoft Teams, please refer to the following articles:

- Microsoft Teams Meets Databricks Genie API: A Complete Setup Guide

- Microsoft Teams <-> Databricks Genie API - End to End Integration

The example below highlights a real-world application from one of our customers who used the Conversation APIs during the Private Preview period. Casas Bahia, a leading retailer in Brazil, serves millions of customers both online and through its extensive network of physical stores. By integrating the Genie Conversation APIs, Casas Bahia empowered users across the organization—including C-level executives—to interact with Genie directly within their Microsoft Teams environment. To learn more about their use case, read the Casas Bahia customer story.

“Having Genie integrated with Teams has been a huge step forward for data democratization. It makes data insights accessible to everyone, no matter their technical background.” — Cezar Steinz, Data Operations Manager, Grupo Casas Bahia

Getting Started with Conversation APIs

With the Genie Conversation APIs now in Public Preview, you can empower business users to talk to their data from any surface. To get started, please refer to the product documentation.

We are excited to see how you will use the Genie Conversation APIs and encourage you to start creating Genie spaces right away. There’s a ton of content available to get you going: you can visit the AI/BI and Genie web pages, check out our extensive library of product demos, and be sure to read through the full AI/BI Genie documentation.

The Databricks team is always looking to improve the AI/BI Genie experience, and would love to hear your feedback!
