How Habu Integrates With Databricks to Protect Sensitive Data

Habu’s data clean room technology works seamlessly with Databricks infrastructure to enable privacy-preserving access to data across teams and partners.

We recently announced our partnership with Databricks to bring multi-cloud data clean room collaboration capabilities to every Lakehouse. Our integration combines the best of Databricks' Lakehouse technology with Habu's clean room orchestration platform. Together they enable collaboration across clouds and data platforms, and they make the outputs of collaborative data science tasks available to business stakeholders. In this blog post, we'll outline how Habu and Databricks achieve this by answering the following questions:

- What are data clean rooms?

- What is Databricks' existing data clean room functionality?

- How do Habu & Databricks work together?

Let's get started!

What are Data Clean Rooms?

Data clean rooms are closed environments that allow companies to safely share data and models without compromising security or consumer privacy, and without exposing the IP of underlying ML models. Many clean rooms, including those provisioned by Habu, provide a low- or no-code software layer on top of secure data infrastructure, which greatly expands both access to data and the range of possible partner collaborations. Clean rooms also often incorporate best-practice governance controls for data access and auditing, as well as privacy-enhancing technologies that preserve individual consumer privacy while data science tasks run.

Data clean rooms have seen widespread adoption in industries such as retail, media, healthcare, and financial services as regulatory pressures and privacy concerns have increased over the last few years. As the need for access to quality, consented data increases in additional fields such as ML engineering and AI-driven research, clean room adoption will become ever more important in enabling privacy-preserving data partnerships across all stages of the data lifecycle.

Databricks Moves Towards Clean Rooms

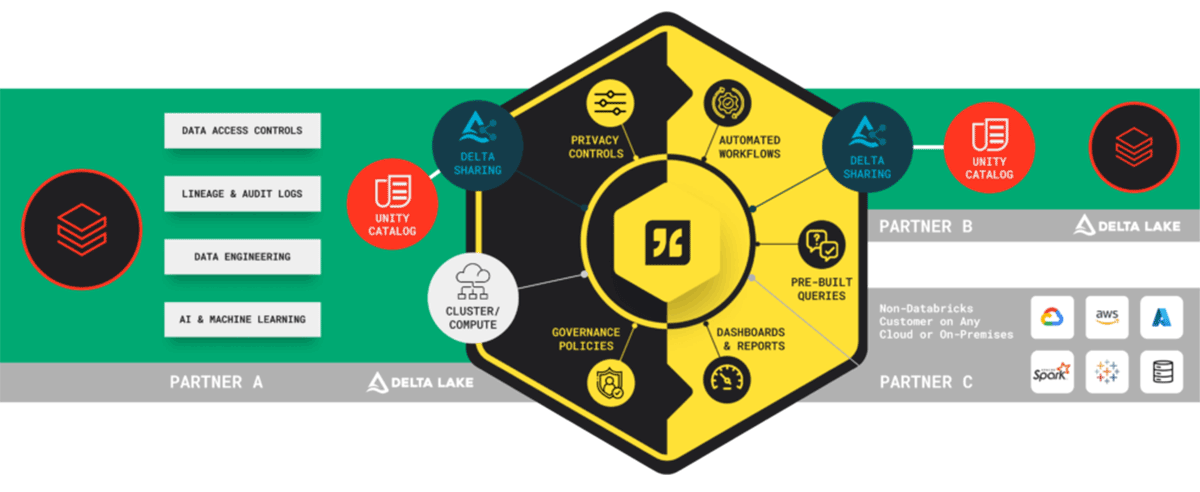

In recognition of this growing need, Databricks debuted its Delta Sharing protocol last year to provision views of data to other parties without replicating or distributing the data, using the tools Databricks customers already know. Once data is provisioned, partners can run arbitrary workloads in any Databricks-supported language, while the data owner keeps full governance control over the data through Unity Catalog.
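To make the governance model concrete, here is a minimal in-memory Python sketch of the idea behind sharing without replication: the provider registers a read-only view in a share, grants it to a recipient, and can revoke access at any time, while every read reflects the provider's current data. The `Share` class and its methods are hypothetical illustrations, not the Delta Sharing or Unity Catalog API; in Databricks itself, shares and recipients are managed in Unity Catalog (for example, with SQL statements such as `CREATE SHARE` and `GRANT SELECT ON SHARE`).

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Callable, Dict, List, Mapping, Set

Row = Dict[str, object]


@dataclass
class Share:
    """Hypothetical model of a share: named, read-only views over provider-owned data."""
    name: str
    views: Dict[str, Callable[[], List[Row]]] = field(default_factory=dict)
    recipients: Set[str] = field(default_factory=set)

    def add_view(self, view_name: str, producer: Callable[[], List[Row]]) -> None:
        self.views[view_name] = producer

    def grant(self, recipient: str) -> None:
        self.recipients.add(recipient)

    def revoke(self, recipient: str) -> None:
        self.recipients.discard(recipient)

    def read(self, recipient: str, view_name: str) -> List[Mapping[str, object]]:
        # Access is checked on every read, so a revocation takes effect immediately.
        if recipient not in self.recipients:
            raise PermissionError(f"{recipient} has no access to share {self.name}")
        # The share stores no copy of the data: rows are computed from the
        # provider's current table at read time and returned as read-only mappings.
        return [MappingProxyType(dict(row)) for row in self.views[view_name]()]


# Provider-owned table, including a sensitive column that is never shared.
customers = [
    {"id": 1, "email": "a@example.com", "region": "EU"},
    {"id": 2, "email": "b@example.com", "region": "US"},
]

share = Share("retail_share")
# The view exposes only the non-sensitive columns.
share.add_view(
    "customers_by_region",
    lambda: [{"id": c["id"], "region": c["region"]} for c in customers],
)
share.grant("partner_a")
rows = share.read("partner_a", "customers_by_region")

# A change on the provider side is visible on the next read; nothing was copied.
customers.append({"id": 3, "email": "c@example.com", "region": "EU"})
rows_after_update = share.read("partner_a", "customers_by_region")

share.revoke("partner_a")
```

Because the view is a function over the provider's table rather than a snapshot, the recipient sees updates without any data being re-sent, and the provider stays the single point of control for access.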

Delta Sharing was the first step toward secure data sharing within Databricks. By combining native Databricks functionality with Habu's state-of-the-art data clean room technology, Databricks customers can now share access to data without revealing its contents. With Habu's low- or no-code approach to clean room configuration, dashboards for analytics results, and integrations with activation partners, customers can expand both their set of clean room use cases and their partnership potential.

Habu + Databricks: How it Works

Habu's integration with Databricks removes the need for a user to deeply understand Databricks or Habu functionality in order to get to the desired data collaboration business outcomes. We've leveraged existing Databricks security primitives along with Habu's own intuitive clean room orchestration software to make it easy to collaborate with any data partner, regardless of their underlying architecture. Here's how it works:

- Agent Installation: Your Databricks administrator installs a Habu agent, which orchestrates all of your combined Habu and Databricks clean room configuration activity. The agent listens for commands from Habu and runs the designated tasks whenever you or a partner take an action within the Habu UI to provision data to a clean room.

- Clean Room Configuration: Within the Habu UI, your team configures data clean rooms in which you dictate:

  - Access: Which partner users have access to the clean room.

  - Data: The datasets available to those partners.

  - Questions: The queries or models the partner(s) can run, and against which data elements.

  - Output Controls: The privacy controls on the outputs of the provisioned questions, and the use cases the outputs may be used for (e.g., analytics, marketing targeting).

  When you configure these elements, the Habu agents trigger tasks within each clean room collaborator's workspace. These tasks interact with Databricks primitives to set up the clean room, mirror all access, data, and question configurations to your Databricks instance, and keep them compatible with your partners' data infrastructure.

- Question Execution: Within a clean room, all parties can explicitly review each analytical use case or question and opt their data, models, or code into it. Once approved, questions can be run on demand or on a schedule. Questions can be authored in SQL or Python/PySpark directly in Habu, or by connecting notebooks.
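The configuration elements and the opt-in step can be modeled as a small set of checks. The following Python sketch is a hypothetical illustration; the `CleanRoom` and `Question` classes and their fields are not Habu's actual data model or API. Under these assumptions, a question is runnable only by a clean room member, only after every party whose dataset it reads has approved it, and only for a use case permitted by its output controls.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Set


@dataclass
class Question:
    """Hypothetical question definition inside a clean room."""
    name: str
    datasets: FrozenSet[str]      # Questions: which data elements the question reads
    allowed_uses: FrozenSet[str]  # Output controls: permitted uses of the results
    approvals: Set[str] = field(default_factory=set)


@dataclass
class CleanRoom:
    """Hypothetical clean room configuration (illustrative only)."""
    members: Set[str]             # Access: parties admitted to the clean room
    datasets: Dict[str, str]      # Data: dataset name -> owning party
    questions: Dict[str, Question] = field(default_factory=dict)

    def add_question(self, question: Question) -> None:
        # A question may only reference datasets provisioned to this room.
        unknown = question.datasets - set(self.datasets)
        if unknown:
            raise ValueError(f"{question.name} reads datasets not in the room: {sorted(unknown)}")
        self.questions[question.name] = question

    def approve(self, party: str, question_name: str) -> None:
        if party not in self.members:
            raise PermissionError(f"{party} is not a member of this clean room")
        self.questions[question_name].approvals.add(party)

    def can_run(self, party: str, question_name: str, use: str) -> bool:
        question = self.questions[question_name]
        # Every party whose data the question touches must have opted in.
        owners = {self.datasets[name] for name in question.datasets}
        return (
            party in self.members
            and owners <= question.approvals
            and use in question.allowed_uses
        )


room = CleanRoom(
    members={"brand", "publisher"},
    datasets={"brand.crm": "brand", "publisher.exposures": "publisher"},
)
room.add_question(
    Question(
        "overlap_report",
        datasets=frozenset({"brand.crm", "publisher.exposures"}),
        allowed_uses=frozenset({"analytics"}),
    )
)
room.approve("brand", "overlap_report")
before_opt_in = room.can_run("brand", "overlap_report", "analytics")  # publisher has not opted in yet
room.approve("publisher", "overlap_report")
after_opt_in = room.can_run("brand", "overlap_report", "analytics")
disallowed_use = room.can_run("brand", "overlap_report", "marketing_targeting")
```

Deriving the required approvers from dataset ownership, rather than listing them by hand, means adding a partner's dataset to a question automatically makes that partner's opt-in mandatory.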

There are three types of questions that can be used in clean rooms:

- Analytical Questions: These questions return aggregated results to be used for insights, including reports and dashboards.

- List Questions: These questions return lists of identifiers, such as user identifiers or product SKUs, to be used in downstream analytics, data enrichment, or channel activations.

- CleanML: These questions can be used to train machine learning models and/or run inference without any party having to provide direct access to its data or code/IP.
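The difference between the first two question types comes down to the shape of the output. The Python sketch below contrasts an analytical question, which returns only aggregates, with a list question, which returns identifiers for downstream use. The function names, the toy data, and the minimum group-size threshold are all assumptions for illustration; the threshold stands in for the kind of output control described above and is not a documented Habu default. CleanML is not sketched here.

```python
from collections import Counter
from typing import Dict, List, Set

# Assumed output control for this sketch: suppress any aggregate computed over
# fewer than this many individuals. Hypothetical value, not a Habu default.
MIN_GROUP_SIZE = 3


def analytical_question(customers: Set[str], exposures: Dict[str, str]) -> Dict[str, int]:
    """Analytical question: overlap counts per campaign, aggregates only."""
    counts = Counter(
        campaign for user, campaign in exposures.items() if user in customers
    )
    # Drop groups too small to report without risking re-identification.
    return {campaign: n for campaign, n in counts.items() if n >= MIN_GROUP_SIZE}


def list_question(customers: Set[str], exposures: Dict[str, str], campaign: str) -> List[str]:
    """List question: identifiers in the overlap, for downstream activation."""
    return sorted(
        user for user, seen in exposures.items() if seen == campaign and user in customers
    )


# Hypothetical inputs: the brand's customer IDs and the publisher's
# user -> campaign exposure log.
brand_customers = {"u1", "u2", "u3", "u4", "u5"}
publisher_exposures = {
    "u1": "spring", "u2": "spring", "u3": "spring",
    "u4": "summer", "u6": "summer", "u7": "spring",
}

report = analytical_question(brand_customers, publisher_exposures)
# {'spring': 3}; the one-user "summer" overlap is suppressed
audience = list_question(brand_customers, publisher_exposures, "spring")
# ['u1', 'u2', 'u3']
```

Neither party sees the other's full dataset: the analytical question exposes only counts that clear the threshold, and the list question exposes only the overlapping identifiers for one approved campaign.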

You may be wondering: how does Habu perform all of these tasks without putting my data at risk? We've implemented three additional layers of security on top of our existing security measures to cover every part of our Databricks integration pattern:

- The Agent: When you install the agent, Habu gains the ability to create and orchestrate Delta Shares that provide secure access to views of your data inside the Habu workspace. The agent is an automated process that acts only at your direction; no individual at Habu can control its actions, and all of its actions are fully auditable.

- The Customer: We leverage Databricks' service principal concept to create a service principal per customer, or organization, upon activation of the Habu integration. You can think of the service principal as an identity created to run automated tasks or jobs according to pre-set access controls. This service principal is leveraged to create Delta Shares between you and Habu. By implementing the service principal on a customer level, we ensure that Habu can't perform actions in your account based on directions from other customers or Habu users.

- The Question: Finally, in order to fully secure partner relationships, we also apply a service principal to each question created within a clean room upon question execution. This means no individual users have access to the data provisioned to the clean room. Instead, when a question is run (and only when it is run), a new service principal user is created with the permissions to run the question. Once the run is finished, the service principal is decommissioned.
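The per-question lifecycle can be sketched with a Python context manager: a principal scoped to exactly the datasets a question needs is created when the run starts and removed when it ends, even if the run fails. `PrincipalRegistry` and `question_principal` are hypothetical names standing in for Databricks identity management; this sketch does not call any Databricks API, and the audit log is a simplified stand-in for real audit records.

```python
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Iterable, Iterator, List, Tuple


@dataclass
class PrincipalRegistry:
    """Hypothetical identity store: service principals and the datasets each may read."""
    grants: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def create(self, datasets: Iterable[str]) -> str:
        principal_id = f"sp-{uuid.uuid4().hex[:8]}"
        self.grants[principal_id] = frozenset(datasets)
        self.audit_log.append(("create", principal_id))
        return principal_id

    def delete(self, principal_id: str) -> None:
        self.grants.pop(principal_id, None)
        self.audit_log.append(("delete", principal_id))

    def can_read(self, principal_id: str, dataset: str) -> bool:
        # Unknown or decommissioned principals have no permissions at all.
        return dataset in self.grants.get(principal_id, frozenset())


@contextmanager
def question_principal(registry: PrincipalRegistry, datasets: Iterable[str]) -> Iterator[str]:
    """Create a principal for a single question run and always decommission it afterwards."""
    principal_id = registry.create(datasets)
    try:
        yield principal_id
    finally:
        registry.delete(principal_id)  # runs on success and on failure


registry = PrincipalRegistry()
with question_principal(registry, {"brand.crm", "publisher.exposures"}) as sp:
    allowed_during_run = registry.can_read(sp, "brand.crm")
    other_data_during_run = registry.can_read(sp, "brand.payments")  # not granted to this question
allowed_after_run = registry.can_read(sp, "brand.crm")
```

Putting the teardown in a `finally` clause is what guarantees the "decommissioned once the run is finished" property: no code path through the run leaves a live principal behind.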

Wrap Up

There are many benefits to our integrated solution with Databricks. Delta Sharing makes collaborating on large volumes of data from the Lakehouse fast and secure. Sharing data from your medallion architecture in a clean room opens up new insights. And finally, the ability to run Python and other code in containerized packages will enable customers to train and validate models, from traditional ML to large language models (LLMs), on private data.

Together, the security mechanisms inherent to Databricks and the security and governance workflows built into Habu let you focus not only on the details of the data workflows behind your collaborations, but also on the business outcomes of your data partnerships with your most strategic partners.

To learn more about Habu's partnership with Databricks, register now for our upcoming joint webinar on May 17, "Unlock the Power of Secure Data Collaboration with Clean Rooms." Or, connect with a Habu representative for a demo so you can experience the power of Habu + Databricks for yourself.
