Introducing the Databricks AI Security Framework (DASF)

An actionable framework to manage AI security

We are excited to announce the release of the Databricks AI Security Framework (DASF) version 1.0 whitepaper! The framework is designed to improve teamwork across business, IT, data, AI, and security groups. It simplifies AI and ML concepts by cataloging a knowledge base of AI security risks based on real-world attack observations, offers a defense-in-depth approach to AI security, and gives practical advice for immediate application.

Machine learning (ML) and generative AI (GenAI) are transforming the future of work by enhancing innovation, competitiveness, and employee productivity. However, organizations are grappling with the dual challenge of leveraging artificial intelligence (AI) technologies for opportunities while managing potential security and privacy risks, such as data breaches and regulatory non-compliance.

This blog gives an overview of the DASF, explains how to leverage it to secure your organization's AI initiatives, and shares an update on our external momentum, so that you can securely guide the adoption of AI in your organization.

Building the foundation of the Databricks AI Security Framework

AI is impacting every industry and transforming the way we work. Now more than ever, leaders want to leverage data and AI to transform their organizations. AI Security and Governance are critical to establishing trust in your organization's AI goals. According to Gartner, AI trust, risk, and security management is the #1 strategy trend in 2024 that will factor into business and technology decisions, and by 2026, AI models from organizations that operationalize AI transparency, trust, and security will achieve a 50% increase in terms of adoption, business goals, and user acceptance.

Earlier this year, we announced Databricks' acquisition of MosaicML and we shared more about our commitment to Responsible AI. Over the past few months, we have continued furthering our commitment and momentum as an industry leader in AI through new partnerships. To complement these investments, the Databricks Security team now hosts AI Security workshops for CISOs to educate organizations on how to successfully shepherd their AI journey in a risk-conscious manner. We learned from our customers that security and governance teams struggle to find reliable material that demystifies what AI is, how it works, and what security risks may arise as these technologies are rolled out.

We used these learnings to develop the DASF, which helps business, IT, data, AI, and security teams work better together when deploying AI, under the following principles:

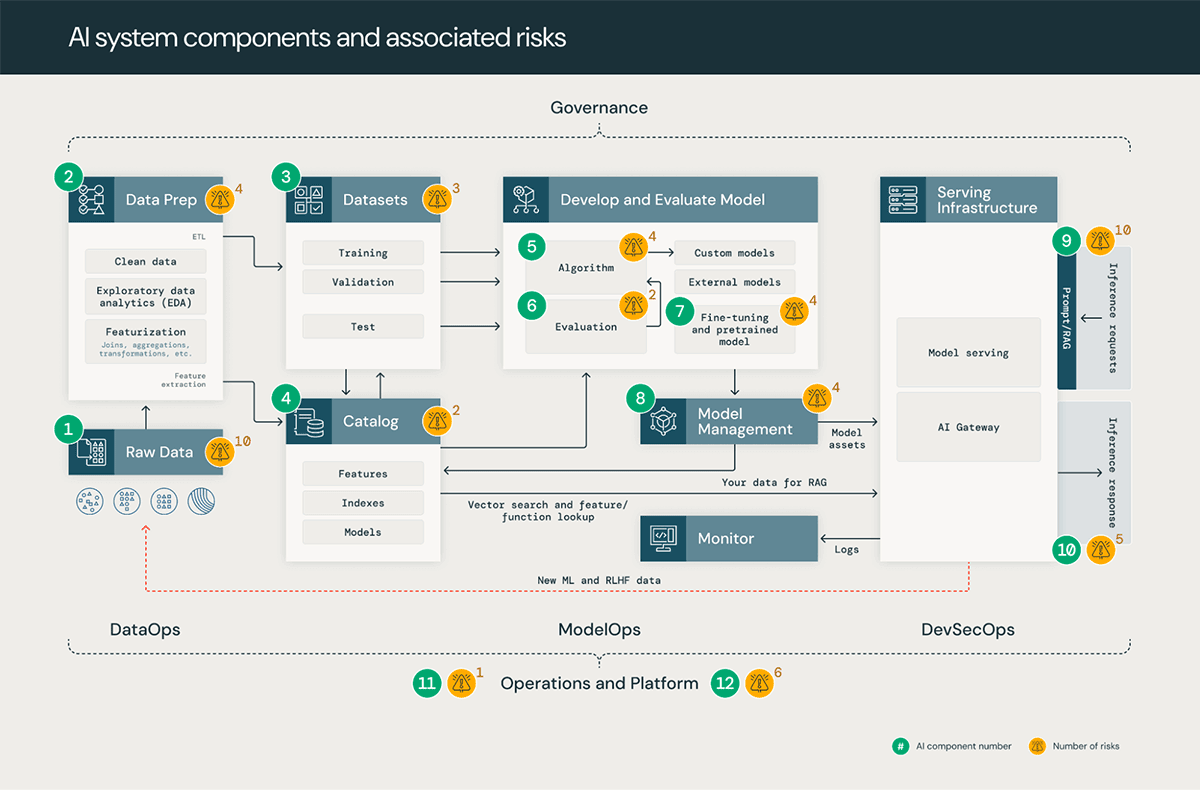

- Demystify AI and ML: The DASF breaks down the 12 components of an AI system, what types of AI models exist, and how they all work together. This helps teams collaborate by defining a common nomenclature for the AI systems, processes, and personas involved.

- Provide a defense-in-depth approach to securing AI: In our conversations, security leaders tell us they need clarity on whether AI security is a cybersecurity issue, an adversarial machine learning issue, or something entirely new. The reality is, it is all of the above. The DASF provides a framework to understand the 55 security risks across the three stages of any AI system based on your specific AI use cases and deployment models. We also map the risks to common AI security frameworks such as MITRE ATLAS, OWASP Top 10 for LLMs, and NIST AML Taxonomy.

- Deliver actionable recommendations: Many existing frameworks do a good job outlining the risks associated with AI, but they do not clearly outline the necessary controls to mitigate them. The DASF recommends 53 controls applicable to any data and AI platform you use. If you are a Databricks customer, we go one step further: we have included links to Databricks documentation (by cloud) to provide specific instructions on implementing each mitigating control!

Getting started with the Databricks AI Security Framework

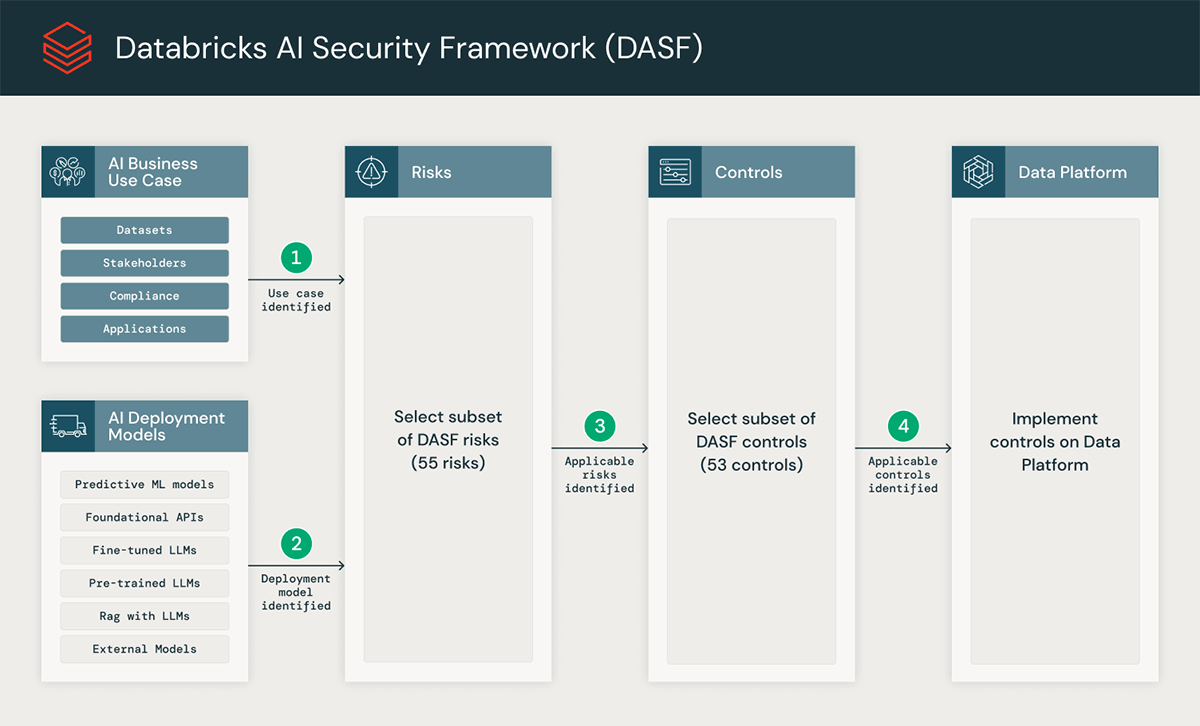

The DASF was designed so that no matter what data and AI platform you use, you can walk away with an end-to-end risk profile for your organization's AI deployment needs and a concrete set of controls you should consider implementing. To get started with the DASF, we recommend the following approach, as illustrated in the figure above:

Step 1 - Identify the AI business use case: Work with your stakeholders to identify your organization's AI use cases, whether already implemented or in the planning phases. We recommend leveraging Databricks Solution Accelerators, which are purpose-built guides to speed up results across your most common and high-impact AI and ML use cases. Review what datasets will be used, what compliance requirements may apply, and which applications these AI systems will impact to come up with the baseline required capabilities of your data and AI platform.

Step 2 - Determine the AI deployment model: Choose an appropriate deployment model such as predictive ML models, RAG-LLMs, fine-tuned LLMs, pretrained LLMs, foundation models, and external models. Each deployment model has a different shared responsibility split across the 12 AI system components and among your organization, your data and AI platform, and any partners involved.

Step 3 - Select the most pertinent risks: From our documented list of 55 security risks, pinpoint the most relevant to your organization based on the use case and deployment model your organization is implementing. For most use cases, only a small subset of the 55 risks will need to be addressed.

Step 4 - Choose and implement controls: Select controls that align with your organization's risk appetite. These controls are defined generically for compatibility with any data and AI platform. Our framework also provides guidelines on tailoring these controls for the Databricks Data Intelligence Platform, with specific Databricks implementation guidance by cloud. Use these controls alongside your organization's policies, and make sure the right assurance is in place.
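As a rough illustration of Steps 2 through 4, the sketch below filters a risk catalog by deployment model and collects the controls linked to the selected risks. Everything in it is an assumption made for illustration: the `Risk` class, the `select_risks` and `controls_for` helpers, the deployment model labels, and the placeholder IDs such as `RISK-A` and `CTRL-1` are hypothetical and are not the identifiers or mappings published in the DASF. In practice you would populate the catalog from the whitepaper itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One catalog entry. All fields are illustrative, not DASF's schema."""
    risk_id: str
    component: str                 # AI system component the risk applies to
    deployment_models: frozenset   # deployment models where the risk is relevant
    control_ids: tuple             # controls that mitigate this risk


# Hypothetical placeholder catalog; real entries would come from the whitepaper.
CATALOG = [
    Risk("RISK-A", "raw data",
         frozenset({"predictive_ml", "rag_llm", "fine_tuned_llm"}),
         ("CTRL-1", "CTRL-2")),
    Risk("RISK-B", "model serving",
         frozenset({"rag_llm", "external_model"}),
         ("CTRL-2", "CTRL-3")),
    Risk("RISK-C", "model training",
         frozenset({"predictive_ml", "fine_tuned_llm"}),
         ("CTRL-4",)),
]


def select_risks(catalog, deployment_model):
    """Step 3: keep only the risks relevant to the chosen deployment model."""
    return [r for r in catalog if deployment_model in r.deployment_models]


def controls_for(risks):
    """Step 4: collect the linked controls, de-duplicated, in first-seen order."""
    ordered = {}
    for risk in risks:
        for control_id in risk.control_ids:
            ordered.setdefault(control_id, None)
    return list(ordered)


if __name__ == "__main__":
    chosen_model = "rag_llm"  # Step 2: the deployment model picked for the use case
    relevant = select_risks(CATALOG, chosen_model)
    print([r.risk_id for r in relevant])
    print(controls_for(relevant))
```

The resulting control list is only a starting point: which controls you actually implement still depends on your organization's risk appetite and policies.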

If this still feels like a lot, we will continue hosting our AI Security workshops for those who want an interactive experience.

Fostering AI security through industry allies, partners, and customers

In the security industry, cultivating peer collaboration is imperative for ensuring collective safety, security, and success. We would not be able to publish a document like the DASF today without the standards, frameworks, and third-party tools that precede us, such as the Guidelines for Secure AI System Development, NIST AI Risk Management Framework, and the Multilayer Framework for Good Cybersecurity Practices for AI. Many more are referenced throughout the DASF and can be reviewed in the resources section.

While we did countless internal reviews with our AI, ML, and security experts at Databricks, we also met with 15 AI security industry leaders including those from HITRUST, Carnegie Mellon University, Capital One, Protect AI, and Barracuda (and more quoted below!). We thank them for their time, insights, and feedback on how to make the DASF most practical for our peers. A full list of reviewers can be found in our acknowledgments section of the DASF.

"When I think about what makes a good accelerator, it's all about making things smoother, more efficient, and fostering innovation. The DASF is a proven and effective tool for security teams to help their partners get the most out of AI. Additionally, it lines up with established risk frameworks like NIST, so it's not just speeding things up – it's setting a solid foundation in security work." — Riyaz Poonawala, Vice President of Information Security, Navy Federal Credit Union

"Companies need not sacrifice security for AI innovation. The Databricks AI Security Framework is a comprehensive tool to enable AI adoption securely. It not only maps AI security concerns to the AI development pipeline, but makes them actionable for Databricks customers with practical controls. We're pleased to have contributed to the development of this valuable community resource." — Hyrum Anderson, CTO, Robust Intelligence

"The DASF is a great example of Databricks' leadership in AI and is a valuable contribution to the industry at a critical time. We know the greatest risk associated with Artificial Intelligence for the foreseeable future is bad people and this framework offers an effective counter-balance to those cyber criminals. The DASF is a pragmatic, operational, and efficient way to secure your organization." — Chris "Tito" Sestito, CEO and Co-founder of HiddenLayer

Take the first step to expanding a strong AI security foundation – download the Databricks AI Security Framework and engage with our team

The Databricks AI Security Framework whitepaper is available for download on the Databricks Security and Trust Center. We have made a concerted effort to ensure accuracy, and our team is eager to receive your feedback. Should you have any feedback or questions, please reach out to us via email at [email protected]. This alias is also where you can request more information about joining an upcoming AI Security workshop or scheduling a dedicated one for your organization. See our new AI Security page for detailed resources on AI and ML security.
