Introducing Salesforce BYOM for Databricks

Salesforce and Databricks are excited to announce an expanded strategic partnership that delivers a powerful new integration: Salesforce Bring Your Own Model (BYOM) for Databricks. This collaboration enables data scientists and machine learning engineers to leverage the best of both worlds: the robust customer data and business capabilities in Salesforce and the advanced analytics and AI capabilities of Databricks. With this integration, you can now build, train, and deploy custom AI models in Databricks and integrate them into Salesforce to deliver intelligent and personalized customer experiences. Get ready to unlock the full potential of your data and revolutionize the way you engage with your customers.

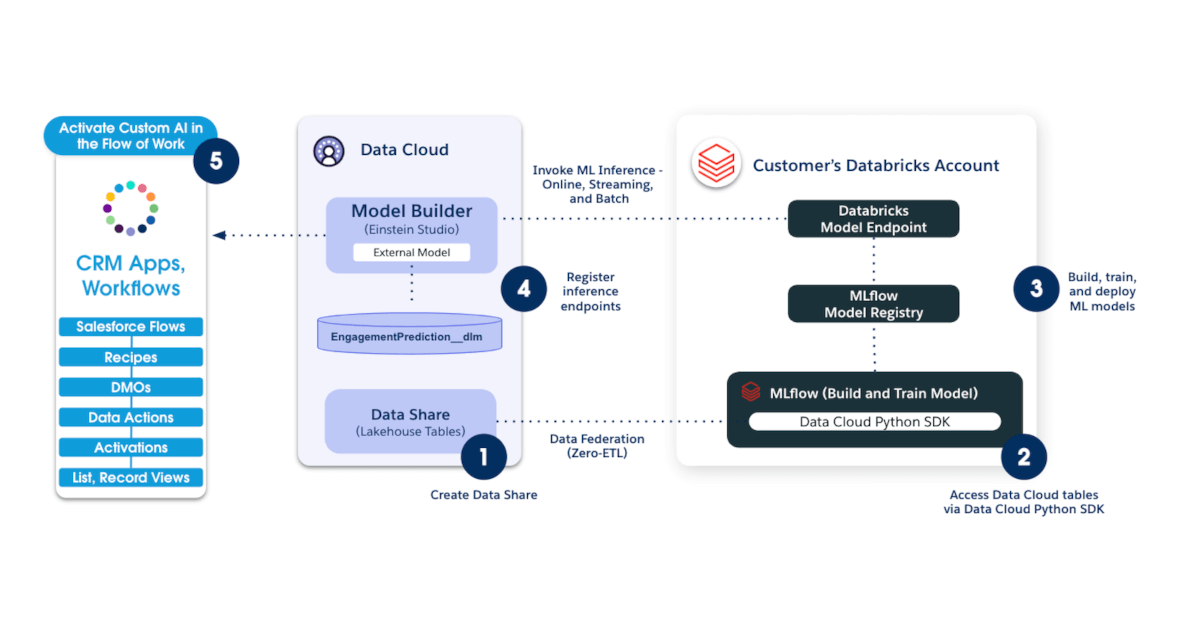

How it works

Salesforce Data Cloud enables teams to collect data from diverse sources and expose it to downstream Salesforce CRM applications and workflows. Users can quickly configure data objects to pull data from other Salesforce applications or external sources and map that data to built-in or custom data models as needed.

Once the data is loaded and mapped, the Python SDK connector can be used to access the Salesforce Data Model Objects (DMOs) from within Databricks. Data from these objects can be combined with other data in your Databricks Lakehouse to power exploratory analysis and featurization in preparation for model training, using all the tools your data scientists and engineers know and love from the Databricks platform, including Notebooks, Feature Engineering, AutoML, and MLflow.
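As a minimal sketch of that access step, the helper below builds a query for one DMO and runs it through a connection object. It assumes the connection exposes a `get_pandas_dataframe(sql)` method, which is how we understand the open-source `salesforce-cdp-connector` package to work; verify this against the version you install. The names `build_dmo_query` and `load_dmo`, and any DMO or column names you pass in, are hypothetical and used for illustration only.

```python
import re
from typing import Any, Optional, Sequence

# Conservative pattern for table and column names, so that values passed in
# by a caller cannot smuggle extra SQL into the query string.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def build_dmo_query(
    dmo_name: str, columns: Sequence[str], limit: Optional[int] = None
) -> str:
    """Build a SELECT statement for one Data Model Object (DMO)."""
    if not columns:
        raise ValueError("at least one column is required")
    for name in (dmo_name, *columns):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    query = f"SELECT {', '.join(columns)} FROM {dmo_name}"
    if limit is not None:
        query += f" LIMIT {int(limit)}"
    return query


def load_dmo(
    conn: Any, dmo_name: str, columns: Sequence[str], limit: Optional[int] = None
) -> Any:
    """Run the query through the connection and return whatever it returns.

    Assumption: `conn` has a `get_pandas_dataframe(sql)` method. Any object
    with that method works, which also makes this easy to test with a stub.
    """
    return conn.get_pandas_dataframe(build_dmo_query(dmo_name, columns, limit))
```

In a Databricks notebook, a pandas DataFrame returned this way can be converted with `spark.createDataFrame(...)` and joined with other Lakehouse tables before feature engineering.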

Once the model is trained and registered in Unity Catalog, your machine learning engineers can quickly spin up a model serving endpoint in Databricks Model Serving. This endpoint can then be made available in Salesforce Data Cloud via the new integration: navigate to Model Builder in Einstein 1 Studio and register the endpoint as a new Databricks model. That's it! Your model is now ready to use in any Salesforce application or workflow.
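Before registering the endpoint in Model Builder, it can help to confirm that it responds to a scoring request. The sketch below builds such a request using only the Python standard library. The `/serving-endpoints/<name>/invocations` path and the `dataframe_records` payload shape follow the Databricks Model Serving documentation as we understand it; the workspace URL, endpoint name, token, and feature names are placeholders you would supply yourself.

```python
import json
import urllib.request
from typing import Any, Mapping, Sequence


def build_scoring_request(
    workspace_url: str,
    endpoint_name: str,
    token: str,
    records: Sequence[Mapping[str, Any]],
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a model serving endpoint."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # One dict per row to score, keyed by feature name.
    body = json.dumps({"dataframe_records": [dict(r) for r in records]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def score(request: urllib.request.Request, timeout: float = 30.0) -> Any:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect and unit test without network access or a live endpoint.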

How it helps

This powerful integration gives customers the ability to solve a broad variety of problems more quickly and easily than ever before.

- Data collection and ingestion: Data is the key ingredient of any machine learning workflow, and getting all the data into one place for training is non-trivial. This integration enables teams to bring data collected from Salesforce's diverse offerings into Data Cloud, and from there combine it with the rest of the enterprise data in the Databricks Lakehouse to power machine learning models.

- Powerful and consistent AI tools and MLOps: Data teams supporting use cases for Salesforce are often also supporting use cases from across the enterprise, leading to an explosion of models to manage. Databricks enables users to manage this complexity at scale with tools like Unity Catalog and MLflow, which enable centralized governance and lifecycle management for your entire data and AI catalog.

- Integration of ML models into business processes: Once a model is built and deployed, getting it integrated with downstream business processes is often a key challenge. Businesses that run their sales, service, or marketing functions on Salesforce can now easily integrate Databricks machine learning models into any business process with a few clicks.

These three core capabilities work together to give your data teams superpowers. When data and application teams can collaborate quickly using the best parts of each platform, the business becomes more agile and can apply AI-powered optimization across all of its functions.

Example use cases

Given the breadth of Salesforce applications and their ability to power sales, service, marketing, and commerce, the number of use cases you can imagine with this integration is very large. Likewise, since the Databricks Lakehouse can manage data and AI assets across all of those functions as well as the rest of your business, those use cases can draw on all of your enterprise data and AI tooling. Here are a few examples:

- Sales Efficiency: Focus limited resources on the most promising leads using AI models based on historical sales data and customer interactions to prioritize by likelihood to convert.

- Deal Forecasting and Next Best Action: Identify patterns and trends in sales data to forecast deal closures and recommend next best actions to sales reps, significantly increasing the probability of winning a deal.

- Service Agility: Detect problems more quickly and resolve them faster and more efficiently for a huge positive multiplier effect on customer satisfaction and retention as well as reducing cost and workload for your service teams. This includes models for automated case routing, case history analysis, and customer sentiment analysis to predict and mitigate negative outcomes.

- Customer Segmentation: Group customers based on their behavior, preferences, and purchase history so your marketers can tailor campaigns to increase engagement.

- Customer Journey Prediction: Personalize the customer journey by anticipating future actions so experiences can be dynamically adapted to match preferences.

- Product Recommendation: Incorporate browsing history, previous behaviors, and knowledge of what similar customers have liked and bought to suggest the products your customers will love faster and with less friction.

- Demand Forecasting: Combine personalization with other data sources to analyze trends and seasonality to drive demand forecasting models enabling more effective inventory management and supply chain operations.

How to get started

To help get your models into production faster, please check out our Salesforce Databricks BYOM solution accelerator. The solution accelerator is a series of notebooks and instructions that walk through all of the steps needed to create a robust ML pipeline integrating data from Salesforce Data Cloud and the Databricks Lakehouse, and then deploy it for integration into business processes in any of your Salesforce applications. The example it uses is based on a fictional company called Northern Trail Outfitters that wants to predict customers' product preferences to help deliver personalized recommendations. It uses synthesized Customer 360 data stored in Data Cloud to drive the model building process in Databricks, and then shows how to refer to that model from Salesforce.

These are just a few examples of use cases that can help your business deliver value with data and AI. Get started today with our new BYOM solution accelerator!

