Machine Learning with Unity Catalog on Databricks: Best Practices

Summary

- Build and deploy ML models seamlessly with Unity Catalog, from data preprocessing to model training and deployment.

- Ensure secure collaboration by leveraging role-based access control, group clusters, and governance features.

- Optimize compute resources with dedicated group clusters and Delta Live Tables for efficient ML workflows.

Building an end-to-end AI or ML platform often requires multiple technology layers for storage, analytics, business intelligence (BI) tools, and ML models in order to analyze data and share learnings with business functions. The challenge is applying consistent and effective governance controls across these layers and the different teams that work on them.

Unity Catalog is Databricks’ built-in, centralized metadata layer designed to manage data access, security, and lineage. It also serves as the foundation for search and discovery within the platform. Unity Catalog facilitates collaboration among teams by offering robust features like role-based access control (RBAC), audit trails, and data masking, ensuring sensitive information is protected without hindering productivity. It also supports the end-to-end lifecycles for ML models.

This blog post walks through the end-to-end machine learning lifecycle on Databricks with Unity Catalog, including how teams can collaborate by sharing compute resources.

The example in this article uses a dataset containing the number of COVID-19 cases by date in the US, with additional geographical information. The goal is to forecast how many cases will occur in the US over the next 7 days.

Key Features for ML on Databricks

Databricks has released several features that improve support for ML with Unity Catalog:

- Databricks Runtime for Machine Learning (Databricks Runtime ML): automates the creation of a cluster with pre-built machine learning and deep learning infrastructure, including the most common ML and DL libraries.

- Dedicated group clusters: create a Databricks Runtime ML compute resource assigned to a group using the Dedicated access mode.

- Fine-grained access control on dedicated access mode: enables fine-grained access control on queries that run on Databricks Runtime ML in dedicated access mode. This supports materialized views, streaming tables, and standard views.

Requirements

- The workspace must be enabled for Unity Catalog. Workspace admins can refer to the documentation on enabling a workspace for Unity Catalog.

- You must use Databricks Runtime 15.4 LTS ML or above.

- A workspace admin must enable the Compute: Dedicated group clusters preview using the Previews UI. See Manage Databricks Previews.

- If the workspace has Secure Egress Gateway (SEG) enabled, pypi.org must be added to the Allowed domains list. See Managing network policies for serverless egress control.

Set up a group

To enable collaboration, an account admin or a workspace admin needs to set up a group:

- Click your user icon in the upper right and click Settings

- In the “Workspace Admin” section, click “Identity and access”, then click “Manage” in the Groups section

- Click “Add group”, then click “Add new”

- Enter the group name, and click Add

- Search for your newly created group and verify that the Source column says “Account”

- Click your group’s name in the search results to go to group details

- Click the “Members” tab and add desired members to the group

- Click the “Entitlements” tab and check both “Workspace access” and “Databricks SQL access” entitlements

- If you want to manage the group from a non-admin account, grant that account “Group: Manager” access in the “Permissions” tab

- NOTE: A user account MUST be a member of the group in order to use group clusters. Being a group manager is not sufficient.

Enable Dedicated group clusters

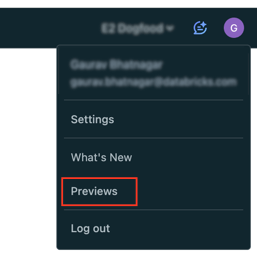

Dedicated group clusters are in Public Preview. To enable the feature, a workspace admin uses the Previews UI:

- Click your username in the top bar of the Databricks workspace.

- From the menu, select Previews.

- Turn on the toggle for Compute: Dedicated group clusters.

Create Group compute

Dedicated access mode is the latest version of single user access mode. With dedicated access, a compute resource can be assigned to a single user or group, only allowing the assigned user(s) access to use the compute resource.

To create a Databricks Runtime ML compute resource assigned to a group:

- In your Databricks workspace, go to Compute and click Create compute.

- Check “Machine learning” in the Performance section to choose Databricks Runtime ML. Choose “15.4 LTS” in Databricks Runtime. Select the instance types and number of workers as needed.

- Expand the Advanced section on the bottom of the page.

- Under Access mode, click Manual and then select Dedicated (formerly: Single-user) from the dropdown menu.

- In the Single user or group field, select the group you want assigned to this resource.

- Configure the other desired compute settings as needed then click Create.

After the cluster starts, all users in the group can share the same cluster. For more details, see best practices for managing group clusters.
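For teams that script their compute, the same settings could also be written as a request body for the Clusters API. The sketch below only builds that body as a Python dictionary and does not call any service. The field names are standard Clusters API fields, but several details are assumptions to verify against the current API reference: that a group name can be passed in `single_user_name`, that `SINGLE_USER` is the value that selects dedicated access mode, and the example group name and node type, which are hypothetical.

```python
import json


def group_cluster_spec(group_name, node_type_id, num_workers=2):
    """Build a cluster spec for a Databricks Runtime ML cluster assigned to a group."""
    if not group_name:
        raise ValueError("group_name must not be empty")
    return {
        "cluster_name": f"{group_name}-ml",
        # 15.4 LTS ML is the minimum runtime listed under Requirements.
        "spark_version": "15.4.x-cpu-ml-scala2.12",
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        # Assumption: dedicated access mode is requested with the legacy
        # single-user value, and the group name goes where a user name would.
        "data_security_mode": "SINGLE_USER",
        "single_user_name": group_name,
    }


# Hypothetical group name and node type, for illustration only.
spec = group_cluster_spec("ml-forecasting-team", "i3.xlarge")
print(json.dumps(spec, indent=2))
```

Keeping the spec in a function makes it easy to review in version control before it is submitted through whichever client the team already uses.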

Data Preprocessing via Delta Live Tables (DLT)

In this section, we will:

- Read the raw data and save it to a Unity Catalog volume

- Read the records from the ingestion table and use Delta Live Tables expectations to create a new table that contains cleansed data.

- Use the cleansed records as input to Delta Live Tables queries that create derived datasets.

To set up a DLT pipeline, you may need the following permissions:

- USE CATALOG, BROWSE for the parent catalog

- ALL PRIVILEGES or USE SCHEMA, CREATE MATERIALIZED VIEW, and CREATE TABLE privileges on the target schema

- ALL PRIVILEGES or READ VOLUME and WRITE VOLUME on the target volume
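The narrower set of privileges listed above can be written as Unity Catalog `GRANT` statements. The following is a minimal sketch that only generates the SQL text. The catalog, schema, volume, and group names in the usage line are hypothetical, and an admin would still need to run each statement, for example with `spark.sql(stmt)` in a notebook.

```python
def pipeline_grants(catalog, schema, volume, principal):
    """Return GRANT statements covering the privileges listed above."""
    target_schema = f"`{catalog}`.`{schema}`"
    target_volume = f"{target_schema}.`{volume}`"
    return [
        # Parent catalog: USE CATALOG and BROWSE.
        f"GRANT USE CATALOG, BROWSE ON CATALOG `{catalog}` TO `{principal}`",
        # Target schema: the non-ALL PRIVILEGES alternative.
        "GRANT USE SCHEMA, CREATE MATERIALIZED VIEW, CREATE TABLE "
        f"ON SCHEMA {target_schema} TO `{principal}`",
        # Target volume: read and write access for the raw files.
        f"GRANT READ VOLUME, WRITE VOLUME ON VOLUME {target_volume} TO `{principal}`",
    ]


# Hypothetical object and group names, for illustration only.
statements = pipeline_grants("main", "covid", "raw_files", "ml-forecasting-team")
for stmt in statements:
    print(stmt)
```

Granting to the group rather than to individual users keeps the permissions aligned with the group that the dedicated cluster is assigned to.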

- Download the data to a volume: This example loads data from a Unity Catalog volume. Replace <catalog-name>, <schema-name>, and <volume-name> with the catalog, schema, and volume names for a Unity Catalog volume. The provided code attempts to create the specified schema and volume if these objects do not exist. You must have the appropriate privileges to create and write to objects in Unity Catalog. See Requirements.

- Create a pipeline. To configure a new pipeline, do the following:

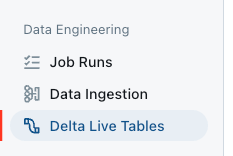

- In the sidebar, click Delta Live Tables in the Data Engineering section.

- Click Create pipeline.

- In Pipeline name, type a unique pipeline name.

- Select the Serverless checkbox.

- In Destination, to configure a Unity Catalog location where tables are published, select a Catalog and a Schema.

- In Advanced, click Add configuration and then define pipeline parameters for the catalog, schema, and volume to which you downloaded data using the following parameter names:

- my_catalog

- my_schema

- my_volume

- Click Create.

The pipelines UI appears for the new pipeline. A source code notebook is automatically created and configured for the pipeline.

- Declare materialized views and streaming tables. You can use Databricks notebooks to interactively develop and validate source code for Delta Live Tables pipelines.

- Click the link under the Source code field in the Pipeline details panel to open the notebook

- Develop code with Python or SQL. For details, see Develop pipeline code with Python or Develop pipeline code with SQL.

- Start a pipeline update by clicking the start button at the top right of the notebook or in the DLT UI. The resulting tables are published to the catalog and schema configured for the pipeline, `<my_catalog>.<my_schema>`.
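The steps in this section can be sketched in Python as follows. This is an illustration under stated assumptions rather than the pipeline used for this article. The table names `covid_raw` and `covid_cleansed`, the expectation rules, and the assumption that the raw file is a CSV with a header row and `date` and `cases` columns are all hypothetical. Only `covid_case_by_date` and the `my_catalog`, `my_schema`, and `my_volume` parameters come from the text. The table definitions are wrapped in a function that receives `dlt` and `spark`, so the helpers can be imported and checked outside a pipeline.

```python
# Data-quality rules for the cleansed table. The `date` and `cases` columns are
# the ones used later for forecasting; the rules themselves are assumptions.
EXPECTATIONS = {
    "valid_date": "date IS NOT NULL",
    "non_negative_cases": "cases >= 0",
}


def setup_statements(catalog, schema, volume):
    """SQL that creates the schema and volume if they do not exist yet."""
    return [
        f"CREATE SCHEMA IF NOT EXISTS {catalog}.{schema}",
        f"CREATE VOLUME IF NOT EXISTS {catalog}.{schema}.{volume}",
    ]


def volume_path(catalog, schema, volume):
    """Path under which files in a Unity Catalog volume are addressed."""
    return f"/Volumes/{catalog}/{schema}/{volume}/"


def register_tables(dlt, spark):
    """Declare the pipeline datasets; call this from the pipeline notebook."""
    from pyspark.sql import functions as F  # available on Databricks compute

    # Pipeline parameters defined under Advanced > Add configuration.
    source = volume_path(
        spark.conf.get("my_catalog"),
        spark.conf.get("my_schema"),
        spark.conf.get("my_volume"),
    )

    @dlt.table(comment="Raw COVID-19 records read from the volume.")
    def covid_raw():
        return (
            spark.read.format("csv")
            .option("header", True)
            .option("inferSchema", True)
            .load(source)
        )

    @dlt.table(comment="Records that pass the data-quality expectations.")
    @dlt.expect_all_or_drop(EXPECTATIONS)
    def covid_cleansed():
        return dlt.read("covid_raw")

    @dlt.table(comment="Total cases per date, used as forecasting input.")
    def covid_case_by_date():
        return (
            dlt.read("covid_cleansed")
            .groupBy("date")
            .agg(F.sum("cases").alias("cases"))
        )
```

In the pipeline source notebook, the datasets would be declared with `import dlt` followed by `register_tables(dlt, spark)`. The download step runs separately in a regular notebook: each string from `setup_statements(...)` is passed to `spark.sql`, and the raw file is then copied under `volume_path(...)`.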

Model Training on the DLT Materialized View

We will launch a serverless forecasting experiment on the materialized view generated by the DLT pipeline.

- Click Experiments in the Machine Learning section of the sidebar

- In the Forecasting tile, select Start training

- Fill in the configuration form:

- Select the materialized view as the Training data: `<my_catalog>.<my_schema>.covid_case_by_date`

- Select date as the Time column

- Select Days as the Forecast frequency

- Enter 7 as the Forecast horizon

- Select cases as the Target column in the Prediction section

- Select `<my_catalog>.<my_schema>` as the Model registration location

- Click Start training to start the forecasting experiment.

After training completes, the prediction results are stored in the specified Delta table and the best model is registered to Unity Catalog.
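The serverless experiment above is configured through the UI. For comparison, a similar run could be started from a notebook on Databricks Runtime ML with the classic AutoML Python API, `databricks.automl.forecast`. This is a different entry point from the serverless forecasting UI, not the method used in this article, and it does not register the model to Unity Catalog by itself. The sketch below maps the form values to keyword arguments; the helper names are hypothetical.

```python
def forecast_settings():
    """Mirror the form values chosen above as keyword arguments."""
    return {
        "target_col": "cases",  # Prediction section: target column
        "time_col": "date",     # Time column
        "frequency": "D",       # Forecast frequency: Days
        "horizon": 7,           # forecast the next 7 days
    }


def run_forecast(spark, table_name):
    """Start an AutoML forecasting experiment on the given table."""
    # Imported lazily: the module only exists on Databricks Runtime ML.
    from databricks import automl

    return automl.forecast(dataset=spark.table(table_name), **forecast_settings())
```

In a notebook attached to the group cluster, this would be called as `run_forecast(spark, "<my_catalog>.<my_schema>.covid_case_by_date")`.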

From the experiments page, you can choose from the following next steps:

- Select View predictions to see the forecasting results table.

- Select Batch inference notebook to open an auto-generated notebook for batch inferencing using the best model.

- Select Create serving endpoint to deploy the best model to a Model Serving endpoint.
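The auto-generated batch inference notebook is the recommended starting point. As a rough idea of what loading a Unity Catalog model looks like, the sketch below builds a `models:/` URI and loads it with MLflow. The model name `case_forecast` and the version number are hypothetical, since the registered name depends on the experiment, and the input format expected by the loaded model should be taken from the generated notebook.

```python
def registered_model_uri(catalog, schema, model, version):
    """Build an MLflow URI for a model version registered in Unity Catalog."""
    return f"models:/{catalog}.{schema}.{model}/{version}"


def load_registered_model(uri):
    """Load a Unity Catalog model as a generic MLflow pyfunc model."""
    # Imported lazily so the URI helper can be used without MLflow installed.
    import mlflow

    # Point the MLflow client at the Unity Catalog model registry.
    mlflow.set_registry_uri("databricks-uc")
    return mlflow.pyfunc.load_model(uri)


# Hypothetical names and version, for illustration only.
uri = registered_model_uri("main", "covid", "case_forecast", 1)
print(uri)
```

Because the model lives in Unity Catalog, members of the group need the `EXECUTE` privilege on it to load it from the shared cluster.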

Conclusion

In this blog, we explored the end-to-end process of setting up and training forecasting models on Databricks, from data preprocessing to model training. By leveraging Unity Catalog, dedicated group clusters, Delta Live Tables, and AutoML forecasting, we were able to streamline model development and simplify collaboration between teams.
