Introducing MLflow 2.7 with new LLMOps capabilities

New UI for Prompt Engineering and AI Gateway updates

Published: September 14, 2023

by Corey Zumar, Kasey Uhlenhuth and Ridhima Gupta

As part of MLflow 2’s support for LLMOps, we are excited to introduce new prompt engineering capabilities in MLflow 2.7.

Assess LLM project viability with an interactive prompt interface

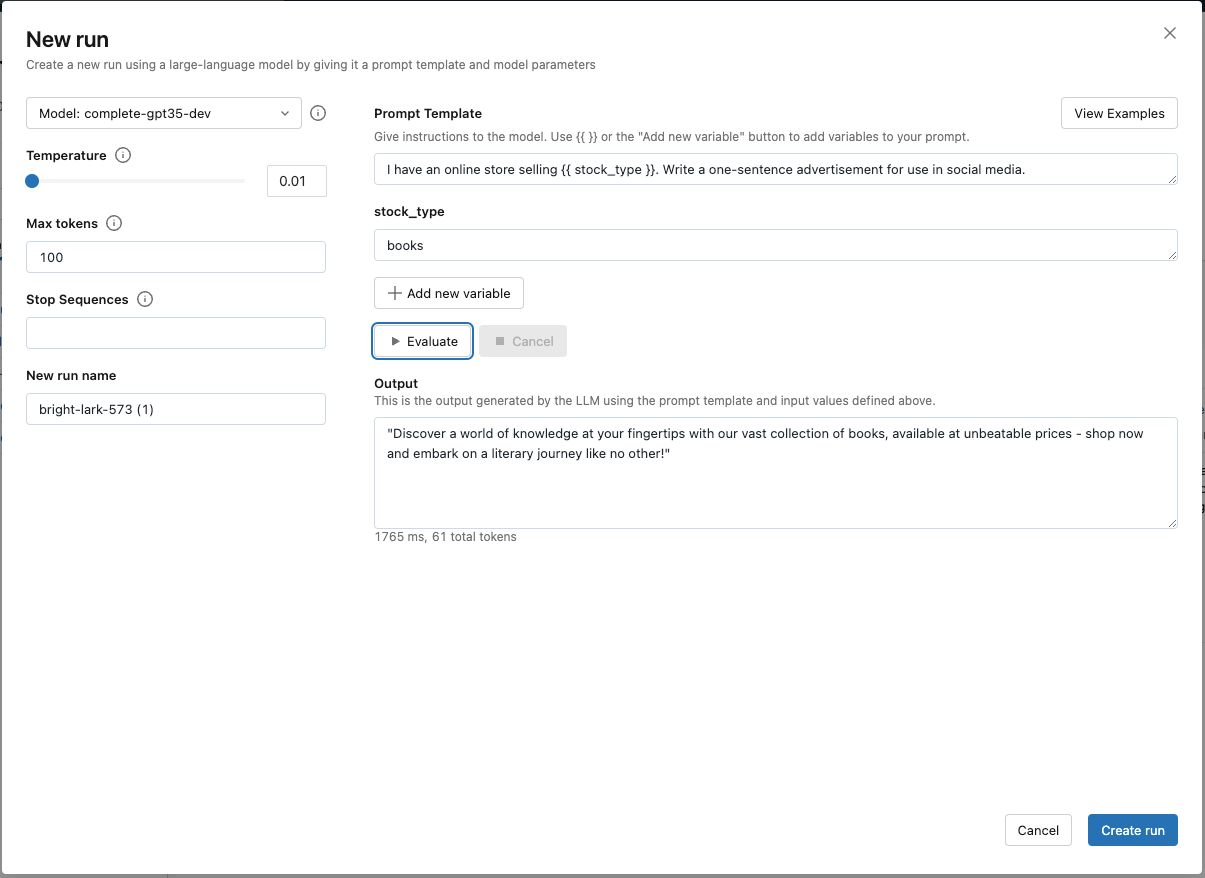

Prompt engineering is a great way to quickly assess whether a use case can be solved with a large language model (LLM). With the new prompt engineering UI in MLflow 2.7, business stakeholders can experiment with various base models, parameters, and prompts to see if outputs are promising enough to start a new project. Simply create a new Blank Experiment (or open an existing one) and click “New Run” to access the interactive prompt engineering tool. Sign up to join the preview here.

Automatically track prompt engineering experiments to build evaluation datasets and identify best model candidates

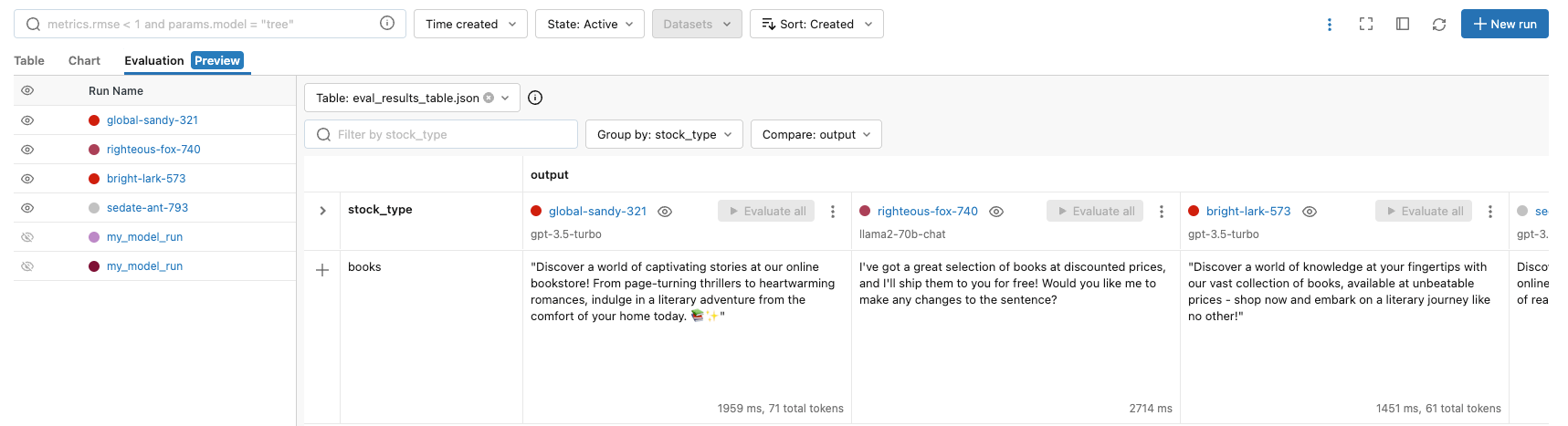

In the new prompt engineering UI, users can explicitly track an evaluation run by clicking “Create run” to log results to MLflow. This button tracks the set of parameters, base models, and prompts as an MLflow model, and the outputs are stored in an evaluation table. This table can then be used for manual evaluation, converted to a Delta table for deeper analysis in SQL, or used as the test dataset in a CI/CD process.

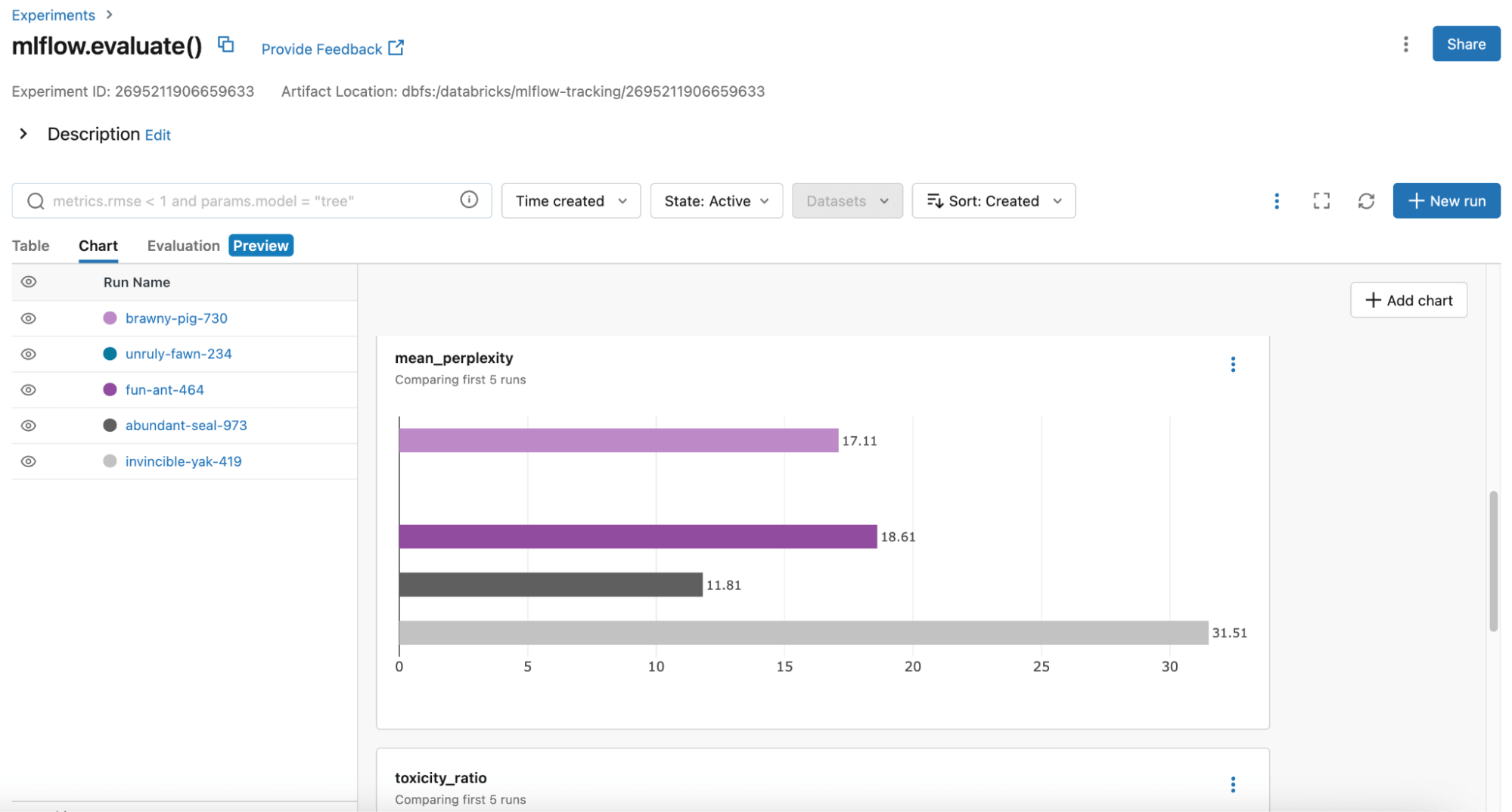

The MLflow Evaluation API keeps gaining metrics to help you identify the best model candidate for production, now including toxicity and perplexity. Users can use the MLflow Table or Chart view to compare model performance.
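As a rough sketch of how candidates might also be compared programmatically, the snippet below runs `mlflow.evaluate` with `model_type="text"` over the same questions for several logged models, then picks the candidate with the best value of a chosen metric. The model URIs, the `inputs` column name, the helper names, and the metric key are all hypothetical; check which metric names your MLflow version actually reports before relying on them.

```python
from typing import Dict, List


def evaluate_candidates(
    model_uris: Dict[str, str], questions: List[str]
) -> Dict[str, Dict[str, float]]:
    """Evaluate each logged model on the same questions and collect its metrics."""
    # Imported lazily so the pure helper below can be used without MLflow installed.
    import mlflow
    import pandas as pd

    # Assumption: the logged models accept a single text column named "inputs".
    eval_df = pd.DataFrame({"inputs": questions})
    metrics_by_model = {}
    for name, uri in model_uris.items():
        # One run per candidate keeps results separate in the experiment.
        with mlflow.start_run(run_name=name):
            result = mlflow.evaluate(model=uri, data=eval_df, model_type="text")
            metrics_by_model[name] = dict(result.metrics)
    return metrics_by_model


def pick_best(
    metrics_by_model: Dict[str, Dict[str, float]],
    metric: str,
    lower_is_better: bool = True,
) -> str:
    """Return the candidate name with the best value for `metric`."""
    # Candidates that did not report the metric are skipped.
    scored = {
        name: metrics[metric]
        for name, metrics in metrics_by_model.items()
        if metric in metrics
    }
    if not scored:
        raise ValueError(f"No candidate reported metric {metric!r}")
    chooser = min if lower_is_better else max
    return chooser(scored, key=scored.get)
```

For metrics such as toxicity or perplexity, lower is usually better, which is why `pick_best` defaults to the minimum; pass `lower_is_better=False` for metrics where higher values are preferable.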

Because the parameters, prompts, and base models are logged as MLflow models, you can deploy a fixed prompt template for a base model with set parameters in batch inference or serve it as an API with Databricks Model Serving. For LangChain users this is especially useful, as MLflow comes with model versioning.
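To make the idea of a "fixed prompt template for a base model with set parameters" concrete, here is a conceptual sketch in plain Python. It is not MLflow's internal representation or API; the `PromptConfig` class, its fields, and the example route name are hypothetical and only illustrate what gets frozen together into one deployable unit.

```python
from dataclasses import dataclass, field
from string import Formatter
from typing import Dict, List


@dataclass(frozen=True)
class PromptConfig:
    """Hypothetical bundle of base model route, prompt template, and parameters."""

    route: str                      # which base model (gateway route) to call
    template: str                   # prompt template with {placeholders}
    params: Dict[str, float] = field(default_factory=dict)  # e.g. temperature

    def variables(self) -> List[str]:
        """Names of the placeholders the template expects."""
        names = {name for _, name, _, _ in Formatter().parse(self.template) if name}
        return sorted(names)

    def render(self, **inputs: str) -> str:
        """Fill in the template, failing loudly if an input is missing."""
        missing = [v for v in self.variables() if v not in inputs]
        if missing:
            raise KeyError(f"Missing template inputs: {missing}")
        return self.template.format(**inputs)


# Example: the same frozen config is reused for every request.
config = PromptConfig(
    route="completions",
    template="Answer concisely.\nQuestion: {question}\nAnswer:",
    params={"temperature": 0.1},
)
prompt = config.render(question="What is MLflow?")
```

Freezing the template and parameters together is what makes results reproducible: every batch row or API request goes through the same prompt and settings, and a new version is created only when one of them changes.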

Democratize ad-hoc experimentation across your organization with guardrails

The MLflow prompt engineering UI works with any MLflow AI Gateway route. AI Gateway routes allow your organization to centralize governance and policies for SaaS LLMs; for example, you can put OpenAI’s GPT-3.5-turbo behind a Gateway route that manages which users can query the route, provides secure credential management, and provides rate limits. This protects against abuse and gives platform teams confidence to democratize access to LLMs across their org for experimentation.
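For readers who prefer code to the UI, the following is a minimal sketch of querying a Gateway route from Python, assuming the `mlflow.gateway` fluent client available in the MLflow 2.7 timeframe. The route name, gateway URI, and helper functions are hypothetical, and the accepted payload fields depend on the route type and provider configured by your platform team.

```python
from typing import Any, Dict


def build_completions_payload(
    prompt: str, temperature: float = 0.0, max_tokens: int = 64
) -> Dict[str, Any]:
    """Build a request body for a completions-style route (assumed field names)."""
    return {"prompt": prompt, "temperature": temperature, "max_tokens": max_tokens}


def query_route(route: str, payload: Dict[str, Any], gateway_uri: str) -> Dict[str, Any]:
    """Send the payload to a Gateway route and return the response."""
    # Imported lazily so the payload helper works without MLflow installed.
    from mlflow.gateway import query, set_gateway_uri

    # Credentials and rate limits live on the gateway side, so the caller
    # only needs the gateway URI and the route name.
    set_gateway_uri(gateway_uri=gateway_uri)
    return query(route=route, data=payload)


payload = build_completions_payload("Summarize MLflow in one sentence.")
```

Calling `query_route("completions", payload, gateway_uri="http://localhost:5000")` would require a running gateway with a route of that name; both values are placeholders here.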

The MLflow AI Gateway supports OpenAI, Cohere, Anthropic, and Databricks Model Serving endpoints. As general-purpose open source LLMs become increasingly competitive with proprietary ones, your organization may also want to quickly evaluate and experiment with these open source models. You can now also call MosaicML’s hosted Llama2-70b-chat.

Try MLflow today for your LLM development!

We are working quickly to support and standardize the most common workflows for LLM development in MLflow. Check out this demo notebook to see how to use MLflow for your use cases. For more resources:

- Sign up for the MLflow AI Gateway Preview (includes the prompt engineering UI) here.

- To get started with the prompt engineering UI, simply upgrade your MLflow version (`pip install --upgrade mlflow`), create an MLflow Experiment, and click “New Run”.

- To evaluate various models on the same set of questions, use the MLflow Evaluation API.

- If there is a SaaS LLM endpoint you want to support in the MLflow AI Gateway, follow the guidelines for contribution on the MLflow repository. We love contributions!
