Announcing Advanced Security and Governance in Mosaic AI Gateway

Support for all GenAI models across the enterprise

Published: September 9, 2024

by Ahmed Bilal, Kasey Uhlenhuth and Archika Dogra

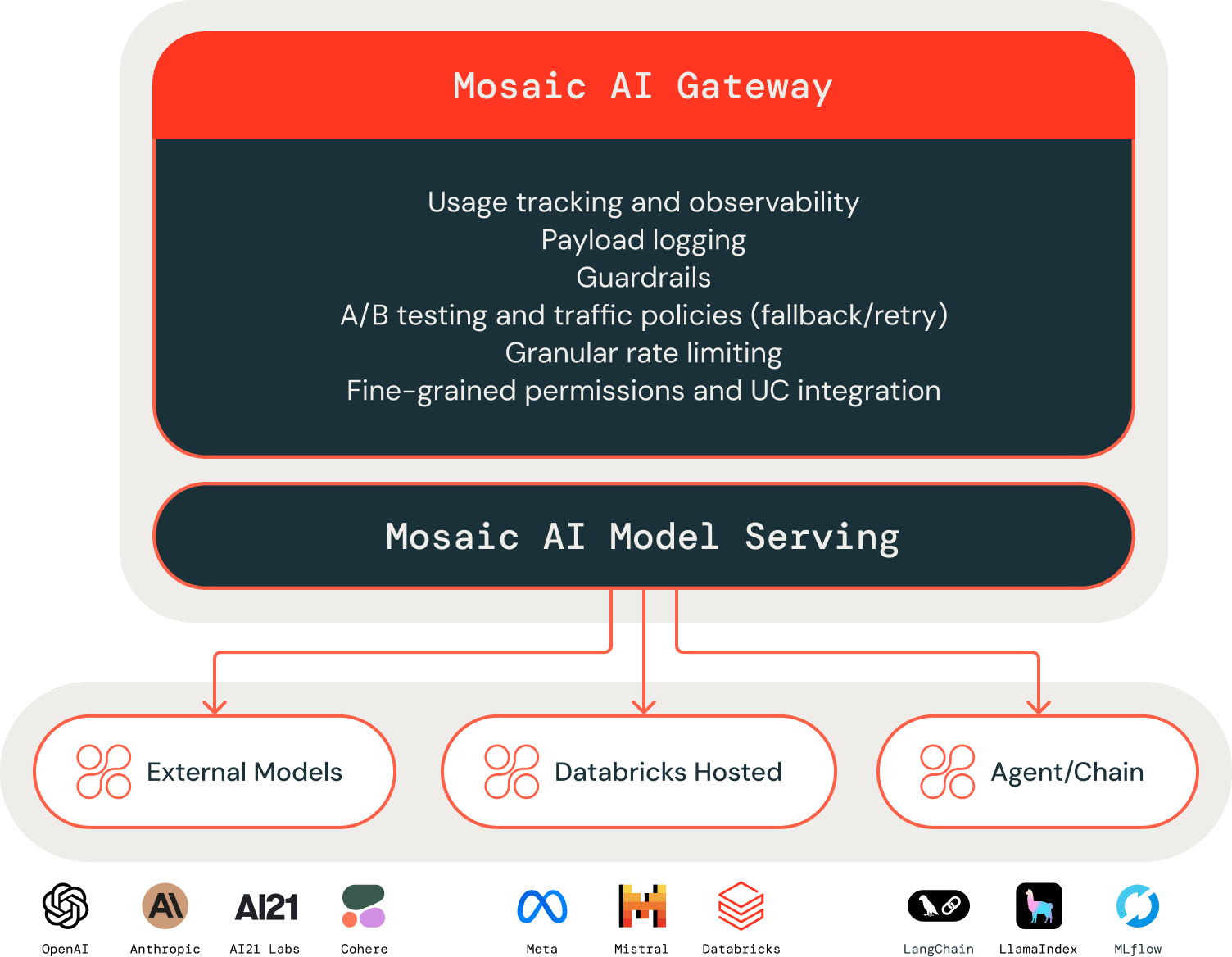

We are excited to introduce several powerful new capabilities to Mosaic AI Gateway, designed to help our customers accelerate their AI initiatives with even greater simplicity, security, and governance.

As enterprises race to implement AI solutions, managing security, compliance, and costs has become increasingly challenging. That’s why we launched Mosaic AI Gateway last year to manage AI traffic for a wide range of models, including OpenAI GPT, Anthropic Claude, and Meta Llama models. Many organizations now use it.

Today’s update introduces advanced features for usage tracking, payload logging, and guardrails, enabling enterprises to apply security and governance to any AI model within the Databricks Data Intelligence Platform. With this release, the Mosaic AI Gateway now provides production-grade security and governance, even for the most sensitive data and traffic.

“Mosaic AI Gateway is providing us with a secure way to consume AI models and connect them to our proprietary data. This enables us to build secure, compliant, and context-aware AI systems, improving our productivity and helping us fulfill our mission to deliver superior healthcare services to all.” — Kapil Ashar, Vice President, Enterprise Data and Clinical Platform, Accolade

What is Mosaic AI Gateway?

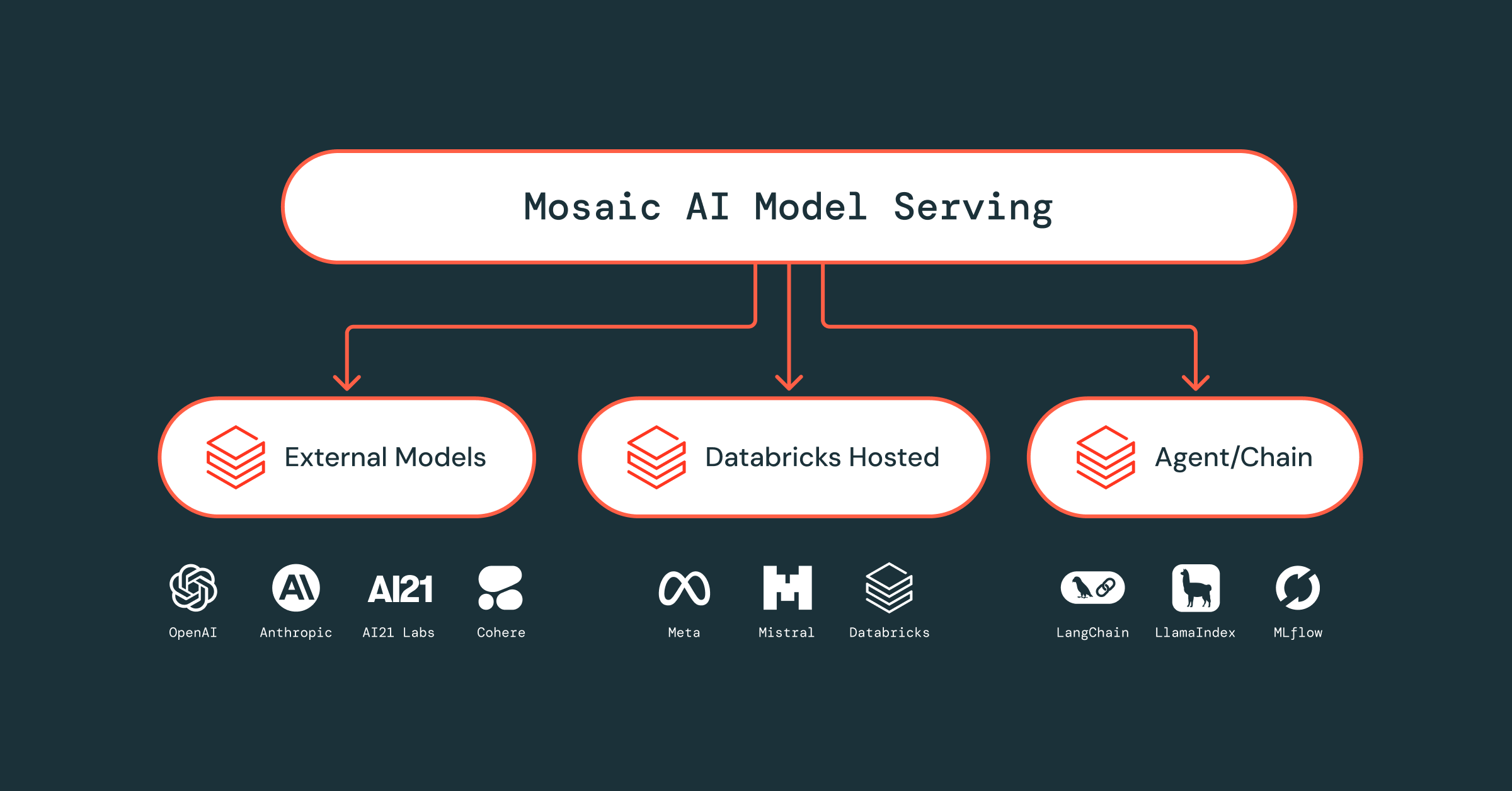

Many enterprises mix and match multiple AI models from different providers to build compound AI systems (e.g., RAG, multi-agent architectures) that achieve the quality needed to deploy GenAI applications into production. However, as enterprises integrate a diverse array of open and proprietary models, they encounter challenges with operational inefficiencies, cost overruns, and potential security risks.

Mosaic AI Gateway addresses these challenges by providing a unified service to access, manage, and secure AI traffic. It allows enterprise administrators to enforce guardrails and monitor AI usage while offering developers a simple interface to quickly experiment, combine models, and safely deploy applications into production. Companies like OMV and Edmunds have adopted Mosaic AI Gateway to accelerate their AI initiatives while maintaining compliance, security, and operational efficiency.

What can you do with Mosaic AI Gateway?

Securely access any AI model

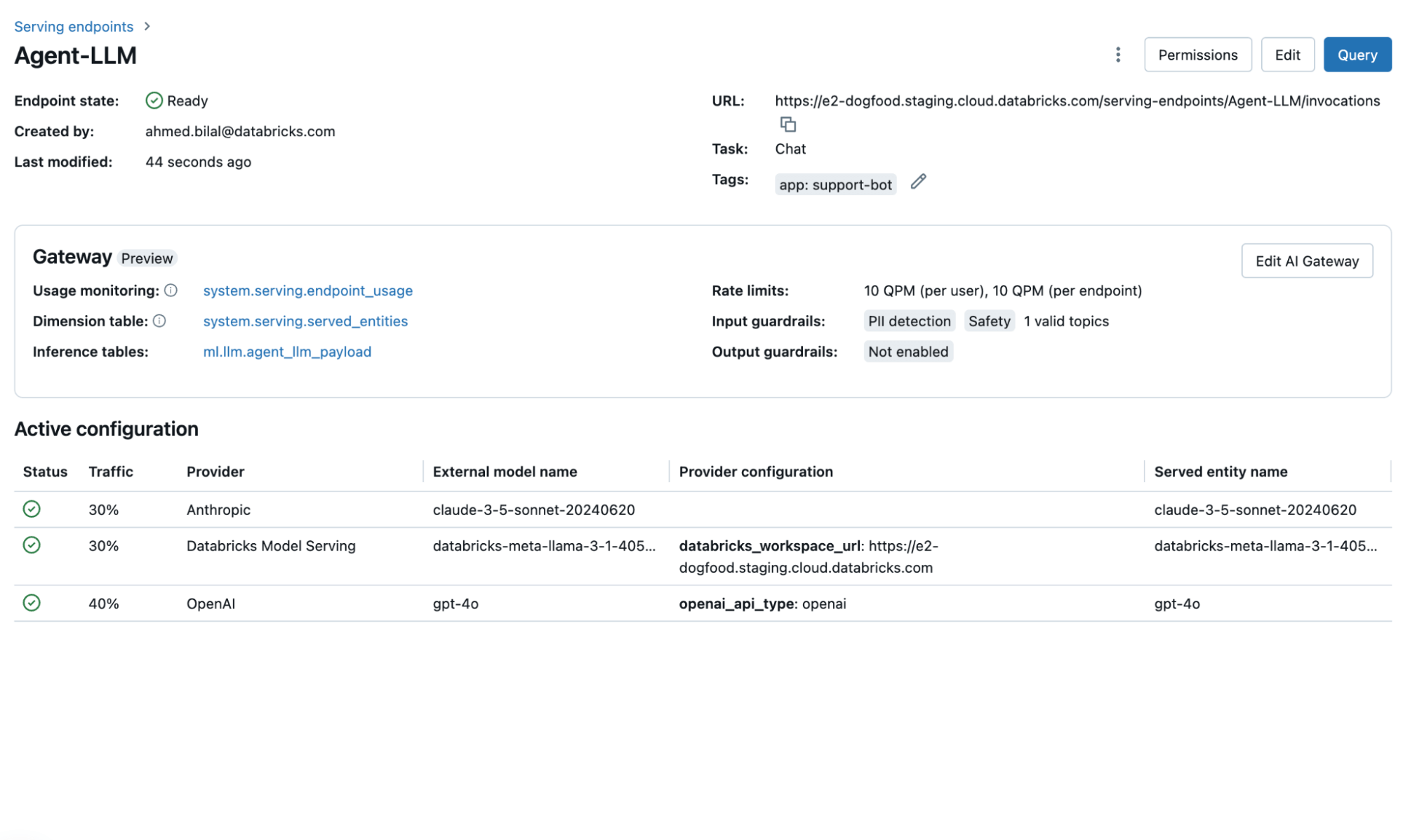

Mosaic AI Gateway simplifies access to any LLM through a single interface (API, SDK, or SQL), significantly reducing both development time and integration costs. You can switch between proprietary and open models without changing the client application. What sets Mosaic AI Gateway apart is its support for all AI assets (traditional models, GenAI models, chains, and agents), eliminating the need for multiple systems. It also supports routing and traffic splitting between models, whether for A/B testing or for distributing workloads across providers to handle high demand.
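To make the traffic-splitting idea concrete, here is a minimal Python sketch of percentage-based routing across two served models, the kind of split used for an A/B test. It is a hypothetical illustration, not Mosaic AI Gateway's routing code: the `TrafficSplitter` class and the endpoint names are invented for the example, and in the gateway itself the split is configured rather than coded.

```python
from __future__ import annotations

import random
from bisect import bisect_right
from collections import Counter
from itertools import accumulate


class TrafficSplitter:
    """Pick a backend model for each request according to integer percentage weights.

    Hypothetical illustration only; not Mosaic AI Gateway's implementation.
    """

    def __init__(self, routes: dict[str, int], seed: int | None = None) -> None:
        weights = list(routes.values())
        if any(w < 0 for w in weights) or sum(weights) != 100:
            raise ValueError("weights must be non-negative and sum to 100")
        self._models = list(routes)
        # Cumulative upper bounds, e.g. {"a": 80, "b": 20} -> [80, 100].
        self._bounds = list(accumulate(weights))
        self._rng = random.Random(seed)

    def pick(self) -> str:
        # Draw an integer in [0, 100) and return the first bucket whose bound exceeds it.
        point = self._rng.randrange(100)
        return self._models[bisect_right(self._bounds, point)]


if __name__ == "__main__":
    # Hypothetical endpoint names for an 80/20 A/B test.
    splitter = TrafficSplitter({"proprietary-model-a": 80, "open-model-b": 20}, seed=7)
    print(Counter(splitter.pick() for _ in range(10_000)))
```

Seeding the generator keeps the split reproducible in tests. A production router would more likely hash a user or session ID so that the same user consistently reaches the same model for the duration of an experiment.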

“With Mosaic AI Gateway, we were able to confidently experiment with a variety of open and proprietary AI models, accelerating innovation while ensuring regulatory compliance. This allowed us to integrate multiple GenAI apps, reducing information search time and improving data-driven decision-making.” — Harisyam Manda, Senior Data Scientist at OMV

Monitor and Debug Production AI Traffic

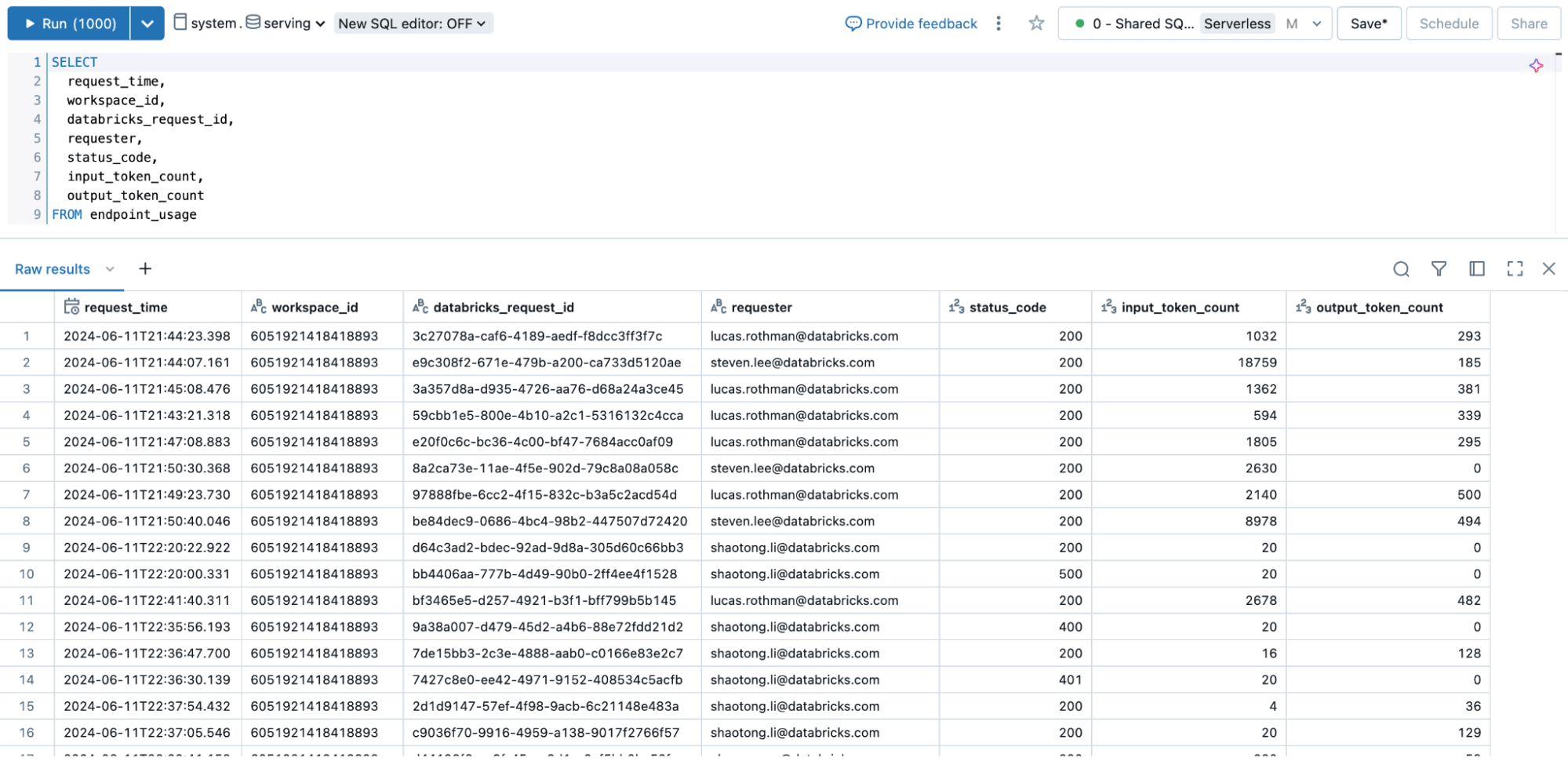

Mosaic AI Gateway now captures usage and payload data for all traffic flowing into and out of endpoints and stores it in Delta tables in Unity Catalog. We are introducing two key tables:

- Endpoint Usage Table: This system table logs every request across all serving endpoints in the account, including requester details, usage statistics, and custom metadata. This data helps optimize spending and maximize ROI—for example, by setting rate limits on experimental endpoints or working with providers to increase quotas for production endpoints.

- Inference Table: This table continuously captures raw inputs, outputs, HTTP status codes, and latency for each serving endpoint. You can use this data to monitor AI app quality, debug issues, or even as a training corpus to fine-tune AI models.
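In practice you would query the inference table with SQL or Spark. The plain-Python sketch below only shows the kind of aggregation involved in monitoring: a per-endpoint error rate derived from HTTP status codes and a nearest-rank p95 latency. The `InferenceRecord` fields are a hypothetical simplification, not the table's actual schema.

```python
from __future__ import annotations

import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class InferenceRecord:
    # Hypothetical, simplified row; the real inference table schema differs.
    endpoint: str
    status_code: int
    latency_ms: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records: list[InferenceRecord]) -> dict[str, dict[str, float]]:
    """Per-endpoint request count, error rate (HTTP status >= 400), and p95 latency."""
    by_endpoint: dict[str, list[InferenceRecord]] = defaultdict(list)
    for rec in records:
        by_endpoint[rec.endpoint].append(rec)

    summary: dict[str, dict[str, float]] = {}
    for endpoint, rows in by_endpoint.items():
        errors = sum(1 for r in rows if r.status_code >= 400)
        summary[endpoint] = {
            "requests": len(rows),
            "error_rate": errors / len(rows),
            "p95_latency_ms": percentile([r.latency_ms for r in rows], 95),
        }
    return summary
```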

The best part? All data is captured in Unity Catalog, making it easy to securely share, search, visualize, and analyze using familiar data tools. For example, you can combine these tables with other tables, such as label data or business metrics, to perform custom analysis or build dashboards tailored to your business needs.
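As a sketch of that kind of custom analysis, the function below joins per-request token usage with a per-endpoint business metric to estimate tokens spent per business outcome. Everything here is hypothetical: the row keys (`endpoint`, `input_tokens`, `output_tokens`) and the "resolved tickets" metric are illustrative assumptions, and on Databricks the same join would normally be written in SQL against the Unity Catalog tables.

```python
from __future__ import annotations

from collections import defaultdict


def tokens_per_outcome(
    usage_rows: list[dict],
    outcomes: dict[str, int],
) -> dict[str, float | None]:
    """Join token usage with a per-endpoint business metric.

    usage_rows: hypothetical rows with "endpoint", "input_tokens", "output_tokens".
    outcomes: hypothetical metric, e.g. resolved support tickets per endpoint.
    """
    # Aggregate total tokens per endpoint.
    totals: dict[str, int] = defaultdict(int)
    for row in usage_rows:
        totals[row["endpoint"]] += row["input_tokens"] + row["output_tokens"]

    # Left join on endpoint: endpoints without recorded outcomes map to None
    # instead of dividing by zero; outcome-only endpoints are dropped.
    result: dict[str, float | None] = {}
    for endpoint, tokens in totals.items():
        count = outcomes.get(endpoint, 0)
        result[endpoint] = tokens / count if count else None
    return result
```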

"Mosaic AI Gateway allows us to securely consume any LLMs, be they OpenAI or other models hosted on Databricks, while ensuring LLM traffic is properly governed and tracked. This has democratized GenAI, allowing us to deploy new use cases like a customer service bot that has improved customer satisfaction." — Manuel Velaro Méndez, Head of Big Data at Santalucía Seguros

Continuously Safeguard Users and Applications

Mosaic AI Gateway includes comprehensive guardrails to secure traffic to any model API, enforcing safety policies and protecting sensitive information in real time. These guardrails include:

- Safety Filtering: Filters harmful content such as hate speech, insults, sexual content, violence, misconduct, and other categories.

- PII Filters: Detects and blocks requests containing sensitive content such as personally identifiable information (PII) in user inputs.

- Keyword Filters: Blocks content containing specified keywords, keeping interactions safe, relevant, and aligned with your business and policies.

- Topic Filters: Keeps your application focused on its intended scope by declining unrelated or risky topics, minimizing liability.
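The toy sketch below shows the basic shape of two of these input checks: a keyword blocklist and a pattern-based PII detector that reject a request before it reaches the model. It is an assumption-laden illustration, not how Mosaic AI Gateway implements its guardrails: the `check_input` function, the `Verdict` type, and both regular expressions are hypothetical, and real PII detection needs far more than two patterns.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Deliberately simplistic, hypothetical patterns for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class Verdict:
    allowed: bool
    reason: str | None = None


def check_input(text: str, blocked_keywords: set[str]) -> Verdict:
    # Keyword filter: case-insensitive whole-word match (single-word keywords only).
    words = set(re.findall(r"\w+", text.lower()))
    hits = sorted(words & {k.lower() for k in blocked_keywords})
    if hits:
        return Verdict(False, f"blocked keyword(s): {', '.join(hits)}")
    # PII filter: block the request if any pattern matches.
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            return Verdict(False, f"possible PII detected: {label}")
    return Verdict(True)
```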

These guardrails can be set at the endpoint or request level to fit specific use cases and policies. All data is logged in Inference Tables, which can then be analyzed with Lakehouse Monitoring to track model safety over time.
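This post does not spell out how endpoint-level and request-level settings combine. The sketch below assumes one plausible rule, purely for illustration: a request may add restrictions on top of the endpoint defaults but can never remove them. The `GuardrailPolicy` type and `effective_policy` function are hypothetical and should not be read as the gateway's documented behavior.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GuardrailPolicy:
    # Hypothetical policy shape for illustration only.
    safety_filter: bool = False
    block_pii: bool = False
    blocked_keywords: frozenset[str] = frozenset()


def effective_policy(
    endpoint: GuardrailPolicy, request: GuardrailPolicy | None
) -> GuardrailPolicy:
    """Assumed rule: request settings can tighten, never loosen, endpoint settings."""
    if request is None:
        return endpoint
    return GuardrailPolicy(
        # OR keeps any filter the endpoint administrator switched on.
        safety_filter=endpoint.safety_filter or request.safety_filter,
        block_pii=endpoint.block_pii or request.block_pii,
        # Union so request-level keywords extend the endpoint blocklist.
        blocked_keywords=endpoint.blocked_keywords | request.blocked_keywords,
    )
```

Using OR and set union makes the combination monotonic: whatever the caller sends, the result is at least as strict as the endpoint policy. Check the gateway documentation for the actual precedence rules before relying on this.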

“Guardrails helps us prevent unsafe content from reaching our end user. With payload logging, we can also trace guardrails to track performance of the application.” — Ryan Jockers, Assistant Director at North Dakota University System

Connect AI with Your Data Effortlessly

Mosaic AI Gateway is built on the Databricks Data Intelligence Platform, enabling enterprises to easily connect LLMs to their data using techniques like RAG, agent workflows, and fine-tuning, turning general intelligence into actionable data intelligence. This is already happening: many customers use Mosaic AI Vector Search with external models to create embeddings, or use agent frameworks and evaluation tools to build agents with external models.

"Databricks Model Serving is accelerating our AI-driven projects by making it easy to securely access and manage multiple SaaS and open models, including those hosted on or outside Databricks. Its centralized approach simplifies security and cost management, allowing our data teams to focus more on innovation and less on administrative overhead." — Greg Rokita, AVP, Technology at Edmunds.com

Getting Started with Mosaic AI Gateway

Mosaic AI Gateway’s new monitoring and guardrail features are now available in all model serving workspaces. You can enable Mosaic AI Gateway on new and existing external model endpoints with just a few clicks. Support for additional endpoint types is coming soon.
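For teams that prefer configuration as code over clicks, the sketch below builds a JSON body that enables usage tracking, an inference table, a per-user rate limit, and input guardrails in one place. The field names reflect our understanding of the serving-endpoints AI Gateway REST payload at the time of writing; treat them as assumptions and verify them against the current documentation. The catalog, schema, and prefix values are placeholders, and the sketch only builds and serializes the body; it does not send it.

```python
from __future__ import annotations

import json


def build_ai_gateway_config(
    catalog: str,
    schema: str,
    table_prefix: str,
    calls_per_minute: int = 100,
    invalid_keywords: list[str] | None = None,
) -> dict:
    """Build an AI Gateway configuration body.

    Field names are assumptions based on the serving-endpoints AI Gateway
    REST payload; verify them against the documentation before use.
    """
    return {
        "usage_tracking_config": {"enabled": True},
        "inference_table_config": {
            "enabled": True,
            "catalog_name": catalog,
            "schema_name": schema,
            "table_name_prefix": table_prefix,
        },
        "rate_limits": [
            {"calls": calls_per_minute, "key": "user", "renewal_period": "minute"}
        ],
        "guardrails": {
            "input": {
                "safety": True,
                "pii": {"behavior": "BLOCK"},
                "invalid_keywords": list(invalid_keywords or []),
            }
        },
    }


if __name__ == "__main__":
    # Placeholder names; sending the body (with authentication) is out of scope here.
    body = build_ai_gateway_config("main", "genai", "chat_endpoint")
    print(json.dumps(body, indent=2))
```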

- Attend the next Databricks GenAI Webinar on October 8, 2024: The Shift to Data Intelligence.

- Watch the Mosaic AI Gateway Demo.

- Check out the Mosaic AI Gateway Notebook Demo.

- Dive deeper into the Mosaic AI Gateway Documentation (AWS | Azure)

Want to see it in action?

Try the Mosaic AI Gateway Product Tour to see how it helps accelerate AI projects with simplicity, security, and governance.
