Simplified Analytics Engineering with Databricks and dbt Labs

For over a year now, Databricks and dbt Labs have been working together to realize the vision of simplified real-time analytics engineering, combining dbt's highly popular analytics engineering framework with the Databricks Lakehouse Platform, the best place to build and run data pipelines. Together, Databricks and dbt Labs enable data teams to collaborate on the lakehouse, simplifying analytics and turning raw data into insights efficiently and cost-effectively. Many of our customers, such as Condé Nast, Chick-fil-A, and Zurich Insurance, are building solutions with Databricks and dbt Cloud.

The collaboration between Databricks and dbt Labs brings together two industry leaders with complementary strengths. Databricks, the data and AI company, provides a unified environment that seamlessly integrates data engineering, data science, and analytics. dbt Labs helps data practitioners work more like software engineers to produce trusted datasets for reporting, ML modeling, and operational workflows, using SQL and Python. dbt Labs calls this practice analytics engineering.

What's difficult about analytics today?

Organizations seeking streamlined analytics frequently encounter three significant obstacles:

- Data Silos Hinder Collaboration: Within organizations, multiple teams operate with different approaches to working with data, resulting in fragmented processes and functional silos. This lack of cohesion leads to inefficiencies, making it difficult for data engineers, analysts, and scientists to collaborate effectively and deliver end-to-end data solutions.

- High Complexity and Costs for Data Transformations: To achieve analytics excellence, organizations often rely on separate ingestion pipelines or decoupled integration tools. Unfortunately, this approach introduces unnecessary costs and complexity. Manually refreshing pipelines when new data arrives or when changes are made is time-consuming and resource-intensive. Incremental changes often require a full recompute, leading to excessive cloud consumption and higher expenses.

- Lack of End-to-End Lineage and Access Control: Complex data projects bring numerous dependencies and challenges. Without proper governance, organizations face the risk of using incorrect data or inadvertently breaking critical pipelines during changes. The absence of complete visibility into model dependencies creates a barrier to understanding data lineage, compromising data integrity and reliability.

Together, Databricks and dbt Labs seek to solve these problems. Databricks' simple, unified lakehouse platform provides the optimal environment for running dbt, a widely used data transformation framework. dbt Cloud is the fastest and easiest way to deploy dbt, empowering data teams to build scalable and maintainable data transformation pipelines.

Databricks and dbt Cloud together are a game changer

Databricks and dbt Cloud enable data teams to collaborate on the lakehouse. By simplifying analytics on the lakehouse, data practitioners can effectively turn raw data into insights in the most efficient, cost-effective way. Together, Databricks and dbt Cloud help users break down data silos to collaborate effectively, simplify ingestion and transformation to lower TCO, and unify governance for all their real-time and historical data.

Collaborate on data effectively across your organization

The Databricks Lakehouse Platform is a single, integrated platform for all data, analytics and AI workloads. With support for multiple languages, CI/CD and testing, and unified orchestration across the lakehouse, dbt Cloud on Databricks is the best place for all data practitioners (data engineers, data scientists, analysts, and analytics engineers) to work together easily, building data pipelines and delivering solutions with the languages, frameworks and tools they already know.

Simplify ingestion and transformation to lower TCO

Build and run pipelines automatically using the most effective set of resources. Simplify ingestion and automate incrementalization within dbt models to increase development agility and eliminate waste, so you pay only for what's required.

We also recently announced two new capabilities for analytics engineering on Databricks that simplify ingestion and transformation for dbt users to lower TCO: Streaming Tables and Materialized Views.

1. Streaming Tables

Data ingestion from cloud storage and message queues in dbt projects

Previously, to ingest data from cloud storage (e.g. AWS S3) or message queues (e.g. Apache Kafka), dbt users would have to first set up a separate pipeline, or use a third-party data integration tool, before having access to that data in dbt.

Databricks Streaming Tables enable continuous, scalable ingestion from any data source including cloud storage, message buses and more.

And now, with dbt Cloud + Streaming Tables on the Databricks Lakehouse Platform, ingestion from these sources is built into dbt projects.
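To make continuous, incremental ingestion more concrete, here is a minimal conceptual sketch in Python. It is not the Streaming Tables API: the `IncrementalFileIngestor` class and the in-memory `storage` dictionary standing in for a cloud storage location are hypothetical. It models only the core bookkeeping behind streaming ingestion, which is remembering which source files have already been loaded so that each run appends only new data.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalFileIngestor:
    """Hypothetical, in-memory model of checkpointed file ingestion.

    Not the Databricks Streaming Tables API: it only illustrates tracking
    which source files were already loaded so each run appends new ones.
    """

    # Checkpoint: names of files that have already been ingested.
    processed: set[str] = field(default_factory=set)
    # Target table: rows appended across all runs.
    table: list[dict] = field(default_factory=list)

    def run(self, storage: dict[str, list[dict]]) -> int:
        """Ingest files not yet in the checkpoint; return rows appended."""
        new_files = sorted(name for name in storage if name not in self.processed)
        appended = 0
        for name in new_files:
            rows = storage[name]
            self.table.extend(rows)
            appended += len(rows)
            self.processed.add(name)  # record progress only after loading
        return appended


# Usage: a hypothetical bucket that receives a new file between runs.
storage = {"events/2023-06-01.json": [{"id": 1}, {"id": 2}]}
ingestor = IncrementalFileIngestor()
print(ingestor.run(storage))  # 2
storage["events/2023-06-02.json"] = [{"id": 3}]
print(ingestor.run(storage))  # 1: only the new file is read
print(ingestor.run(storage))  # 0: nothing new, no duplicate rows
```

With Streaming Tables this state is managed by the platform rather than by user code; the sketch only shows why rerunning an ingestion step does not reload data it has already seen.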

2. Materialized Views

Automatic incrementalization for dbt models

Previously, to make a dbt pipeline refresh in an efficient, incremental manner, analytics engineers had to define incremental models and manually craft specific incremental strategies for various workload types (e.g., handling partitions, joins, and aggregations).

With dbt + Materialized Views on the Databricks Lakehouse Platform, it is much easier to build efficient pipelines without complex user input. By building on Databricks' incremental refresh capabilities, dbt pipelines that use Materialized Views run faster and are simpler to maintain, so data teams get to insights sooner. Users can build and run pipelines backed by Materialized Views and reduce infrastructure costs through efficient, incremental computation.

Although materialized views are not a new concept, the dbt Cloud/Databricks integration is significant because both batch and streaming pipelines are now accessible in one place to the entire data team, combining the streaming capabilities of the Delta Live Tables (DLT) infrastructure with the accessibility of the dbt framework. As a result, data practitioners working in dbt Cloud on the Databricks Lakehouse Platform can simply use SQL to define a dataset that is automatically and incrementally kept up to date. Materialized Views are a game changer for the user experience, refreshing dbt models automatically and incrementally to save time and costs.

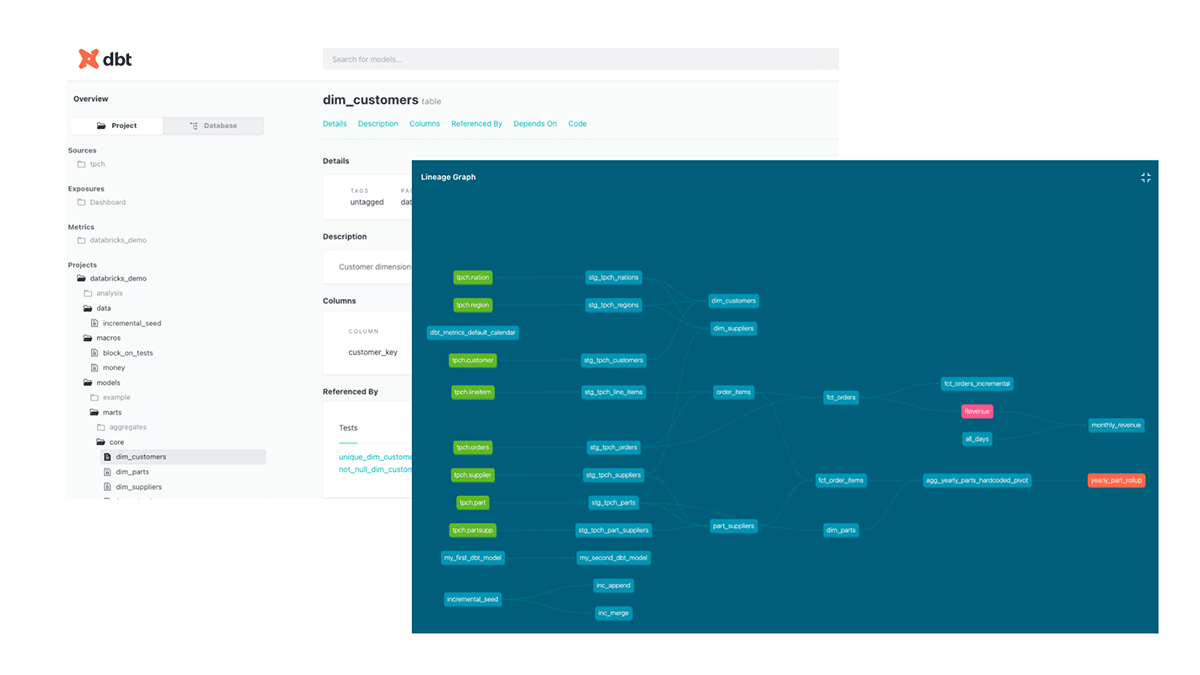
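The difference between a full recompute and an incremental refresh can be illustrated with a small Python sketch. It is a conceptual model only, not how Databricks implements Materialized Views: the `IncrementalSumView` class is hypothetical, and it assumes an append-only source and a simple aggregate equivalent to `SELECT region, SUM(amount) ... GROUP BY region`. Real incremental refresh must also handle updates, deletes, and joins, which this sketch ignores.

```python
from collections import defaultdict


def full_recompute(rows):
    """Recompute SUM(amount) GROUP BY region over every row (the costly path)."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


class IncrementalSumView:
    """Hypothetical incrementally maintained aggregate.

    Assumes an append-only source: each refresh folds in only the rows added
    since the previous refresh instead of rescanning the whole table.
    """

    def __init__(self):
        self.totals = defaultdict(int)
        self.rows_seen = 0  # watermark: number of source rows already applied

    def refresh(self, rows):
        for row in rows[self.rows_seen:]:  # only the new rows
            self.totals[row["region"]] += row["amount"]
        self.rows_seen = len(rows)
        return dict(self.totals)


# Usage: the incremental result matches a full recompute after new data lands.
source = [{"region": "EMEA", "amount": 10}, {"region": "APAC", "amount": 5}]
view = IncrementalSumView()
view.refresh(source)
source.append({"region": "EMEA", "amount": 3})
print(view.refresh(source) == full_recompute(source))  # True
```

The second refresh touches one row instead of three; on a large table, that gap is where the savings in runtime and compute come from.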

Unify governance for all your real-time and historical data with dbt and Unity Catalog

From data ingestion to transformation, with complete visibility into upstream and downstream object dependencies, dbt and Databricks Unity Catalog provide the complete data lineage and governance organizations need to have confidence in their data. Understanding dependencies becomes effortless, mitigating risks and forming a solid foundation for effective decision-making.
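Lineage is, at its core, a dependency graph. The following Python sketch shows how upstream and downstream dependencies can be derived from such a graph. It does not use the Unity Catalog or dbt APIs; the model names and the `DEPENDS_ON` mapping are hypothetical, standing in for the edges dbt records from `ref()` and `source()` calls.

```python
from collections import deque

# Hypothetical project: each model maps to the models or sources it selects from.
DEPENDS_ON = {
    "stg_orders": ["raw_orders"],
    "stg_customers": ["raw_customers"],
    "customer_revenue": ["stg_orders", "stg_customers"],
    "revenue_dashboard": ["customer_revenue"],
}


def _walk(start, edges):
    """Breadth-first search returning every node reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def upstream(model):
    """Everything `model` depends on, directly or transitively."""
    return _walk(model, DEPENDS_ON)


def downstream(model):
    """Every model that could break if `model` changed."""
    reverse = {}
    for child, parents in DEPENDS_ON.items():
        for parent in parents:
            reverse.setdefault(parent, []).append(child)
    return _walk(model, reverse)


print(sorted(upstream("customer_revenue")))
# ['raw_customers', 'raw_orders', 'stg_customers', 'stg_orders']
print(sorted(downstream("stg_orders")))
# ['customer_revenue', 'revenue_dashboard']
```

Checking `downstream` before changing a model is the kind of impact analysis that end-to-end lineage makes routine.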

Transforming the Insurance Industry to Create Business Value with Advanced Analytics and AI

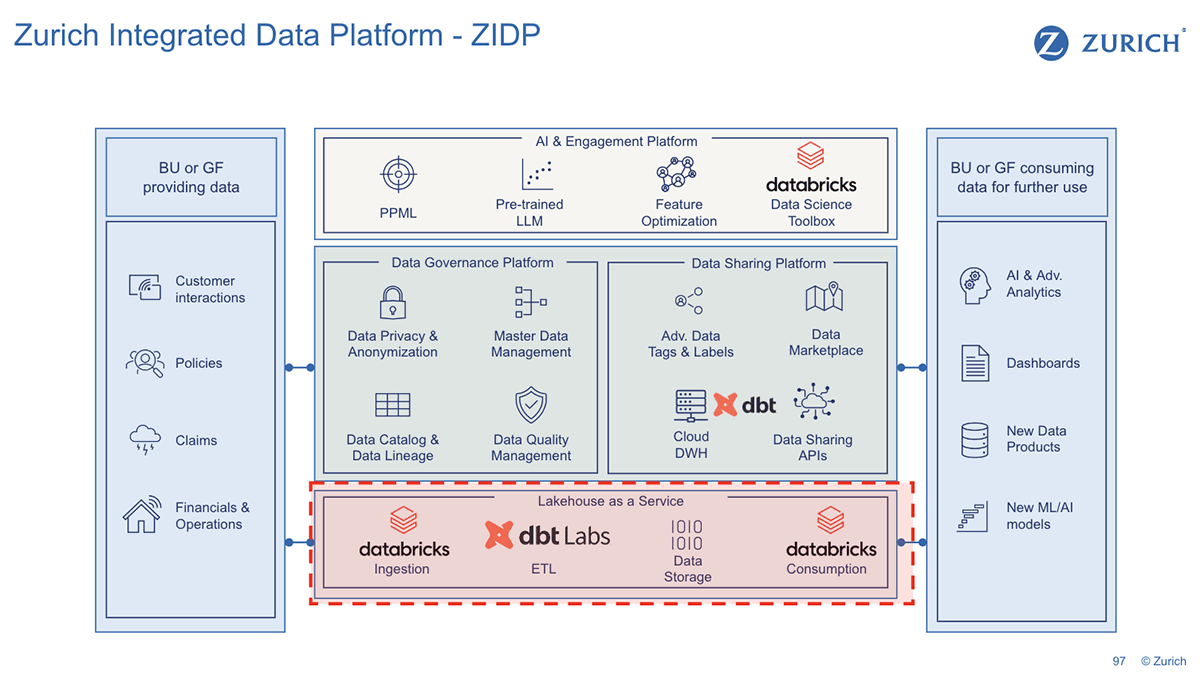

Zurich Insurance is changing the way the insurance industry leverages data and AI. Shifting focus from traditional internal use cases to the needs of its customers and distribution partners, Zurich has built a commercial analytics platform that offers insights and recommendations on underwriting, claims and risk engineering to strengthen customers' business operations and improve servicing for key stakeholder groups.

The Databricks Lakehouse Platform and dbt Cloud are the foundation of Zurich's Integrated Data Platform for advanced analytics and AI, data governance and data sharing. Together, Databricks and dbt Cloud form the ETL layer (Lakehouse as a Service): data from different geographies, organizations, and departments lands in a multi-cloud lakehouse implementation and is then transformed with dbt Cloud from its raw format into Silver (analytics-ready) and Gold (business-ready) data. "Zurich's data consumers are now able to deliver data science and AI use cases including pre-trained LLM models, scoring and recommendations for its global teams," said Jose Luis Sanchez Ros, Head of Data Solution Architecture, Zurich Insurance Company Ltd. "Unity Catalog simplifies access management and provides collaborative data exploration with a company-wide view of data that is shared across the organization without any replication."

Hear from Zurich Insurance at Data + AI Summit in the session "Modernizing the Data Stack: Lessons Learned From the Evolution at Zurich Insurance."

Get started with Databricks and dbt Labs

No matter where your data teams like to work, dbt Cloud on the Databricks Lakehouse Platform is a strong foundation to start from. Together, dbt Labs and Databricks help your data teams collaborate effectively, run simpler and cheaper data pipelines, and unify data governance.

Talk to your Databricks or dbt Labs representative about building best-in-class analytics pipelines with Databricks and dbt, or get started today with Databricks and dbt Cloud. Sign up for The Case for Moving to the Lakehouse virtual event for a deep dive with the Databricks and dbt Labs co-founders, and see it all in action in a dbt Cloud on Databricks product demo.
