Solution Accelerator: Building Digital Twins for Operational Efficiency With Databricks

Check out our Solution Accelerator for Digital Twins for more details and to download the notebooks.

As real-time, virtual representations of real-world physical systems or processes, digital twins have begun to play a critical role in manufacturing, supporting quality management, supply chain management, predictive maintenance, and customer experience.

Manufacturers are responding to market dynamics that require them to bring products to market faster, optimize production processes, and build agile supply chains at scale and at lower cost, all while ensuring the highest return on invested capital (ROIC). Digital twins play a big role here by enabling process optimization modeling, risk assessments, condition monitoring, and optimized design.

What is a digital twin?

A digital twin is a virtual representation of an object, product, piece of equipment, person, process, or even a complete manufacturing ecosystem. It is created using data derived from sensors (often IoT or IIoT) that are attached to or embedded in the original object. This data provides both structural and operational views of what happens to the object in real time, so engineers can monitor systems and model system dynamics. Adjustments can be made to the digital twin to assess the impact of real-world changes before any changes are made to the original system.

For a discrete or continuous manufacturing process, a digital twin gathers process and system data with the help of various IoT sensors (both IT and OT) to form a virtual model which is then used to run simulations, study performance issues, and generate new insights.
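As a minimal illustration of the idea, the sketch below models a twin that mirrors sensor readings and lets you try a change on a copy before touching the real asset. The `PumpTwin` class, its field names, and the linear flow model are all hypothetical and chosen only for this example; a real twin would rely on a far richer physical or data-driven model.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class PumpTwin:
    """Hypothetical twin of a pump: mirrors sensor state and holds a toy model."""
    rated_flow_lpm: float                 # assumed design flow at 100% speed
    speed_pct: float = 100.0              # operating speed mirrored from the asset
    temperatures_c: list = field(default_factory=list)

    def ingest(self, reading: dict) -> None:
        """Update the twin from one sensor reading (keys are assumptions)."""
        self.speed_pct = reading.get("speed_pct", self.speed_pct)
        if "temp_c" in reading:
            self.temperatures_c.append(reading["temp_c"])

    def expected_flow_lpm(self) -> float:
        """Toy model: flow scales linearly with speed."""
        return self.rated_flow_lpm * self.speed_pct / 100.0

    def what_if(self, **changes) -> "PumpTwin":
        """Return a modified deep copy so the live twin stays untouched."""
        scenario = copy.deepcopy(self)
        for name, value in changes.items():
            setattr(scenario, name, value)
        return scenario


twin = PumpTwin(rated_flow_lpm=500.0)
twin.ingest({"speed_pct": 80.0, "temp_c": 61.5})

# Evaluate a speed increase on a copy before applying it to the real pump.
scenario = twin.what_if(speed_pct=90.0)
print(twin.expected_flow_lpm(), scenario.expected_flow_lpm())
```

The `what_if` copy keeps the simulated change separate from the state that mirrors the physical asset, which is the property that lets engineers assess a change before applying it.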

Digital twins are addressing several common challenges in the manufacturing industry including:

- Increased complexity in product designs, resulting in higher costs and longer development times

- Opaque supply chains

- Lack of optimization in production lines: performance variations, unknown defects, and unclear projections of operating cost

- Poor quality management: over-reliance on theory, with quality managed separately by individual departments

- Excessive downtime or process disruptions, along with the cost of reactive maintenance across departments

- Inability to collect real-time feedback, which makes predicting customer demand very challenging

How Databricks helps to deliver Digital Twins

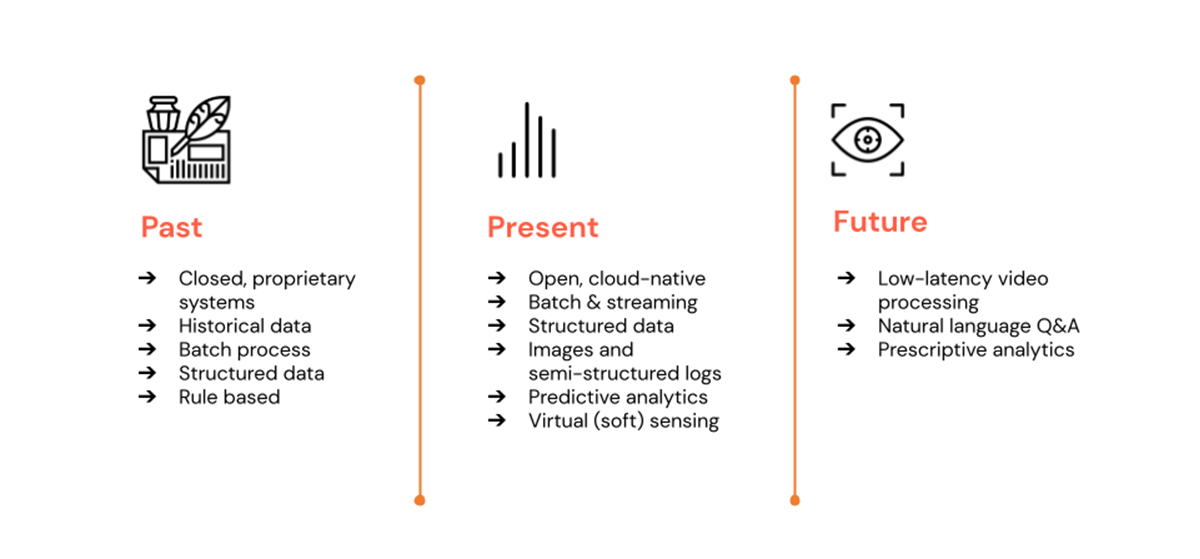

In the past, software suites for digital twins were prohibitive because of both high license costs and limited integration with companies' wider application and analytics landscapes. Proprietary frameworks and CapEx-intensive licensing models hindered not only application development but also the democratization of data and insights to other teams or personas in the organization.

Today, consumption-based cloud services have significantly lowered the barrier to entry. Additionally, the convergence of open standards and interfaces (e.g. Apache Kafka, REST APIs, Python/SQL) has drastically eased integration and reusability.

Nevertheless, digital twins are distinguished from traditional simulation solutions by their fundamental ability to consume real-world data and generate insights continuously. Data required by a digital twin can come from numerous sources (e.g. sensor data, object specifications, production processes, etc.) at high volumes and in different formats. Here, organizations still often struggle with tradeoffs between data freshness, cost performance, and the flexibility to express complex business logic.

The Databricks Lakehouse Platform directly addresses the above challenges:

- Near real-time processing of IoT sensor data with Spark Structured Streaming:

  - Scalable, fault-tolerant stream processing engine supporting SQL, Python, Scala, and Java

  - Complex Event Processing - including stateful operations (often important for modeling physical processes)

  - Support for arbitrary REST APIs, SDKs, and libraries (e.g. for machine learning inference)

  - Project Lightspeed doubles down even further on performance and expands Python capabilities for streaming workloads

- Unified analytics & ML lifecycle management (MLOps)

- Data governance, sharing, and lineage via Unity Catalog

- Single source of truth for your analytical data, with data versioning and reliable data management
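To make "stateful operations" concrete, here is a plain-Python sketch of per-device state carried across events, in this case a rolling mean over each device's most recent readings. It deliberately does not use the Structured Streaming API; the function name, the event shape, and the window size are assumptions made for illustration. In a streaming engine, state like this would be managed by the engine itself, with fault tolerance and scaling handled for you.

```python
from collections import defaultdict, deque


def rolling_mean_by_device(events, window=3):
    """Yield (device_id, rolling_mean) per event, keeping state per device.

    `events` is any iterable of (device_id, value) pairs (assumed shape).
    """
    # One bounded buffer per device; old readings drop out automatically.
    state = defaultdict(lambda: deque(maxlen=window))
    for device_id, value in events:
        buffer = state[device_id]
        buffer.append(value)
        yield device_id, sum(buffer) / len(buffer)


events = [("a", 1.0), ("b", 10.0), ("a", 3.0), ("a", 5.0), ("a", 7.0)]
results = list(rolling_mean_by_device(events))
print(results)
```

Each output depends on earlier events for the same device, which is what separates a stateful operation from a simple per-record transformation.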

Architecture

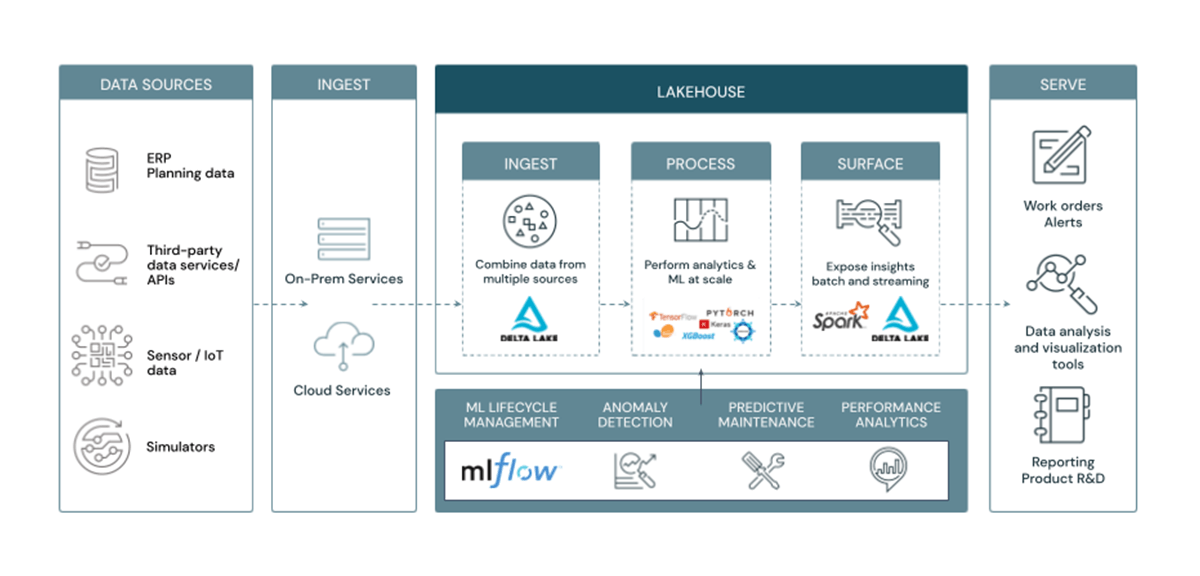

A modern, end-to-end digital twin often requires the following four capabilities, as depicted in the accompanying figure:

- Twin visualization and exploration via 3D models, ontologies, knowledge graphs, etc.

- Timely processing of real-world data

- Stateful operations and scalable state management

- Flexible (programmatic) support for custom logic and libraries

Services such as Azure Digital Twins or AWS IoT TwinMaker predominantly solve for the first capability of defining, exploring, and visualizing your digital twins. Explore the examples provided by Azure and AWS here.

Databricks cohesively tackles the other three capabilities, ensuring that data volumes and velocities are appropriately handled as well as enabling meaningful, non-trivial insights to be computed and delivered to multiple downstream applications.

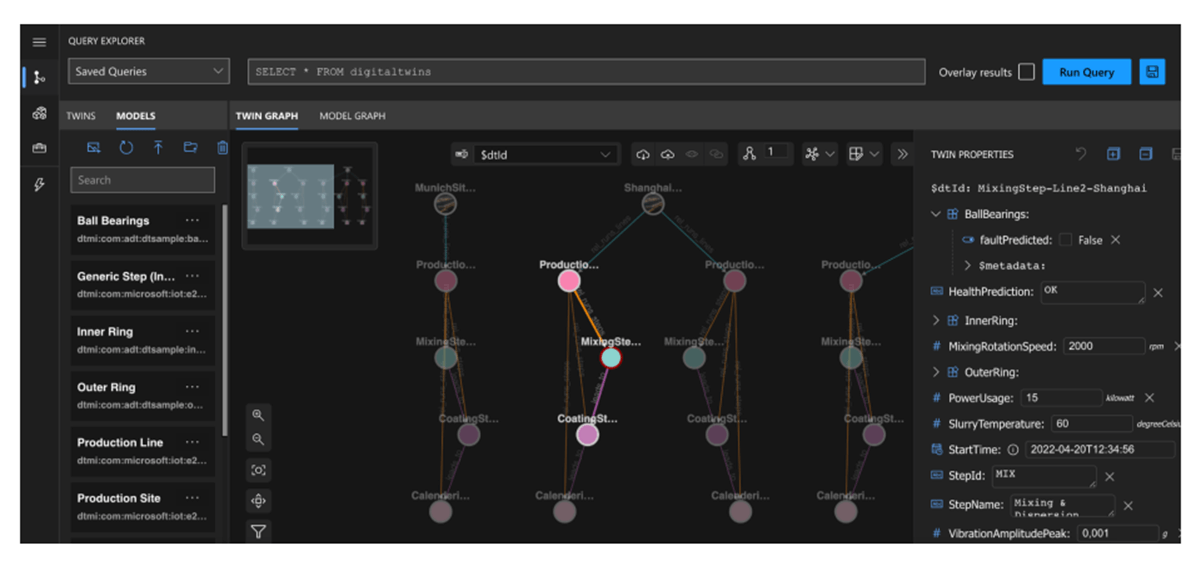

In this new Solution Accelerator (with notebooks included), we demonstrate a predictive maintenance use case for digital twins. Any data scientist can quickly get started with Databricks AutoML to train a model for diagnosing faults while following MLOps best practices for data versioning, experiment tracking, and model management. Once validated, the same model can be immediately deployed into a streaming pipeline, without the need for complex handovers or re-implementation processes. Finally, any predictions or insights produced can be continuously delivered to twin services for monitoring and exploration.
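The "train once, reuse in the stream" pattern can be sketched in plain Python as follows. This is not the accelerator's code and does not use AutoML or MLflow: the z-score detector is a deliberately simple stand-in for a trained fault model, and `ZScoreFaultModel`, `score_stream`, and the `vibration` field are hypothetical names introduced for the example.

```python
from statistics import mean, stdev


class ZScoreFaultModel:
    """Stand-in for a trained fault model: flags values far from the healthy mean."""

    def fit(self, healthy_values):
        self.mu = mean(healthy_values)
        self.sigma = stdev(healthy_values)
        return self

    def predict(self, value, threshold=3.0):
        # A reading is a suspected fault if it lies more than `threshold`
        # standard deviations away from the healthy mean.
        return abs(value - self.mu) / self.sigma > threshold


def score_stream(model, readings):
    """Apply the already-fitted model to each incoming reading, unchanged."""
    for reading in readings:
        yield {**reading, "fault": model.predict(reading["vibration"])}


# "Batch" step: fit on historical readings from healthy operation.
model = ZScoreFaultModel().fit([1.0, 1.1, 0.9, 1.0, 1.05, 0.95])

# "Streaming" step: the same model object scores new readings as they arrive.
incoming = [
    {"device": "pump-1", "vibration": 1.02},
    {"device": "pump-1", "vibration": 1.6},
]
scored = list(score_stream(model, incoming))
print(scored)
```

Because the streaming step calls the same fitted object, there is no separate re-implementation of the model logic, which is the handover the paragraph above describes avoiding.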

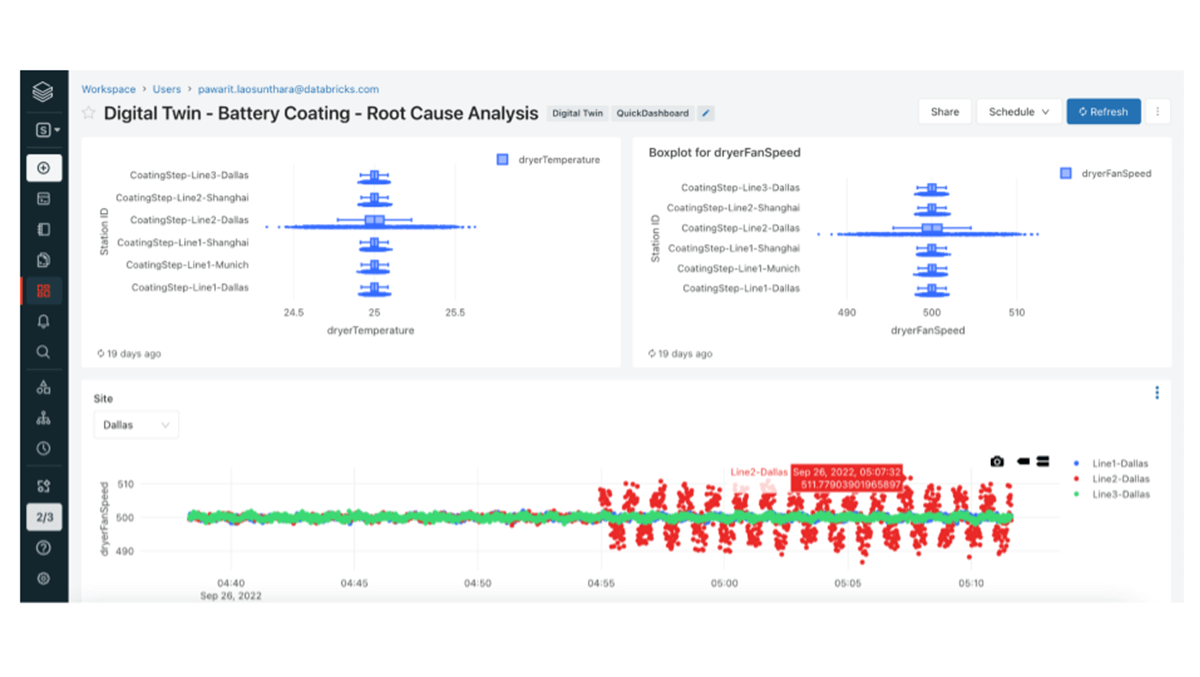

While advanced use cases such as predictive maintenance and anomaly detection are increasingly popular, more straightforward tasks such as ad-hoc data exploration, root cause analysis, and alerting are also readily accessible for low-code personas thanks to Databricks SQL's customizable dashboards and alerts.

Try our Digital Twins Solution Accelerator

Interested in seeing how this works? Check out the Databricks Solution Accelerator for Digital Twins in manufacturing. By following its step-by-step instructions, users can learn how building blocks from Databricks and Azure Digital Twins can be assembled to enable performant and scalable end-to-end digital twins.
