Solution Accelerator: Scalable Route Generation With Databricks and OSRM

Published: September 2, 2022

by Rohit Nijhawan, Bryan Smith and Timo Roest

Check out our new Scalable Route Generation Solution Accelerator for more details and to download the notebooks.

Delivery has become an integral part of the modern retail experience. Customers increasingly expect access to goods delivered to their front doors within narrowing windows of time. Organizations that can meet this demand are attracting and retaining customers, forcing more and more retailers into the delivery game.

But even the largest retailers struggle with the cost of providing last-mile services. Amazon has played a large part in driving the expansion of rapid home delivery, but even it has had difficulty recouping the costs. For smaller retailers, delivery is often provided through partner networks, which have had their own profitability challenges given customers' unwillingness to pay for delivery, especially for fast-turnaround items such as food and groceries.

While many conversations about the future of delivery focus on autonomous vehicles, in the short term retailers and partner providers are spending considerable effort on improving the efficiency of driver-enabled solutions to bring down costs and restore margin to delivery orders. Easy solutions remain elusive, given constraints such as order-specific pickup and narrow delivery windows, differences in item perishability (which limit how long some items may sit in a delivery vehicle), and the variable but generally rising cost of fuel and labor.

Accurate Route Information Is Critical

Software options intended to help solve these challenges depend on quality information about travel times between pickup and delivery points. Simple approaches, such as calculating straight-line distances and applying general rates of traversal, may be appropriate for hypothetical examinations of routing problems. Real-world evaluations of new ways to move drivers through a complex network of roads and pathways, however, require far more sophisticated time and distance estimates.

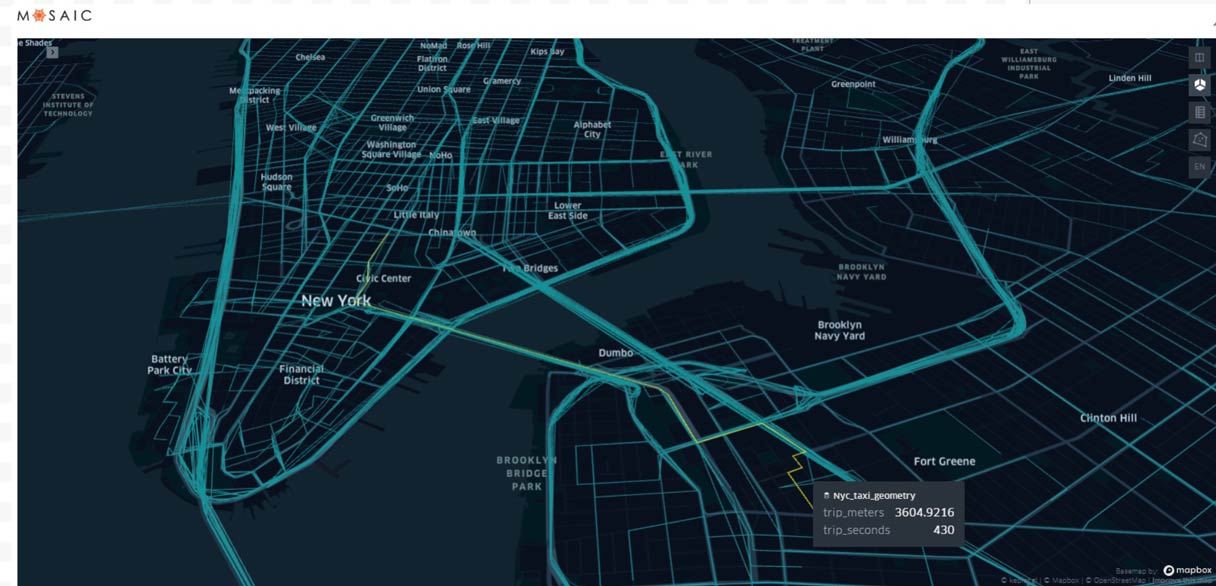

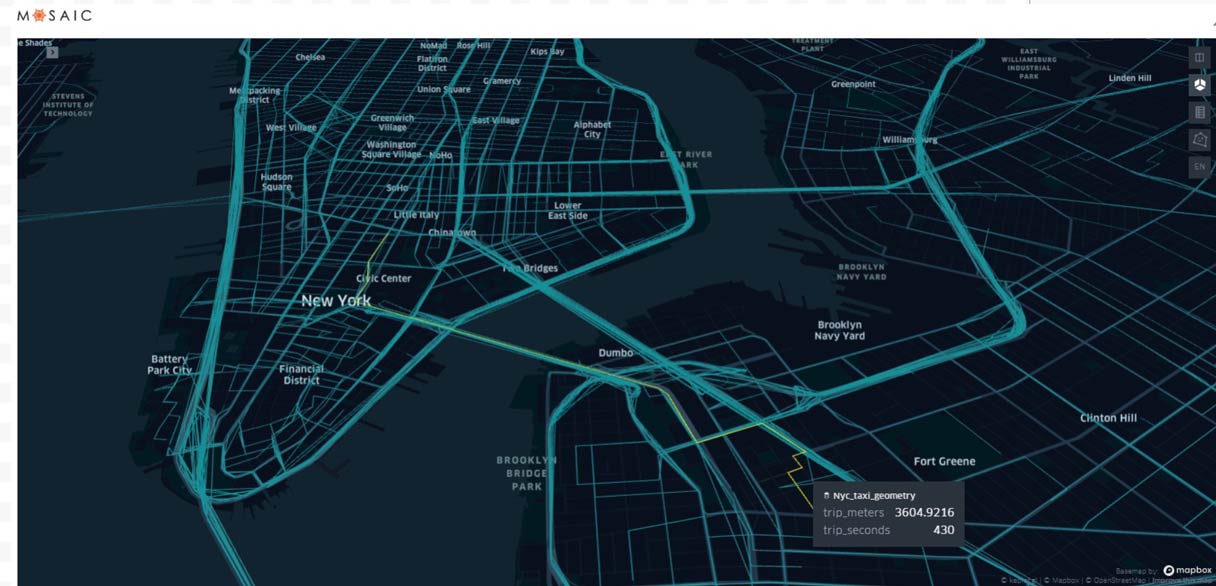
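For contrast, the straight-line approach described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a haversine great-circle distance and a hypothetical flat average speed of 30 km/h; the function names are ours and are not part of the accelerator.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle ("straight-line") distance between two points, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def naive_travel_minutes(lat1, lon1, lat2, lon2, avg_speed_kmh=30.0):
    """Straight-line distance divided by a flat, assumed average speed (hypothetical)."""
    return haversine_km(lat1, lon1, lat2, lon2) / avg_speed_kmh * 60.0
```

Because this estimate ignores the actual road network, one-way streets and differences in speed between road types, it can diverge considerably from real driving times. That gap is what a routing engine is meant to close.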

For this reason, more and more organizations are adopting routing software in both their operational and analytics infrastructures. One of the more popular options is Project OSRM (Open Source Routing Machine), an open source platform that calculates routes using open source map data provided by the OpenStreetMap project. It gives organizations a fast, low-cost way to deploy a routing API within their environment for use by a wide range of applications.
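As a rough illustration of how an application might consume such an API, the sketch below builds a request for the OSRM route service and extracts the distance (meters) and duration (seconds) of the first returned route. It assumes an OSRM server listening at a hypothetical `http://localhost:5000` with a `driving` profile, and the helper names are illustrative only. Note that OSRM expects coordinates in longitude,latitude order.

```python
import json
from urllib.request import urlopen


def build_route_url(base_url, points, profile="driving"):
    """Build an OSRM route-service URL from (lat, lon) tuples.

    OSRM expects "lon,lat" pairs separated by semicolons.
    """
    coords = ";".join(f"{lon},{lat}" for lat, lon in points)
    return f"{base_url.rstrip('/')}/route/v1/{profile}/{coords}?overview=false"


def parse_route(response):
    """Return (distance_m, duration_s) for the first route in an OSRM response."""
    if response.get("code") != "Ok":
        raise ValueError(f"OSRM error: {response.get('code')}")
    route = response["routes"][0]
    return route["distance"], route["duration"]


def get_route(base_url, points):
    """Call a running OSRM server (hypothetical address) and parse the reply."""
    with urlopen(build_route_url(base_url, points)) as resp:
        return parse_route(json.load(resp))
```

Keeping URL construction and response parsing separate from the network call makes both pieces easy to test without a live server.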

Scalability Needed for Route Analytics

Typically deployed as a containerized service, the OSRM server is often employed as a shared resource within an IT infrastructure. This works fine for most application integration scenarios. But when teams of data scientists push large volumes of simulated and historical order data to such a server for route generation, it can quickly become overwhelmed. As a result, we are hearing a growing number of complaints from data scientists about bottlenecks in their route optimization and analysis efforts, and from application teams frustrated by applications taken offline by hard-to-predict analytics workloads.

To address this problem, we demonstrate how the OSRM server can be deployed within a Databricks cluster. Databricks, a unified platform for machine learning, data analysis and data processing, provides scalable and elastic access to cloud computing resources. Equally important, it supports complex data structures, such as those generated by the OSRM software, as well as geospatial analysis through a variety of native capabilities and open source libraries. By deploying the OSRM server into a Databricks cluster, data science teams can access the routing capacity they need without interfering with other workloads in their environment.

The key to such a solution is how Databricks leverages the combined computational capacity of multiple servers within a cluster. When the OSRM server software is deployed to these servers, the routing capacity of the environment grows with the cluster. The cluster can be spun up and shut down on demand, helping the organization avoid wasting budget on idle resources. Once configured, the OSRM route generation capabilities are presented as easy-to-consume functions that fit naturally into the data processing and analysis work data science teams already perform.
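To suggest how routing might be wrapped as a function that runs alongside each worker's OSRM instance, here is a minimal sketch of a partition-level routine. It assumes (our assumption, not a detail stated here) that every worker node hosts an OSRM server at `http://localhost:5000`, so each partition queries its own local instance and total throughput scales with the number of workers. The `route_partition` and `local_osrm_fetch` names and the `(order_id, origin, destination)` row layout are hypothetical.

```python
import json
from typing import Callable, Dict, Iterable, Iterator, Tuple
from urllib.request import urlopen

Point = Tuple[float, float]  # (lat, lon)


def local_osrm_fetch(origin: Point, dest: Point,
                     base_url: str = "http://localhost:5000") -> Dict:
    """Query the OSRM server assumed to be running on this worker node."""
    coords = f"{origin[1]},{origin[0]};{dest[1]},{dest[0]}"  # OSRM wants lon,lat
    with urlopen(f"{base_url}/route/v1/driving/{coords}?overview=false") as resp:
        return json.load(resp)


def route_partition(rows: Iterable[Tuple[str, Point, Point]],
                    fetch: Callable[[Point, Point], Dict] = local_osrm_fetch
                    ) -> Iterator[Tuple[str, float, float]]:
    """Route each (order_id, origin, destination) row in one partition.

    Yields (order_id, distance_m, duration_s); failed lookups yield NaN values.
    """
    for order_id, origin, dest in rows:
        result = fetch(origin, dest)
        if result.get("code") == "Ok" and result.get("routes"):
            route = result["routes"][0]
            yield order_id, route["distance"], route["duration"]
        else:
            yield order_id, float("nan"), float("nan")
```

In PySpark, a function like this could be passed to `RDD.mapPartitions` over an RDD of such tuples. The injectable `fetch` argument also makes the routine easy to test without a running server.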

By deploying the OSRM server in the Databricks cluster, data science teams can evaluate new algorithms against large volumes of data without capacity constraints or fear of interfering with other workloads. Faster evaluation cycles mean these teams can iterate on their algorithms more rapidly, fine-tuning their work to drive innovation. We may not yet know the perfect way to get drivers from stores to customers' homes in the most cost-effective manner, but we can eliminate one more impediment to finding it.

To examine more closely how the OSRM server can be deployed within a Databricks cluster, check out our Databricks Solution Accelerator for Scalable Route Generation.
