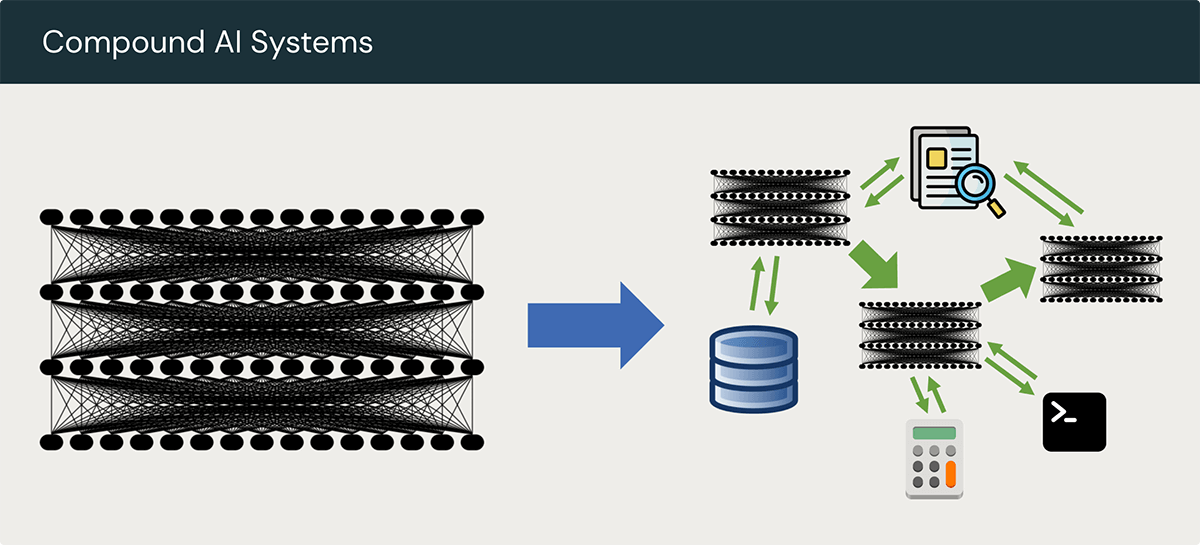

Compound AI Systems

What Are Compound AI Systems?

Compound AI systems, as defined by the Berkeley AI Research (BAIR) blog, are systems that tackle AI tasks by combining multiple interacting components. These components can include multiple calls to models, retrievers or external tools. Retrieval augmented generation (RAG) applications, for example, are compound AI systems, as they combine (at least) a model and a data retrieval system. Compound AI systems leverage the strengths of various AI models, tools and pipelines to enhance performance, versatility and reusability compared with using individual models alone.
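
To make the RAG example concrete, the following is a minimal sketch of a retriever and a model combined into one system. Everything in it is an assumption made for illustration: the toy `DOCUMENTS` corpus, the word-overlap `retrieve` function and the `generate` stub stand in for a real search index and a real model call.

```python
# Hypothetical toy corpus; a real system would query a search index or vector store.
DOCUMENTS = [
    "An experiment tracker records parameters and metrics for each run.",
    "Retrieval augmented generation combines a retriever with a language model.",
    "A code interpreter executes generated programs in a sandbox.",
]


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by the number of lowercase words shared with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def generate(prompt: str) -> str:
    """Stand-in for a model call (assumption): it only restates the supplied context."""
    context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    return f"Based on the retrieved context: {context}"


def answer(query: str) -> str:
    """The compound system: retrieve first, then hand the context to the model."""
    context = "\n".join(retrieve(query, DOCUMENTS))
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return generate(prompt)


if __name__ == "__main__":
    print(answer("what is retrieval augmented generation"))
```

Even in this toy form, the answer depends on two components that can be improved independently: the ranking in `retrieve` and the model behind `generate`.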

Increasingly, many new AI results are from compound systems (Source)

Developers are increasingly constructing compound AI systems to tackle their most challenging AI tasks. These systems can often outperform models alone, but as a community, we are still determining the best way to design these systems and the components that go into them.

Why build compound AI systems?

- Some tasks are easier to improve via system design: Using larger and more capable models can improve AI applications, but there is often a point of diminishing returns. Furthermore, improving or customizing a model via training or fine-tuning can be slow and costly. Integrating other models or tools into a compound system may be able to improve application quality beyond what can be achieved by a single model, no matter how capable.

- Systems can be dynamic: Individual models are fundamentally limited by their training data. They learn a certain set of information and behaviors and lack functionality such as the ability to search outside data sources or enforce access controls. A systems approach can add outside resources such as databases, code interpreters, permissions systems and more, making compound AI systems much more dynamic and flexible than individual models.

- Better control and trust: It can be challenging to get individual models to reliably return factual information or consistently formatted results. This may require careful prompting, multiple examples, fine-tuning or even workarounds, such as suggesting to an LLM that getting a good answer is a matter of life and death. Orchestrating LLMs with other tools and data sources can make AI systems more trustworthy and reliable by supplying them with accurate information from external sources or by using tools to better enforce output formatting constraints.

- More cost-quality options: Individual models generally offer a fixed level of quality at a fixed cost, but the cost-to-quality ratios available via single models are not suitable for all use cases. The ability to integrate LLMs with outside tools offers greater flexibility in the available cost-quality options. For example, a small and carefully tuned model combined with various search heuristics might provide good results at a lower cost than larger and more capable models. On the other hand, given a larger budget, bringing in outside tools and data sources can improve the performance of even the largest and most capable models.
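
As one illustration of the control and trust point above, a system can wrap a model call in ordinary code that checks the output format and retries when the check fails. The sketch below is not a prescribed method: the `REQUIRED_KEYS` schema, the retry prompt and the `make_flaky_model` stand-in are all hypothetical.

```python
import json
from typing import Callable, Optional

REQUIRED_KEYS = {"answer", "source"}  # hypothetical output schema


def validate(raw: str) -> Optional[dict]:
    """Return the parsed object if it is a JSON object with the required keys, else None."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if isinstance(parsed, dict) and REQUIRED_KEYS <= parsed.keys():
        return parsed
    return None


def call_with_validation(
    model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Call the model until its output passes validation or attempts run out."""
    for _ in range(max_attempts):
        result = validate(model(prompt))
        if result is not None:
            return result
        # Append a format reminder before the next attempt.
        prompt += "\nReturn only JSON with the keys: answer, source."
    raise ValueError(f"no valid output after {max_attempts} attempts")


def make_flaky_model() -> Callable[[str], str]:
    """Stand-in model (assumption): free text on the first call, valid JSON afterwards."""
    calls = {"count": 0}

    def model(prompt: str) -> str:
        calls["count"] += 1
        if calls["count"] == 1:
            return "Sure! The answer is 42."
        return json.dumps({"answer": "42", "source": "doc-7"})

    return model


if __name__ == "__main__":
    print(call_with_validation(make_flaky_model(), "What is the answer?"))
```

The validation lives outside the model, so the format guarantee holds regardless of which model is plugged in.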

How are effective compound AI systems built?

Compound AI systems are still an emerging application category, so the best practices guiding their development and use are still evolving. There are many different approaches to compound AI systems, each with different benefits and trade-offs. For example:

- Control logic: In a compound AI system, a code base defining control logic might call on a model to perform specific tasks under specific conditions, offering the reliability of programmatic control flow while still benefiting from the expressiveness of LLMs. Other systems might use an LLM for control flow, offering greater flexibility in interpreting and acting on inputs with the possible sacrifice of some reliability. Tools like Databricks External Models can help with control logic by simplifying the process of routing different parts of an application to different models.

- Where to invest time and resources: When developing compound AI systems, it is not always obvious whether to invest more time and resources in improving the performance of the model or models used in the system or in improving other aspects of the system. Fine-tuning a model or even switching to a more generally capable model might improve a compound AI system’s performance, but so might improving a data retrieval system or other components.

- Measuring and optimizing: Evaluation is important in any system involving AI models, but the approach to take is very application-specific. In some systems, a discrete metric assessing end-to-end performance might be suitable, while in others, it might make more sense to assess different components individually. For example, in a RAG application, it is often necessary to evaluate the retrieval and generation components separately. MLflow offers a flexible approach to evaluation that can accommodate many different aspects of compound AI systems, including retrieval and generation.
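
The idea of evaluating retrieval and generation separately can be sketched in plain Python. The metrics chosen here (recall at k for retrieval, exact match for generation) and the `EXAMPLES` records are assumptions for illustration; they are not tied to any particular evaluation library, and a real application would pick metrics that fit its task.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of relevant document IDs that appear among the top-k retrieved IDs."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def exact_match(prediction: str, reference: str) -> bool:
    """Compare answers, ignoring case and surrounding whitespace."""
    return prediction.strip().lower() == reference.strip().lower()


# Hypothetical evaluation records: retrieved IDs, gold IDs, model answer, gold answer.
EXAMPLES = [
    {"retrieved": ["d1", "d4", "d2"], "relevant": {"d1", "d2"},
     "prediction": "Paris", "reference": "paris"},
    {"retrieved": ["d9", "d3", "d5"], "relevant": {"d3", "d7"},
     "prediction": "1989", "reference": "1991"},
]


def evaluate(examples: list[dict], k: int = 2) -> dict[str, float]:
    """Report one averaged score per component instead of a single end-to-end number."""
    n = len(examples)
    retrieval = sum(recall_at_k(e["retrieved"], e["relevant"], k) for e in examples) / n
    generation = sum(exact_match(e["prediction"], e["reference"]) for e in examples) / n
    return {"recall_at_k": retrieval, "exact_match": generation}


if __name__ == "__main__":
    print(evaluate(EXAMPLES, k=2))
```

Keeping the two scores apart shows whether a wrong answer traces back to a missing document or to the generation step.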

Despite rapidly changing approaches, there are a couple of key principles for building effective compound AI systems:

- Develop a strong evaluation system: Compound AI systems tend to involve numerous interacting components, and changing any one of them can impact the performance of the whole system. It is essential to come up with an effective way to measure the performance of the system and set up the necessary infrastructure to record, access and act on these evaluations.

- Experiment with different approaches: As noted above, there are few established best practices for building compound AI systems. In all likelihood, it will be necessary to experiment with different ways of integrating AI models and other tools, both in terms of the overall application control logic and the individual components. Modularity helps with experimentation: It is easier to experiment with an application that supports swapping out different models, data retrievers, tools, etc. MLflow provides a suite of tools for evaluation and experimentation, making it especially useful for developing compound AI systems.
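
One way to get the modularity described above is to define a small interface per component so that implementations can be swapped without touching the control logic. The sketch below assumes a two-stage pipeline; the class names (`KeywordRetriever`, `EmptyRetriever`, `TemplateGenerator`, `Pipeline`) are hypothetical and the generator is a stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Protocol


class Retriever(Protocol):
    def retrieve(self, query: str) -> list[str]: ...


class Generator(Protocol):
    def generate(self, query: str, context: list[str]) -> str: ...


class KeywordRetriever:
    """Returns every document that shares at least one word with the query."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str) -> list[str]:
        words = set(query.lower().split())
        return [d for d in self.documents if words & set(d.lower().split())]


class EmptyRetriever:
    """Baseline that retrieves nothing, useful for ablation experiments."""

    def retrieve(self, query: str) -> list[str]:
        return []


class TemplateGenerator:
    """Stand-in for a model call (assumption): fills a fixed template."""

    def generate(self, query: str, context: list[str]) -> str:
        if not context:
            return "I don't know."
        return f"{len(context)} passage(s) found: {context[0]}"


@dataclass
class Pipeline:
    """Programmatic control flow: always retrieve, then generate."""

    retriever: Retriever
    generator: Generator

    def run(self, query: str) -> str:
        return self.generator.generate(query, self.retriever.retrieve(query))


if __name__ == "__main__":
    docs = ["the retriever fetches documents", "the generator writes answers"]
    for retriever in (KeywordRetriever(docs), EmptyRetriever()):
        print(Pipeline(retriever, TemplateGenerator()).run("who fetches documents"))
```

Because `Pipeline` depends only on the two interfaces, comparing a new retriever or model is a one-line change, which keeps experiments cheap.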

What are the key challenges in building compound AI systems?

Compound AI systems introduce several key development challenges compared to AI models alone:

- Larger design space: Compound AI systems combine one or more AI models with tools such as retrievers or code interpreters, data sources, code libraries and more. There are typically multiple options for each of these components. Developing a RAG application, for example, typically requires choosing (at least) which models to use for embeddings and text generation, which data source or sources to use, where to store the data, and what retrieval system to use. Without clear best practices, developers must often invest significant effort to explore this vast design space and find a working solution.

- Co-optimizing system components: AI models often need to be optimized to work well with specific tools and vice versa. Changing one component of a compound AI system can change the behavior of the whole system in unexpected ways, and it can be challenging to make all the components work well together. For example, in a RAG system, one LLM might work very well with a given retrieval system, while another may not.

- Complex operations: LLMs alone can already be challenging to serve, monitor and secure. Combining them with other AI models and tools can compound these challenges. Using these compound systems may require developers to combine MLOps and DataOps tools and practices in unique ways in order to monitor and debug applications. Databricks Lakehouse Monitoring can provide excellent visibility into the complex data and modeling pipelines in compound AI systems.
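
To give a sense of how quickly the design space grows, the sketch below enumerates every combination of a few component choices and picks the best one under a scoring function. The option names in `DESIGN_SPACE` are hypothetical placeholders, and `toy_score` is an assumption standing in for a real evaluation run over each configuration.

```python
from itertools import product
from typing import Callable

# Hypothetical options; substitute the real candidates for each component.
DESIGN_SPACE = {
    "embedding_model": ["embed-small", "embed-large"],
    "generator_model": ["gen-small", "gen-large"],
    "retriever": ["keyword", "vector", "hybrid"],
    "chunk_size": [256, 512],
}


def enumerate_configs(space: dict[str, list]) -> list[dict]:
    """Build one configuration per combination of component options."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


def best_config(space: dict[str, list], score_fn: Callable[[dict], float]) -> dict:
    """Exhaustive search: score every configuration and keep the highest."""
    return max(enumerate_configs(space), key=score_fn)


def toy_score(config: dict) -> float:
    """Placeholder for a real evaluation run (assumption, not measured data).

    The interaction term mimics components that only work well together.
    """
    score = 0.0
    if config["retriever"] == "hybrid":
        score += 1.0
    if config["chunk_size"] == 512:
        score += 0.5
    if config["embedding_model"] == "embed-large" and config["generator_model"] == "gen-small":
        score += 2.0
    return score


if __name__ == "__main__":
    print(len(enumerate_configs(DESIGN_SPACE)), "configurations")
    print(best_config(DESIGN_SPACE, toy_score))
```

Four components with two or three options each already produce 24 configurations, and exhaustive search stops being practical as options are added, which is why a reliable evaluation harness matters so much.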