Managed MLflow

Natively manage AI models, agents and apps with MLflow in Databricks

What is Managed MLflow?

Managed MLflow on Databricks provides experiment tracking, observability, performance evaluation, and model management for the full spectrum of machine learning and AI, from classical models and deep learning to generative AI applications and agents, all natively within the Databricks Data Intelligence Platform. Managed MLflow is built on open-source MLflow and adds enterprise-grade reliability, security, and scalability. Enterprises can build high-quality models and agents with their preferred tools across the AI and ML ecosystem while keeping their AI and data assets governed and protected.

Benefits

Unified ML and GenAI lifecycle

Managed MLflow unifies classical ML, deep learning, and GenAI development in a single, streamlined workflow. From experiment tracking to deployment, it delivers consistent versioning, prompt management, and packaging across models and agents, so you no longer need to stitch together separate tools.
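As a rough illustration of the experiment-tracking step in that workflow, the sketch below logs one run with the open-source MLflow tracking API. The helper names `flatten_params` and `log_training_run`, the experiment path, and the metric names are hypothetical and not taken from this page. It assumes the `mlflow` package is installed and a tracking server is already configured, for example inside a Databricks notebook.

```python
from typing import Dict


def flatten_params(config: Dict, prefix: str = "") -> Dict[str, str]:
    """Flatten a nested config dict into dotted keys so it can be logged as run parameters."""
    flat: Dict[str, str] = {}
    for key, value in config.items():
        name = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            # Recurse into nested sections, carrying the dotted prefix along.
            flat.update(flatten_params(value, name))
        else:
            flat[name] = str(value)
    return flat


def log_training_run(experiment: str, config: Dict, metrics: Dict[str, float]) -> str:
    """Log one run and return its run ID (hypothetical helper, not part of MLflow)."""
    # Imported lazily so flatten_params stays usable without mlflow installed.
    import mlflow

    mlflow.set_experiment(experiment)
    with mlflow.start_run() as run:
        mlflow.log_params(flatten_params(config))
        mlflow.log_metrics(metrics)
        return run.info.run_id
```

A call might look like `log_training_run("/Shared/demo-experiment", {"model": {"depth": 3}, "lr": 0.1}, {"accuracy": 0.91})`, where the experiment path is a placeholder.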

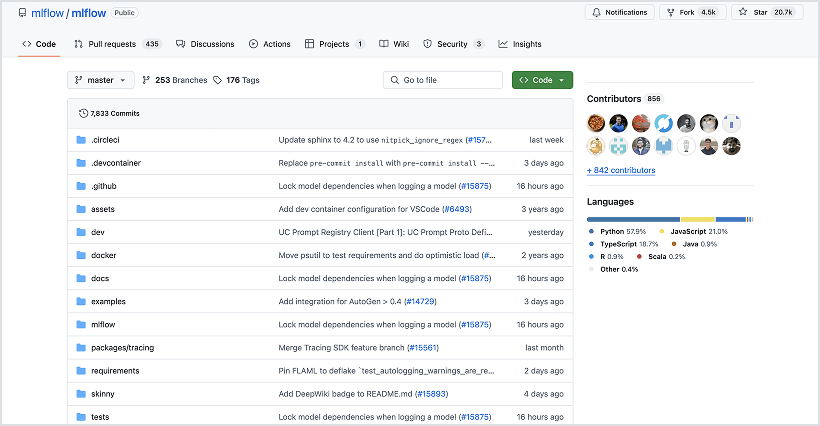

Flexible and open-source

Avoid vendor lock-in and maintain full flexibility across your stack. Managed MLflow is built on open-source MLflow, which has over 800 community contributors and 25+ million monthly package downloads and is trusted by more than 5,000 organizations worldwide, so it supports your choice of frameworks, languages, and tools. You get the freedom and reliability of open source, plus the simplicity of a fully managed experience.

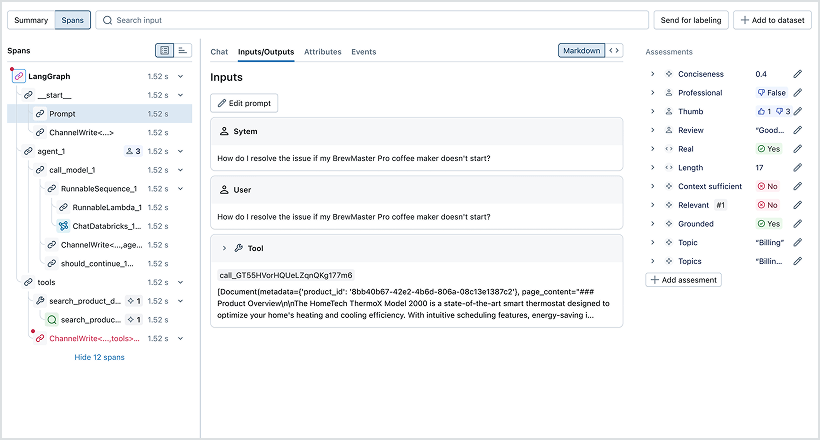

Enterprise-grade observability and governance

Deeply integrated within the Databricks platform, Managed MLflow provides full traceability, real-time monitoring, and unified governance across your AI workflows. With Unity Catalog, you can automatically enforce access controls, track lineage, and ensure compliance across your models, data, and agents.
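To make the Unity Catalog integration a little more concrete, here is a minimal sketch of registering a logged model under a three-level `catalog.schema.model` name. The helpers `uc_model_name` and `register_in_unity_catalog` and the example names are hypothetical. The MLflow calls `set_registry_uri("databricks-uc")` and `register_model` are real, but running them assumes a Databricks workspace with Unity Catalog enabled and suitable permissions.

```python
def uc_model_name(catalog: str, schema: str, model: str) -> str:
    """Build a three-level Unity Catalog name, rejecting empty or dotted parts."""
    parts = (catalog, schema, model)
    for part in parts:
        if not part or "." in part:
            raise ValueError(f"invalid name component: {part!r}")
    return ".".join(parts)


def register_in_unity_catalog(model_uri: str, catalog: str, schema: str, model: str):
    """Register an already-logged model in Unity Catalog (hypothetical helper)."""
    # Imported lazily so uc_model_name stays usable without mlflow installed.
    import mlflow

    # Point the MLflow client at the Unity Catalog model registry.
    mlflow.set_registry_uri("databricks-uc")
    return mlflow.register_model(model_uri, uc_model_name(catalog, schema, model))
```

Access controls and lineage are then managed on the registered model through Unity Catalog rather than in the calling code.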

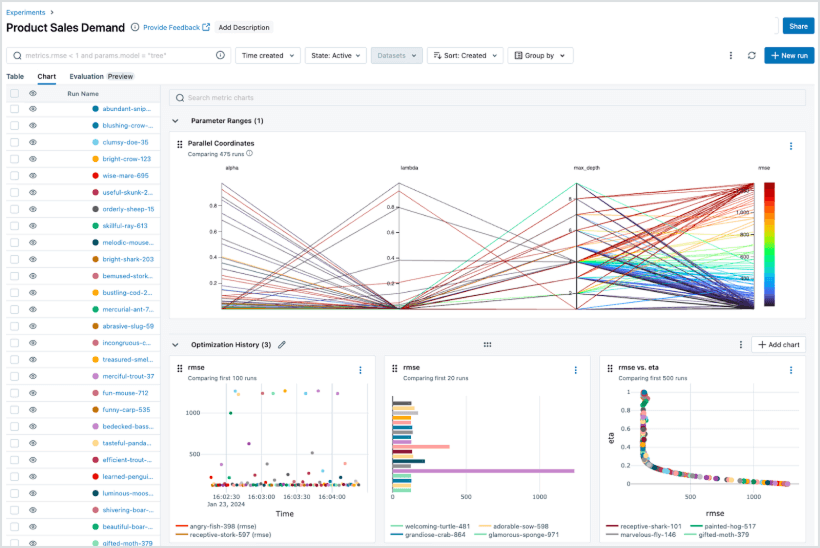

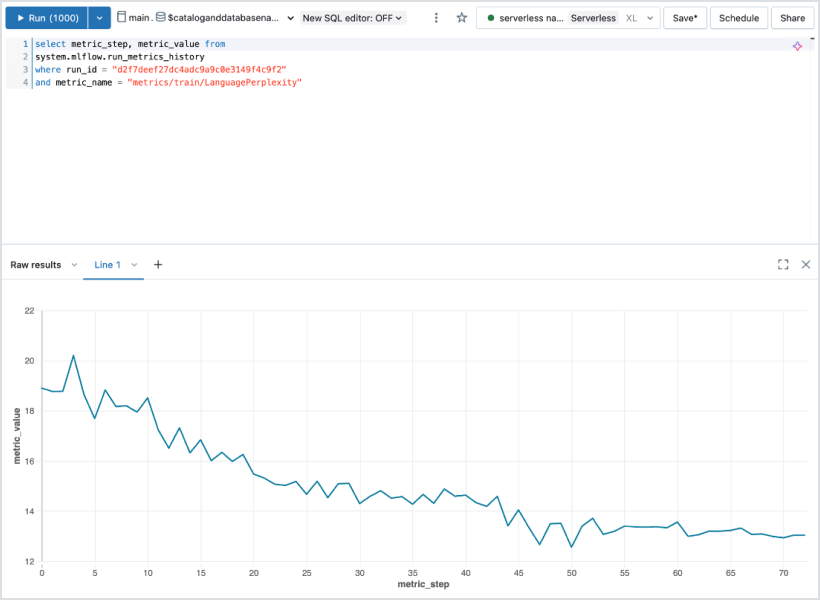

Powerful performance analytics

Analyze, compare, and visualize performance across development, staging, and production, all from a single place. With MLflow's unified data model and integration with Databricks AI/BI and SQL, data scientists can uncover trends, identify regressions, and drive business impact using the same platform they use to build and deploy.
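One way to picture "identify regressions" in code is to compare the metrics of a baseline run against a candidate run. The sketch below is only an illustration: `find_regressions` and `top_runs` are hypothetical helpers, the metric names are made up, and it assumes higher metric values are better. `mlflow.search_runs` is the open-source API for querying runs and needs `mlflow` installed with a configured tracking server.

```python
from typing import Dict, Tuple


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.0,
) -> Dict[str, Tuple[float, float]]:
    """Return metrics where the candidate is worse than the baseline by more than tolerance.

    Assumes higher is better for every metric; only metrics present in both runs are compared.
    """
    return {
        name: (baseline[name], candidate[name])
        for name in baseline
        if name in candidate and baseline[name] - candidate[name] > tolerance
    }


def top_runs(experiment_name: str, metric: str, top_n: int = 5):
    """Fetch the best runs of an experiment by one metric (hypothetical helper)."""
    # Imported lazily so find_regressions stays usable without mlflow installed.
    import mlflow

    return mlflow.search_runs(
        experiment_names=[experiment_name],
        order_by=[f"metrics.{metric} DESC"],
        max_results=top_n,
    )
```

For example, `find_regressions({"accuracy": 0.90}, {"accuracy": 0.85}, tolerance=0.01)` flags `accuracy`, because the drop exceeds the tolerance.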


See our Product News from Azure Databricks and AWS to learn more about our latest features.

Comparing MLflow offerings

| Feature | Open Source MLflow | Managed MLflow on Databricks |
|---|---|---|
| Tracing & AI Observability | | |
| Tracing APIs | | |
| Notebook debugging integration | | |
| Tracing for production applications | | |
| Customizable observability dashboards | | |
| Query trace data with SQL and AI/BI tools | | |
| Production monitoring | | |
| Generative AI Evaluation | | |
| Evaluation APIs | | |
| Human feedback UI and APIs | | |
| High-quality LLM judges | | |
| Versioned evaluation datasets | | |
| Prompt Management | | |
| MLflow Prompt Registry | | |
| Prompt editor UI | | |
| Experiment Tracking | | |
| MLflow tracking API | | |
| Rich performance & comparison dashboards | | |
| Query experiment data with SQL and AI/BI tools | | |
| MLflow tracking server | Self-hosted | Fully managed |
| Notebooks integration | | |
| Workflows integration | | |
| Model Management | | |
| MLflow Model Registry | | |
| Model versioning | | |
| Role-based approval workflows | | |
| CI/CD workflow integrations | | |
| Flexible Deployment | | |
| Model packaging | | |
| Large scale batch inference | | |
| Low-latency real-time deployment | | |
| Built-in streaming analytics | | |
| Security and Management | | |
| Enterprise governance | | |
| High availability | | |
| Automated updates | | |
