TensorFlow Estimator API

What is the TensorFlow Estimator API?

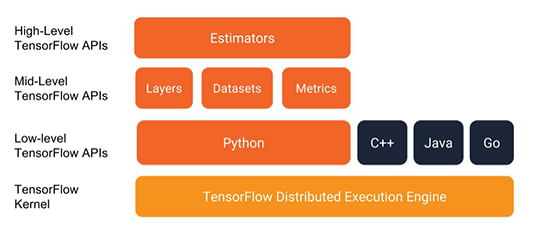

Estimators represent a complete model while remaining intuitive enough for less experienced users. The Estimator API provides methods to train the model, evaluate its accuracy, and generate predictions. TensorFlow provides a programming stack consisting of multiple API layers, as shown in the image above.

There are two types of Estimators: pre-made Estimators that you use as they are, and custom Estimators that you write yourself. Estimator-based models can run on a local host or in a distributed multi-server environment without changes to the model. In addition, you can run Estimator-based models on CPUs, GPUs, or TPUs without recoding the model.

Estimators Encapsulate Four Main Features:

- Training: they train a model on a given input for a fixed number of steps.

- Evaluation: they evaluate the model on a test set.

- Prediction: they run inference using the trained model.

- Export: they export the model for serving.

On top of that, an Estimator includes default behavior common to training jobs, such as saving and restoring checkpoints and creating summaries. A custom Estimator requires you to write a model_fn and an input_fn, which correspond to the model and input portions of your TensorFlow graph; a pre-made Estimator supplies the model_fn for you, so you only write the input_fn.
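To make the split between model_fn and input_fn concrete, here is a minimal sketch in plain Python. It is not TensorFlow code and does not reproduce the real Estimator signatures: `ToyEstimator`, `linear_model_fn`, and `make_input_fn` are hypothetical names invented for illustration, and the model is a one-variable linear regression. The point is only the shape of the contract: the input function yields batches, the model function turns a batch into predictions, a loss, and gradients, and the estimator object owns training, evaluation, and prediction.

```python
from itertools import cycle, islice


def make_input_fn(xs, ys, batch_size=2):
    """Hypothetical helper: build an input_fn that yields (features, labels) batches."""
    def input_fn():
        for i in range(0, len(xs), batch_size):
            yield xs[i:i + batch_size], ys[i:i + batch_size]
    return input_fn


def linear_model_fn(features, labels, params):
    """Toy model_fn for y = w * x + b; returns (predictions, loss, gradients)."""
    preds = [params["w"] * x + params["b"] for x in features]
    if labels is None:  # prediction mode: no loss or gradients needed
        return preds, None, None
    n = len(features)
    errors = [p - y for p, y in zip(preds, labels)]
    loss = sum(e * e for e in errors) / n  # mean squared error
    grads = {
        "w": 2.0 * sum(e * x for e, x in zip(errors, features)) / n,
        "b": 2.0 * sum(errors) / n,
    }
    return preds, loss, grads


class ToyEstimator:
    """Plain-Python stand-in for an Estimator (an assumption, not the real class)."""

    def __init__(self, model_fn, learning_rate=0.05):
        self.model_fn = model_fn
        self.learning_rate = learning_rate
        self.params = {"w": 0.0, "b": 0.0}
        self.global_step = 0

    def train(self, input_fn, steps):
        """Run exactly `steps` gradient updates, repeating the data as needed."""
        for features, labels in islice(cycle(input_fn()), steps):
            _, _, grads = self.model_fn(features, labels, self.params)
            for name in self.params:
                self.params[name] -= self.learning_rate * grads[name]
            self.global_step += 1
        return self

    def evaluate(self, input_fn):
        """Average the loss over one pass of the evaluation data."""
        losses = [self.model_fn(f, l, self.params)[1] for f, l in input_fn()]
        return {"loss": sum(losses) / len(losses), "global_step": self.global_step}

    def predict(self, input_fn):
        """Yield one prediction per example; labels are ignored."""
        for features, _ in input_fn():
            preds, _, _ = self.model_fn(features, None, self.params)
            yield from preds


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

est = ToyEstimator(linear_model_fn)
est.train(make_input_fn(xs, ys), steps=2000)
metrics = est.evaluate(make_input_fn(xs, ys))
predictions = list(est.predict(make_input_fn([4.0], [0.0])))
```

Because the data pipeline lives entirely in the input function, the same estimator object can be trained, evaluated, and queried against different datasets without touching the model code.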

Estimators Come with Numerous Benefits:

- Estimators simplify sharing implementations between model developers.

- Estimators let you develop a model with high-level, intuitive code; they are usually easier to use than the low-level TensorFlow APIs.

- Estimators are themselves built on tf.keras.layers, which simplifies customization.

- Estimators build the graph for you.

- Estimators provide a safe distributed training loop that controls how and when to:

  - build the graph

  - initialize variables

  - load data

  - handle exceptions

  - create checkpoint files and recover from failures

  - save summaries for TensorBoard
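The checkpoint-and-recover part of such a training loop can be illustrated with a small plain-Python sketch. This is an assumption-laden toy, not how TensorFlow implements it: the JSON checkpoint file, the `save_checkpoint`, `load_checkpoint`, and `train` helpers, and the `fail_at_step` parameter are all hypothetical and exist only to simulate a crash. The loop minimizes (w - 3)^2, writes its state every few steps, and on restart picks up from the last saved step instead of starting over.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write to a temp file, then rename, so a crash cannot leave a partial file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or freshly initialized variables if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "w": 0.0}


def train(model_dir, total_steps, checkpoint_every=10, fail_at_step=None):
    """Toy loop: gradient descent on (w - 3)^2 with periodic checkpoints."""
    path = os.path.join(model_dir, "checkpoint.json")
    state = load_checkpoint(path)  # restore from disk or initialize
    while state["step"] < total_steps:
        if state["step"] == fail_at_step:
            raise RuntimeError("simulated worker failure")
        grad = 2.0 * (state["w"] - 3.0)
        state["w"] -= 0.1 * grad
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # always persist the final state
    return state


model_dir = tempfile.mkdtemp()

# First run dies at step 25; the most recent checkpoint is from step 20.
try:
    train(model_dir, total_steps=50, fail_at_step=25)
except RuntimeError:
    pass

resumed_from = load_checkpoint(os.path.join(model_dir, "checkpoint.json"))["step"]

# Second run restores the checkpoint and continues to step 50.
final_state = train(model_dir, total_steps=50)
```

Only the work done after the last checkpoint (steps 21 to 25 here) is lost and repeated, which is the trade-off a checkpoint interval controls: more frequent checkpoints cost more I/O but lose less progress on failure.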