Running Apache Spark Clusters with Spot Instances in Databricks

Reduce your EC2 costs without sacrificing predictability

Using Amazon EC2 Spot instances to launch your Apache Spark clusters can significantly reduce the cost of running your big data applications in the cloud. But how do you manage the risks of nodes terminating and balance that with cost savings?

In this blog post, I’ll cover what Spot instances are and how they can dramatically lower your computing costs for jobs that are time-flexible and tolerant of interruption. Then I’ll explain how you can combine Spot and On-Demand instances in the same cluster to get the best of both worlds.

With Databricks, you can run the Spark Master node and some dedicated workers On-Demand supplemented by more workers on Spot instances. The On-Demand workers guarantee your jobs will eventually complete (ensuring predictability), while the Spot instances accelerate the job completion times.

What are EC2 Spot instances?

The idea behind Spot instances is to allow you to bid on spare Amazon EC2 compute capacity. You choose the max price you’re willing to pay per EC2 instance hour. If your bid meets or exceeds the Spot market price, you win the Spot instances. However, unlike traditional bidding, when your Spot instances start running, you pay the live Spot market price (not your bid amount). Spot prices fluctuate based on the supply and demand of available EC2 compute capacity and are specific to different regions and availability zones.

So, although you may have bid $0.55 per hour for an r3.2xlarge instance, you’ll end up paying only $0.10 an hour if that’s the going rate for the region and availability zone.

The first hour of your Spot instance price is based on the current market price at the time of launch. AWS then re-evaluates this market price every hour.

If the Spot market price increases above your bid price at any point, your EC2 instance could be automatically terminated and you are not charged for that last partial hour. However, if you manually terminate your Spot instances, you will be billed for the full last hour.
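The billing rules above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not AWS's actual billing engine: it assumes one market price per instance hour, treats any hour in which the price exceeds the bid as the interrupted (and therefore uncharged) hour, and ignores the manual-termination case. The function name `simulate_spot_billing` and the sample prices are made up for illustration.

```python
from typing import List, Tuple


def simulate_spot_billing(bid: float, hourly_market_prices: List[float]) -> Tuple[int, float, bool]:
    """Return (hours_billed, total_cost, terminated_by_aws) for one Spot instance.

    Assumption: one market price per hour, in dollars. You pay the market
    price, not the bid, for every hour that completes.
    """
    hours_billed = 0
    total_cost = 0.0
    for price in hourly_market_prices:
        if price > bid:
            # Market price rose above the bid: the instance is reclaimed
            # and the interrupted hour is not charged.
            return hours_billed, total_cost, True
        hours_billed += 1
        total_cost += price
    return hours_billed, total_cost, False


# Hypothetical price series: the third hour spikes above a $0.55 bid.
hours, cost, terminated = simulate_spot_billing(0.55, [0.10, 0.12, 0.60, 0.10])
print(hours, f"{cost:.2f}", terminated)
```

With these sample prices the instance is billed for two hours at the market rate ($0.22 in total) and is then reclaimed when the price passes the bid.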

This is different from the traditional On-Demand instances, where Amazon guarantees the availability of instances (99.95% SLA) but dictates the price they'll charge you per hour. On-demand pricing is statically set and rarely changes.

How significant can the cost savings be?

At the time of writing this (October 2016), an On-Demand r3.2xlarge instance (with 8 vCPUs and 61 GB RAM) costs $0.665 per hour in the US East region. However, the current Spot price for that instance is only $0.10… a cost savings of over 80%!
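As a quick check of that figure, here is the arithmetic using the two prices quoted above. The helper name `spot_savings_percent` is just for illustration.

```python
def spot_savings_percent(on_demand_price: float, spot_price: float) -> float:
    """Percentage saved by paying the Spot price instead of the On-Demand price."""
    return (on_demand_price - spot_price) / on_demand_price * 100.0


# Prices quoted in this post for an r3.2xlarge in US East (October 2016).
savings = spot_savings_percent(0.665, 0.10)
print(f"{savings:.1f}% cheaper than On-Demand")
```

That works out to roughly 85%, which is where the "over 80%" figure comes from.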

Launching Spark Clusters with Spot instances

There are several ways to use Spot instances in your Spark clusters:

- Mix of On-Demand and Spot instances in a single cluster (Hybrid model):
  - Only Master node (with Spark Driver) is On-Demand, with all workers running on Spot instances
  - Master node and some dedicated workers are On-Demand, while other workers are on Spot instances
  - In either of these two hybrid models, terminated Spot instances could optionally be replaced by On-Demand instances
- 100% Spot instance based cluster:
  - If prices spike, terminate cluster
  - If prices spike, try to relaunch nodes with On-Demand instances so cluster fully recovers, but costs more to run (this option can only take place if the Spot based Driver is not terminated because of the price spike)

Databricks supports all of the variations above. I’ll first discuss the pros/cons and use cases of the hybrid model, which is our recommendation for most customers. Then I’ll discuss launching 100% Spot instance clusters. Finally, I’ll show you how to set the actual bid price in Databricks and research the historic and live Spot market prices for different instance types.

Mixing On-Demand and Spot instances in the same Cluster (Default and Recommended Approach)

What if you want to get the best of both worlds… a single Spark cluster with a combination of On-Demand and Spot instances?

This would allow you to launch the Spark Driver On-Demand to ensure the stability of your Spark cluster. The Worker nodes could be a mix of On-Demand and Spot. The On-Demand instances guarantee your Spark jobs will run to completion, while the Spot instances let you accelerate batch workloads affordably.

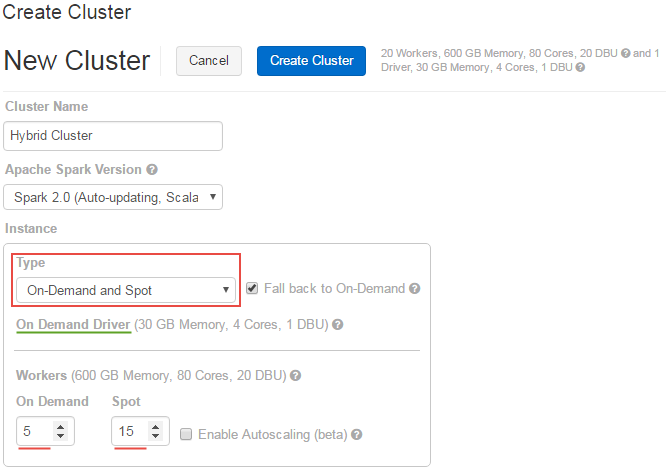

Creating Spark clusters with a mix of On-Demand and Spot EC2 instances is simple in Databricks. On the Create Cluster page, just choose the default of “On-Demand and Spot” Type from the drop-down and pick the number of On-Demand vs Spot instances you want:

The screenshot above shows a minimum of 5 On-Demand worker instances and a variable amount of 15 Spot instances.

In “On-Demand and Spot” type clusters, the Driver is always run on an On-Demand instance (see green line above). The numbers you choose for On Demand and Spot only affect the worker nodes.

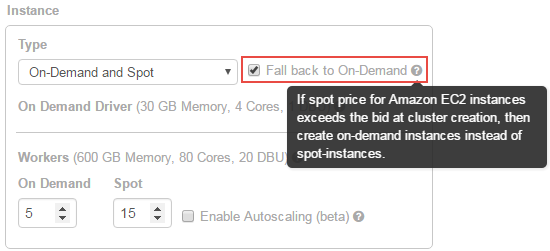

The “Fall back to On-demand” option will be turned on by default:

When enabled, this setting will launch your Spark cluster with On-Demand instances if the Spot market price is greater than your bid price at the cluster creation time.

Additionally, while your Spot instances are running, if the Spot price increases above your bid price, the “Fall back to On-Demand” feature will re-launch your terminated Spot instances as On-Demand instances.

Once a node falls back to On-Demand, it remains On-Demand for the duration of the cluster’s lifetime.

“Fall back to On-Demand” is recommended for use cases where you want Databricks to always aim to keep the cluster at the desired size, even if that means launching On-Demand nodes. This brings predictability to your workloads, as you can pre-calculate how long it takes to run a Job on a specific size cluster.

If you’re planning on doing exploratory data analysis and are okay with the Spot nodes in the cluster terminating and running in a degraded state, then uncheck the “Fall back to On-demand” option.

If you turn off the “Fall back to On-demand” option, you will never pay more than your bid price for each EC2 Spot instance (see how to set your bid price below).
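To make the difference between the two settings concrete, here is a toy simulation of the worker counts over time. It is only a sketch of the behavior described above, not Databricks' implementation. It assumes that all Spot workers share one market price and are terminated together when that price exceeds the bid, and that without fallback the lost Spot workers are not re-acquired. The function name and the price series are hypothetical.

```python
from typing import List, Tuple


def simulate_workers(
    on_demand_workers: int,
    spot_workers: int,
    bid: float,
    market_prices: List[float],
    fall_back: bool,
) -> List[Tuple[int, int]]:
    """Return (on_demand, spot) worker counts after each price observation."""
    history = []
    for price in market_prices:
        if price > bid and spot_workers > 0:
            if fall_back:
                # Terminated Spot workers are relaunched On-Demand and
                # stay On-Demand for the rest of the cluster's lifetime.
                on_demand_workers += spot_workers
            # Without fallback the cluster keeps running in a degraded state.
            spot_workers = 0
        history.append((on_demand_workers, spot_workers))
    return history


prices = [0.10, 0.70, 0.10]  # hypothetical spike above a $0.665 bid
print(simulate_workers(5, 15, 0.665, prices, fall_back=True))
print(simulate_workers(5, 15, 0.665, prices, fall_back=False))
```

With fallback enabled the cluster ends up with 20 On-Demand workers. With it disabled the cluster shrinks to the 5 On-Demand workers, but no hour ever costs more than the bid.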

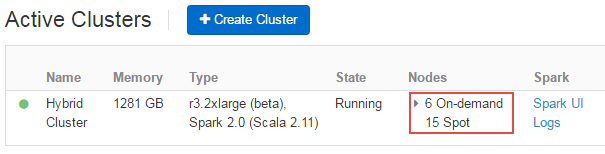

Once your cluster has launched, you can check how many nodes are On-Demand vs. Spot in the active clusters dashboard:

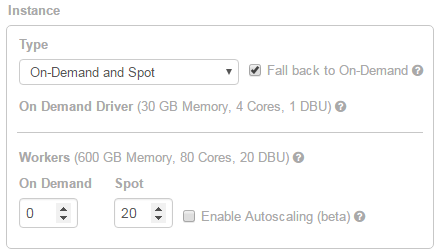

For example, to run a hybrid cluster with only the Master/Driver node On-Demand with 20 worker Spot instances, use the following settings:

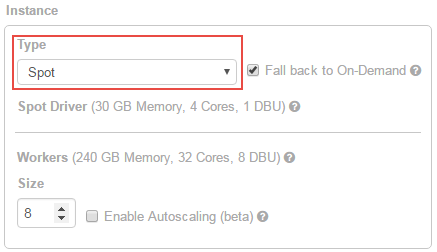

Note in the screenshot above that “Fall back to On-Demand” is enabled, so if any of the 20 Spot instances terminate, they will automatically be replaced by On-Demand instances so the cluster will aim to always run with 20 nodes. If you’re okay running in a degraded state with only a fraction of the 20 Spot instances, uncheck “Fall back to On-Demand”.
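This post configures everything through the Create Cluster page. If you would rather script it, the same choices can be written down as a cluster specification. The sketch below is an assumption on my part and is not shown anywhere in this post: `hybrid_cluster_spec` is a hypothetical helper, and the field names follow the `aws_attributes` block of the Databricks Clusters REST API as I understand it, where `first_on_demand` counts the driver as well as workers. Verify the names and values against the current API documentation before relying on them.

```python
from typing import Any, Dict


def hybrid_cluster_spec(
    name: str,
    spark_version: str,
    node_type: str,
    num_workers: int,
    on_demand_workers: int,
    bid_percent: int = 100,
    fall_back: bool = True,
) -> Dict[str, Any]:
    """Build a cluster spec dict for a hybrid On-Demand and Spot cluster (hypothetical helper)."""
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type,
        "num_workers": num_workers,
        "aws_attributes": {
            # Assumed to include the driver, so 1 means "driver only".
            "first_on_demand": on_demand_workers + 1,
            "availability": "SPOT_WITH_FALLBACK" if fall_back else "SPOT",
            # Bid expressed as a percentage of the On-Demand price.
            "spot_bid_price_percent": bid_percent,
        },
    }


# Driver On-Demand plus 20 Spot workers, as in the example above.
# "<spark-version>" is a placeholder, not a real version string.
spec = hybrid_cluster_spec("hybrid-demo", "<spark-version>", "r3.2xlarge", 20, 0)
print(spec["aws_attributes"])
```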


100% Spot instance based clusters

Using 100% Spot instance based Spark clusters is not recommended for production workloads because of the threat of losing production jobs to unpredictable Spot prices. Specifically, if the EC2 Spot instance running the Master node (with Spark Driver) is terminated, the entire cluster is also immediately terminated.

Nevertheless, 100% Spot instances are commonly used when Data Analysts or Data Scientists are exploring new data sets. If their Spot cluster terminates, they can quickly launch a new cluster with a higher bid price or with On-Demand instances instead. In either recovery attempt, the analyst or scientist can easily re-run their Notebook with one click (the ‘Run All’ button in Databricks).

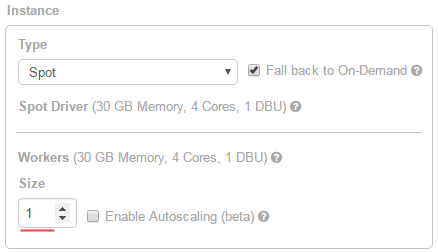

Creating Spark clusters with only EC2 Spot instances is simple in Databricks. On the Create Cluster page, choose “Spot” for the Instance type:

If you want the most affordable Spark cluster that can run Spark commands, use a size of 1:

Spot Clusters with size 1 will use two EC2 spot instances, one for the Spark Driver and another for the Spark Worker.

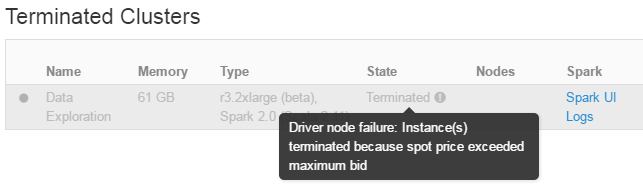

How do you know if a cluster terminated because Spot market price exceeded your bid price? Hover over a cluster’s state to see why it was terminated:

How do you set your bid price?

Now that you’ve seen the different types of clusters Databricks supports, you may be wondering how to control the bid price.

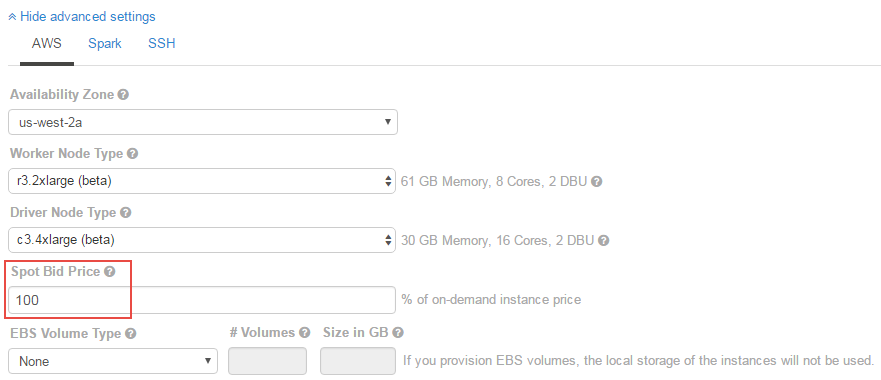

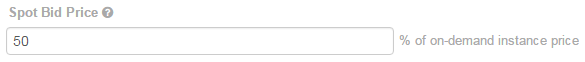

On the Create Cluster page, scroll down and click on “Show advanced settings”, then notice the Spot Bid Price field:

Databricks defaults to a bid price that is equal to the current On-Demand price. So if the On-Demand price for your instance type is $0.665 per hour, then the default bid price is also set to $0.665 per hour.

Recall that with Spot instances, you don’t necessarily pay your actual bid price; you pay the Spot market price for your instance type. So, even though you may have bid $0.665, in reality you may only be charged $0.10 for the hour.

By setting your bid price to 100% of the on-demand price, you’re essentially saying “charge me whatever the live Spot market price is, but never more than the on-demand price for the same instance type”.

However, sometimes achieving cost savings is the top priority. In this case Databricks lets you set your own bid price. Let’s say you are only willing to pay up to 50% of the On-Demand price. You can change your bid accordingly by clicking on “Show advanced settings” and changing the “Spot Bid Price” field:

On the other hand, if stability matters more and you don't mind occasionally paying more than the On-Demand price for a given hour, you can bid above 100%, say 120% or 150%. You are still billed the Spot market price, so although some hours may cost more than the On-Demand equivalent, the total cost for your cluster should generally be lower than running On-Demand the whole time.
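Here is a small worked example of bidding above 100%. The On-Demand price is the one quoted in this post; the hourly Spot prices are invented for illustration, and the helper names are hypothetical.

```python
from typing import List, Tuple


def bid_price(on_demand_price: float, percent: float) -> float:
    """Convert a bid expressed as a percentage of On-Demand into dollars per hour."""
    return on_demand_price * percent / 100.0


def compare_totals(on_demand_price: float, hourly_spot_prices: List[float]) -> Tuple[float, float]:
    """Return (spot_total, on_demand_total) for the same number of hours."""
    spot_total = sum(hourly_spot_prices)
    on_demand_total = on_demand_price * len(hourly_spot_prices)
    return spot_total, on_demand_total


on_demand = 0.665
bid = bid_price(on_demand, 120)  # bid at 120% of On-Demand

# Hypothetical prices: one hour spikes above On-Demand but stays under the bid.
prices = [0.10, 0.12, 0.75, 0.10, 0.11]
assert all(p <= bid for p in prices)  # the instance is never out-bid

spot_total, on_demand_total = compare_totals(on_demand, prices)
print(f"bid ${bid:.3f}/h, Spot total ${spot_total:.2f}, On-Demand total ${on_demand_total:.2f}")
```

In this made-up series, the hour at $0.75 costs more than On-Demand would have, yet the five hours together still cost far less on Spot. Whether that holds for your workload depends on how often and how high prices actually spike.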

How much is the On-Demand price vs the Spot market price right now?

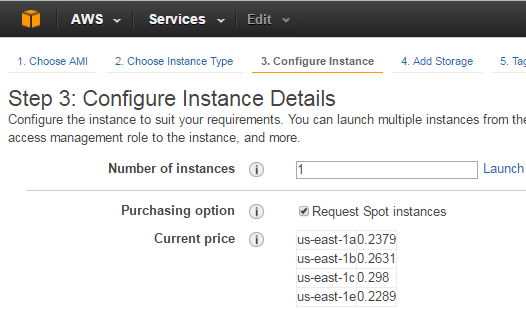

You can look up the current On-Demand instance prices on the AWS EC2 Pricing page. To see the live Spot market price for your instance type, one way is to attempt launching that instance via the EC2 console and check “Request Spot instances” during Step 3:

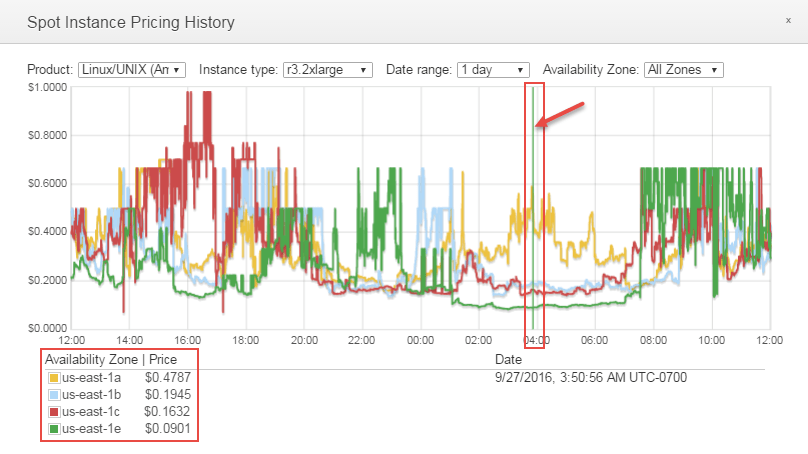

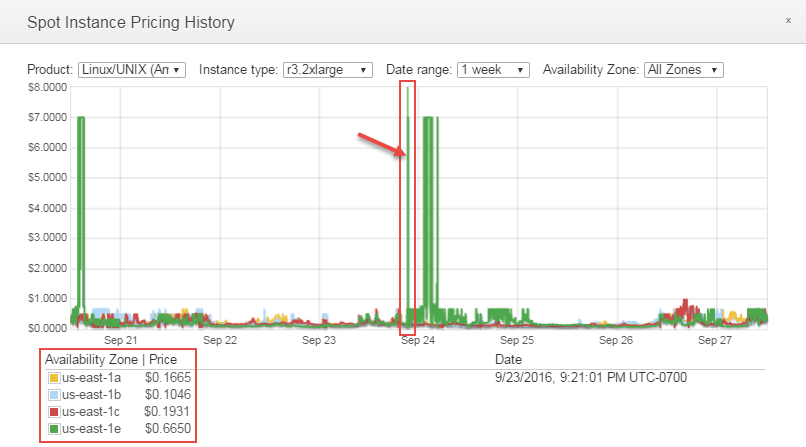

For historic trend analysis of Spot market prices, I like using Amazon’s official Pricing History and Spot Bid Advisor, along with this unofficial EC2 Spot Prices website.

To access the official Spot Pricing History, log into your AWS console, go to the EC2 services dashboard, choose “Spot Requests” in the left pane, then click the Pricing History button at the top.

As you hover your mouse across the chart, the prices in the bottom left change based on their historical values. You can choose date ranges of 1 day, 1 week, 1 month and 3 months.

If I zoom out to 1 week, I can see that the us-east-1e availability zone has experienced multiple price spikes, so perhaps I’ll avoid this zone when launching my Spot cluster:

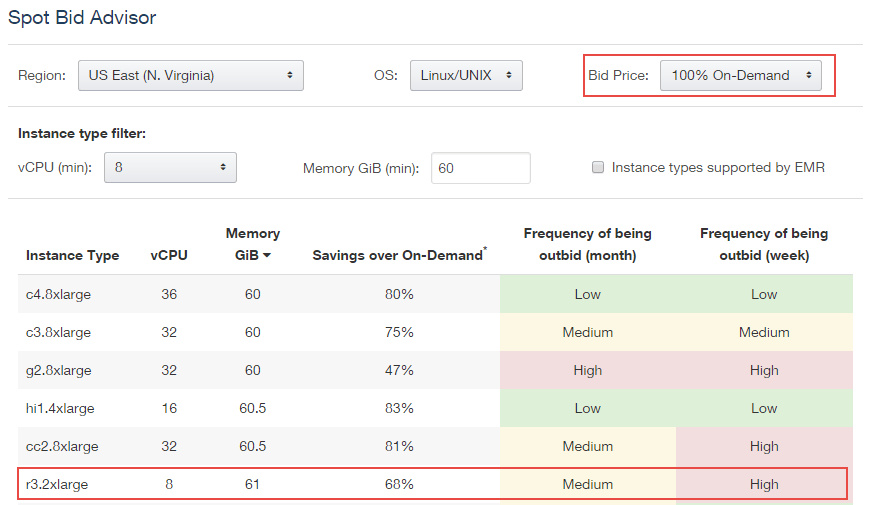
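If you export the price history instead of reading it off the chart, the same "which zone spikes most" question can be answered with a few lines of Python. This is a sketch under assumptions: the records are hypothetical, and their shape (string `SpotPrice`, `AvailabilityZone` key) is modeled on what I understand the EC2 `DescribeSpotPriceHistory` response to look like. The threshold is whatever price you consider a spike, for example your intended bid.

```python
from collections import Counter
from typing import Dict, Iterable


def count_spikes_by_zone(records: Iterable[Dict[str, str]], threshold: float) -> Counter:
    """Count price observations above `threshold` for each availability zone."""
    spikes: Counter = Counter()
    for rec in records:
        # Prices are assumed to arrive as strings, e.g. "0.1000".
        if float(rec["SpotPrice"]) > threshold:
            spikes[rec["AvailabilityZone"]] += 1
    return spikes


# Hypothetical sample data for one instance type over a week.
history = [
    {"AvailabilityZone": "us-east-1e", "SpotPrice": "0.9000"},
    {"AvailabilityZone": "us-east-1e", "SpotPrice": "0.1000"},
    {"AvailabilityZone": "us-east-1e", "SpotPrice": "1.2000"},
    {"AvailabilityZone": "us-east-1a", "SpotPrice": "0.1100"},
    {"AvailabilityZone": "us-east-1a", "SpotPrice": "0.1050"},
]

for zone, count in count_spikes_by_zone(history, threshold=0.665).most_common():
    print(zone, count)
```

Zones that never exceed the threshold simply do not appear in the result, which makes the quieter zones easy to spot by their absence.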

I also find Amazon’s Spot Bid Advisor very useful in helping me decide my bid price, based on how likely I am to be out-bid:

What’s Next

Databricks makes it simple to manage On-Demand and Spot instances within the same Spark cluster. Our users can create purely Spot clusters, purely On-Demand clusters, or hybrid clusters with just a few clicks.

Get started with a free Databricks trial.
