Do you DIY your AI? You are probably failing on security.

This is the second post in a series about Databricks security. My colleague David Cook (our CISO) laid out Databricks' approach to security in the first post. In this post, I describe our platform in detail.

DIY Platforms: A Lack of Cohesion in Security

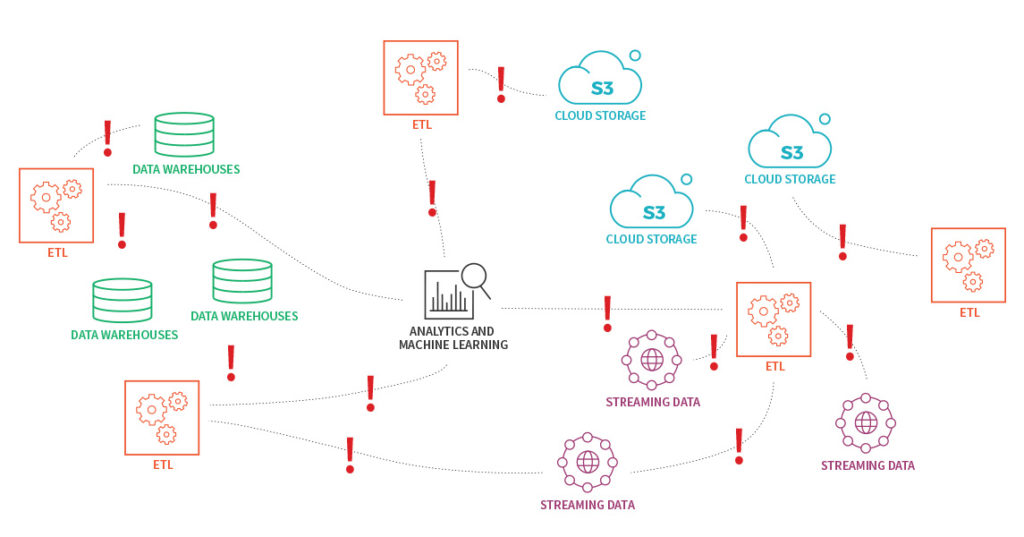

Many companies today operate on homegrown DIY big data and AI platforms composed of various open-source tools and technologies. These patchwork platforms pit data scientists against data engineers and put the entire organization at risk of a security breach. On one hand, data scientists demand the latest AI tools and prioritize speed and productivity. They see IT and security as slow and inflexible. On the other hand, data engineers are incentivized to maintain pristine data on a secure, supportable, production-ready platform. Moreover, each team requires its own toolset to do its job. These tools are often disjointed, so data workflows cannot be tracked end-to-end. As a result, data engineering and data science teams work in silos and face the following challenges:

From a productivity perspective:

- Sometimes, data is locked down on-prem with a perimeter security approach, which makes scale and elasticity hard and eventually impedes innovation.

- When teams fail to collaborate, use siloed tools, and build disjointed data workflows, it becomes virtually impossible to innovate and advance AI projects.

From a security and regulatory perspective:

- Rapidly evolving open-source toolsets are cobbled together. They are not patched, tested, or well maintained, so the potential for gaps and for errors by well-intentioned insiders is high.

- Different systems have different security paradigms, making it a challenge to replicate policies and ensure security fidelity from one toolset to the next.

- Lack of a single workspace makes it harder to get the right data to the right person while controlling who has access to what.

To overcome these obstacles, data scientists end up hiring data engineers into their teams, and data engineers do the same, busting budgets and creating duplicate functions. In the end, you’ve got teams that can’t work together, are not accountable, and blame each other for creating an untenable situation. This can significantly increase exposure to a data breach or regulatory infringement, putting companies at risk in ways they may not have even considered. I believe there’s a better way to meet the needs of data science teams than DIY AI.

Unified Analytics Platform with Security at Its Core

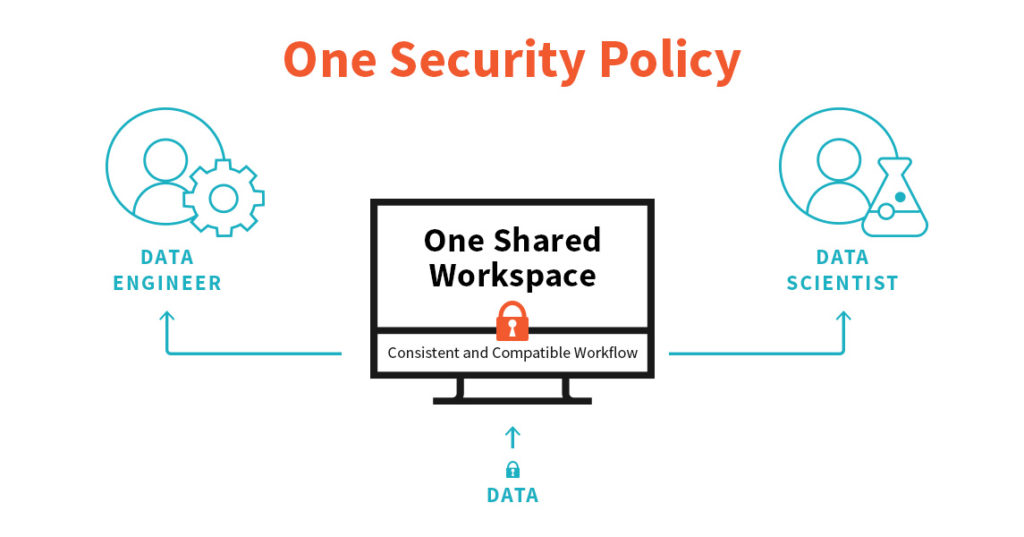

Databricks brings data engineering and data science teams together in a unified analytics platform, giving data scientists the agility they want while providing data engineers a consistent, secure, and reliable toolset. That unification is key. Unlike DIY solutions, we provide a coherent security model across the entire data workflow. You set up security once for your data framework, and it then applies to your data processing as well as your ML and AI needs.

Knock down silos while keeping data secure

Our unified analytics platform provides the security you require while enabling teams to work together to drive innovation. At the core of our approach are the following:

1. Security as a Core Design Principle - Databricks is a cloud-native platform that was designed with security as a first-class citizen from day one and is:

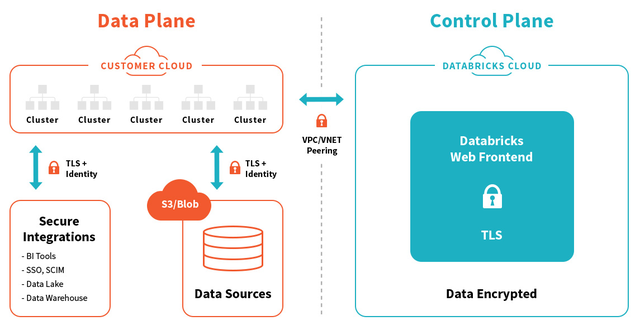

- Cloud native using security best practices - People often say that they keep important data on-prem to keep it safe. This is a dangerous misconception. Security on-prem is just as difficult as security in the cloud. In addition to the elasticity and pay-as-you-go advantages of the cloud, Databricks offers a fully managed and monitored security offering that applies cloud best practices and is run by world-leading security experts. We have built-in controls to minimize human error, including isolating data workloads, controlling access through specific VPCs/VNETs and IAM security groups, and encrypting data in transit and at rest with fine-grained access controls.

- Designed to not touch your data - Data is the business differentiator for your company. We want to ensure you have maximum control over your data to generate business value. The data stays in your cloud account, while we provide and manage the infrastructure to keep you secure and help you get maximum value from your data. With separate data and control planes, the data and the workloads reside in your cloud account, and Databricks has no direct access to them.

- Integrated with your existing processes - As a single platform with secure integrations with familiar products for SSO (Single Sign-On), data warehousing, and BI, Databricks can be easily integrated into your existing environment and processes.

2. Consistent, Reliable and Compatible Workflows - Data scientists are constantly looking for the latest software with the latest models. Even a half-percent improvement in an ML model could affect millions of dollars in revenue. DIY requires teams to integrate their own data engineering tools (Spark, Hive, etc.) and data science tools (SparkML, TensorFlow, Keras, etc.). Databricks does that work for you by providing a single unified platform with streamlined workflows. You no longer need to worry about interoperability issues. Patching and configuration are taken care of, and the whole system is pen tested and monitored by world-leading experts. This makes it easy for IT and security teams to maintain security across the entire workflow.

3. Secure and Transparent Collaboration - Databricks lets data engineering and data science teams work together in a single shared workspace. Databricks notebooks can be shared by multiple teams, with commenting and versioning much like Google Docs. Not only does this enhance collaboration, it also provides a single interface to control, track, and audit user access to data. Fine-grained access controls let you govern data not only at the bucket and file level but also at the row and column level. With this level of control, you could give database access to a wide swath of people but block out specific sensitive columns such as credit card numbers and Social Security numbers.
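To make the idea of row- and column-level control concrete, here is a minimal sketch in plain Python. It is not Databricks' API or implementation; the function `apply_access_policy`, the `MASK` value, and the sample `customers` records are all hypothetical names invented for illustration. It only shows the general pattern: filter out rows a role may not see, then mask sensitive columns in the rows that remain.

```python
from typing import Callable, Dict, Iterable, List, Set

Row = Dict[str, object]
MASK = "REDACTED"  # hypothetical placeholder shown instead of sensitive values


def apply_access_policy(
    rows: Iterable[Row],
    masked_columns: Set[str],
    row_filter: Callable[[Row], bool],
) -> List[Row]:
    """Return only the rows the caller may see, with sensitive columns masked."""
    visible = []
    for row in rows:
        if not row_filter(row):
            continue  # row-level control: skip rows outside the caller's scope
        # column-level control: replace sensitive values, keep everything else
        visible.append(
            {col: (MASK if col in masked_columns else value) for col, value in row.items()}
        )
    return visible


# Hypothetical sample data, for illustration only.
customers = [
    {"name": "Ana", "region": "EU", "credit_card": "4111-1111-1111-1111", "ssn": "123-45-6789"},
    {"name": "Bo", "region": "US", "credit_card": "5500-0000-0000-0004", "ssn": "987-65-4321"},
]

# An analyst scoped to EU customers who must never see card or Social Security numbers.
analyst_view = apply_access_policy(
    customers,
    masked_columns={"credit_card", "ssn"},
    row_filter=lambda row: row["region"] == "EU",
)
print(analyst_view)
```

The function builds new dictionaries rather than editing rows in place, so the underlying records are unchanged and different roles can be given different views of the same data.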

Conclusion

When leveraged to its full potential, data can be a true differentiator for your company. We want to empower your teams to generate business value using your preferred frameworks and libraries for AI while ensuring data security and regulatory compliance.

If you are currently working through the challenges posed by DIY AI, we can help identify security gaps in your current data analytics setup and show you how to address them more effectively with a single-platform solution.

Try It!

- Call us to find out how Databricks can improve your security posture.

- Learn more by downloading our security e-book Protecting Enterprise Data on Apache Spark.
