Announcing Databricks Runtime 5.1

We are excited to announce the release of Databricks Runtime 5.1. This release includes several new features along with performance and quality improvements. We highly recommend upgrading to Databricks Runtime 5.1 to benefit from them. Release highlights:

- Azure Active Directory Credential Passthrough to ADLS Gen1 (Preview)

- 7% overall performance gain for Azure Databricks

- New in Delta: Enhanced syntax for MERGE, isolation levels, and CONVERT TO DELTA

- Ability to install Python libraries from notebooks using the Databricks Utilities library API

- An order of magnitude performance improvement specifically for queries with range joins

Azure Databricks Improvements

- Azure Active Directory Credential Passthrough to Azure Data Lake Storage Gen1 (Preview): You can now authenticate automatically to Azure Data Lake Storage Gen1 (ADLS) from Azure Databricks clusters using the same Azure Active Directory (Azure AD) identity that you use to log into Azure Databricks. When you enable your cluster for Azure AD credential passthrough, commands that you run on that cluster will be able to read and write your data in Azure Data Lake Storage Gen1 without requiring you to configure service principal credentials for access to storage. For more information, see Enabling Azure AD Credential Passthrough to Azure Data Lake Storage Gen1 (Preview).

- Azure Data Lake Storage Gen2 Support: We have also upgraded the connector for Azure Data Lake Storage Gen2 and added the ability to mount an Azure Data Lake Storage Gen2 File System. The mount is a pointer to a Data Lake Store, so the data is never synced locally.

- Performance Improvements: Following quickly on the heels of the Databricks Runtime 5.0 release, we have once again improved our overall performance, this time by 7% for Azure Databricks, by improving the performance of reading from object stores.
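As a rough illustration of the Azure Data Lake Storage Gen2 mount mentioned above, the sketch below wraps `dbutils.fs.mount` in a small helper. It assumes service principal (OAuth) authentication, which is only one possible setup. The helper name, its parameters, and every value passed to it are hypothetical, and `dbutils` is passed in explicitly because it exists only inside a Databricks notebook. Please check the configuration keys against the documentation for your runtime version.

```python
def mount_adls_gen2(dbutils, file_system, account, mount_point,
                    client_id, client_secret, tenant_id):
    """Mount an ADLS Gen2 file system (hypothetical helper, OAuth assumed)."""
    # Hadoop ABFS settings for service principal (client credentials) auth.
    configs = {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            "https://login.microsoftonline.com/%s/oauth2/token" % tenant_id,
    }
    # abfss:// URI of the file system to mount.
    source = "abfss://%s@%s.dfs.core.windows.net/" % (file_system, account)
    # The mount is only a pointer; no data is copied to the cluster.
    dbutils.fs.mount(source=source, mount_point=mount_point,
                     extra_configs=configs)
    return source
```

In a notebook, this would be called with the built-in `dbutils` object, ideally reading the client secret from a secret scope rather than hard-coding it.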

Databricks Delta Improvements

- Extended syntax in MERGE: In order to enable writing change data (inserts, updates, and deletes) from a database to a Delta table, there is now support for multiple MATCHED clauses, additional conditions in MATCHED and NOT MATCHED clauses, and a DELETE action. There is also support for * in UPDATE and INSERT actions to automatically fill in column names (similar to * in SELECT). This makes it easier to write MERGE queries for tables with a very large number of columns. Please see the documentation for more details: Azure | AWS.

- Isolation levels in Delta tables: Databricks Delta tables can now be configured to have one of two isolation levels, Serializable and WriteSerializable. In Databricks Runtime 5.1, the default isolation level has been relaxed from Serializable to WriteSerializable to enable higher availability. Specifically, UPDATE, DELETE, and MERGE will be allowed to run concurrently with appends (from a streaming query, for example). For more information about the implications of this relaxation or to learn how to revert to previous behavior, please see the documentation: Azure | AWS.

- CONVERT TO DELTA (Preview): We are excited to share the news of a new command in preview - CONVERT TO DELTA - which you can use to convert a Parquet table to a Delta table in place, without copying any files. Please contact Databricks support for more information.
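To make the extended MERGE syntax more concrete, here is a minimal sketch of a change-data MERGE that uses a conditional MATCHED clause, a DELETE action, and `*` in the UPDATE and INSERT actions. The table names (`customers`, `customer_changes`) and the `id` and `op` columns are hypothetical. The statement is only built as a string here; the small pure-Python function next to it is our own simplified model of what the statement does, assuming at most one change row per `id`, and is not how Delta executes it. The second string shows the table property for reverting a table to the Serializable isolation level.

```python
# Hypothetical table and column names; run with spark.sql(...) in a notebook.
MERGE_SQL = """
MERGE INTO customers AS t
USING customer_changes AS s
ON t.id = s.id
WHEN MATCHED AND s.op = 'delete' THEN DELETE
WHEN MATCHED THEN UPDATE SET *
WHEN NOT MATCHED AND s.op != 'delete' THEN INSERT *
"""

# Reverts the (hypothetical) table to the stricter isolation level.
ISOLATION_SQL = """
ALTER TABLE customers
SET TBLPROPERTIES ('delta.isolationLevel' = 'Serializable')
"""

def apply_changes(target, changes):
    """Simplified model of MERGE_SQL on lists of dicts keyed by 'id'.

    Assumes each id appears at most once in `changes`.
    """
    merged = {row["id"]: dict(row) for row in target}
    for change in changes:
        key = change["id"]
        # UPDATE SET * / INSERT * only fill the target's columns,
        # so the bookkeeping column 'op' is dropped.
        row = {k: v for k, v in change.items() if k != "op"}
        if key in merged:
            if change["op"] == "delete":
                del merged[key]      # WHEN MATCHED AND ... THEN DELETE
            else:
                merged[key] = row    # WHEN MATCHED THEN UPDATE SET *
        elif change["op"] != "delete":
            merged[key] = row        # WHEN NOT MATCHED ... THEN INSERT *
    return sorted(merged.values(), key=lambda r: r["id"])
```

In a Databricks notebook, the statements would typically be submitted with `spark.sql(MERGE_SQL)` and `spark.sql(ISOLATION_SQL)`.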


Notebook-scoped Python Libraries

In Databricks Runtime 5.1, we introduce a new approach for installing Python libraries, including Eggs, Wheels, and PyPI packages, into Python notebooks using the Databricks Utilities library API. Libraries installed through this API are available only in the notebook where they are installed. For more information, see the documentation: Azure | AWS.
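A minimal sketch of a notebook-scoped install might look like the following. The helper name is hypothetical, and the package and version are example values only. `dbutils` is passed in as an argument because it is available only inside a Databricks notebook.

```python
def install_notebook_library(dbutils, package="requests", version="2.21.0"):
    """Install a PyPI package for the current notebook only (hypothetical helper)."""
    # Install from PyPI, pinned to an example version.
    dbutils.library.installPyPI(package, version=version)
    # Restart the Python process so the new library can be imported.
    # This clears existing Python state in the notebook.
    dbutils.library.restartPython()
```

In a notebook you would usually call the two `dbutils.library` methods directly in the first cell, before any other code runs, since the restart discards Python state.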

More performant range join queries

We have added optimizer rules for more efficient rewrites of range joins, speeding up this type of query by an order of magnitude. To enable the optimization, add a hint to the query. The current rewrite supports the following range join query shapes:

- Point in interval queries, that is: a.begin

- Interval overlap queries, that is: a.begin
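For illustration, the sketch below shows a hinted point-in-interval query together with a small pure-Python model of how binning can speed up such a join. The hint form (`RANGE_JOIN` with a relation name and a bin size) follows the Databricks range join documentation as we understand it; the table and column names and the bin size of 10 are hypothetical. The Python function is our own simplified model with integer values and half-open intervals, and is not a description of what the optimizer does internally.

```python
from collections import defaultdict

# Hypothetical tables `points(p)` and `ranges(start, end)`; 10 is an example bin size.
RANGE_JOIN_SQL = """
SELECT /*+ RANGE_JOIN(points, 10) */ *
FROM points JOIN ranges
  ON points.p >= ranges.start AND points.p < ranges.end
"""

def binned_point_in_interval_join(points, intervals, bin_size):
    """Return (point, (begin, end)) pairs where begin <= point < end.

    Intervals are copied into every bin they touch, so each point is
    compared only with intervals in its own bin, not with all intervals.
    """
    bins = defaultdict(list)
    for begin, end in intervals:
        for b in range(begin // bin_size, end // bin_size + 1):
            bins[b].append((begin, end))
    matches = []
    for p in points:
        # A point falls in exactly one bin, so no pair is emitted twice.
        for begin, end in bins.get(p // bin_size, ()):
            if begin <= p < end:
                matches.append((p, (begin, end)))
    return matches
```

The bin size trades off the two costs: smaller bins mean fewer false candidates per point, while larger bins mean each interval is copied into fewer bins.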

To read more about the above new features and to see the full list of improvements included in Databricks Runtime 5.1, please see the release notes:

- Databricks on Microsoft Azure: Databricks Runtime 5.1 release notes

- Databricks on Amazon Web Services: Databricks Runtime 5.1 release notes

Never miss a Databricks post

What's next?

Announcements

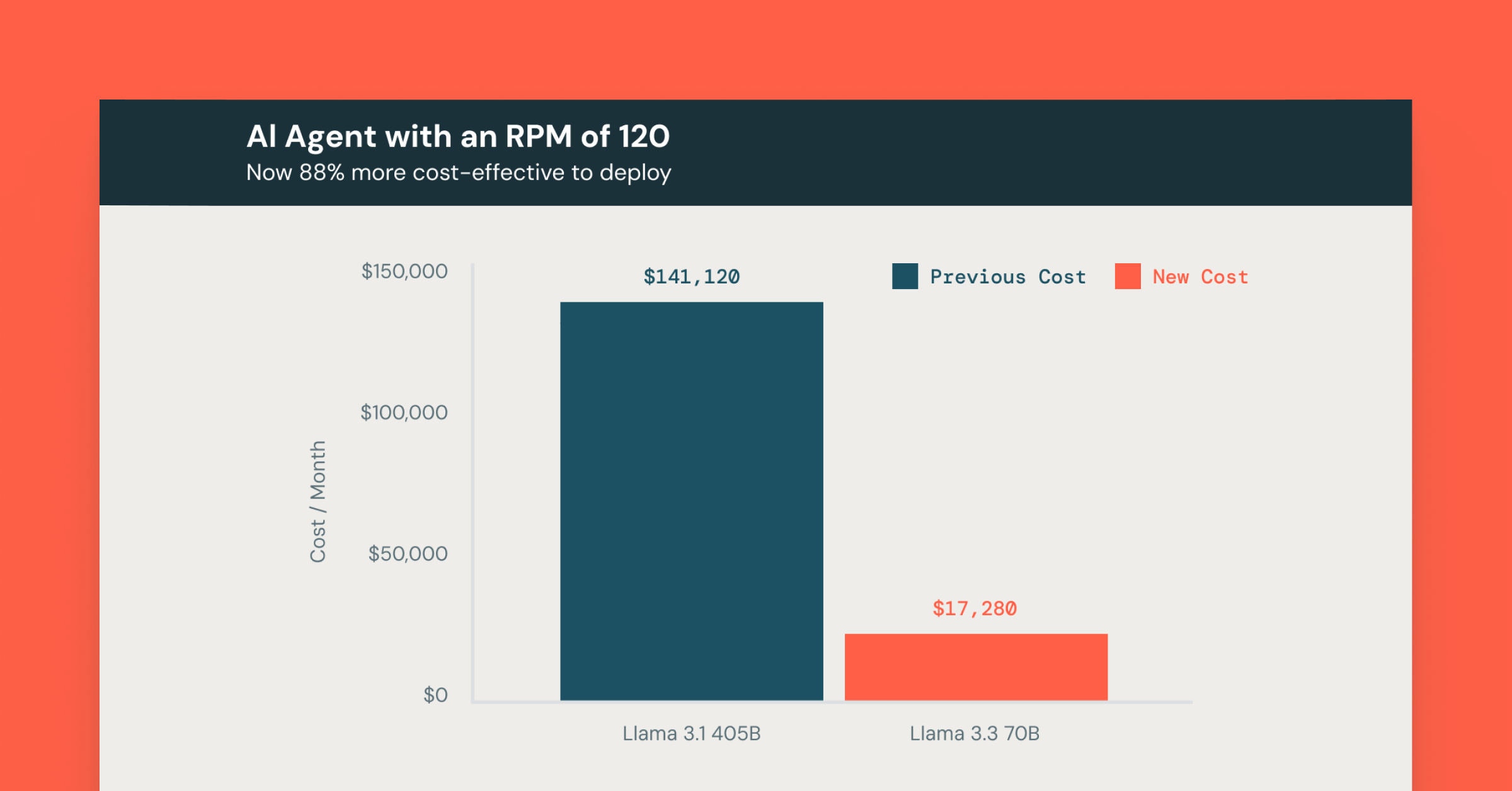

December 12, 2024/4 min read

Making AI More Accessible: Up to 80% Cost Savings with Meta Llama 3.3 on Databricks

Announcements

March 19, 2025/4 min read