Simplify Data Lake Access with Azure AD Credential Passthrough

Azure Databricks brings together the best of Apache Spark, Delta Lake, and the Azure cloud. The close partnership provides integrations with Azure services, including Azure’s cloud-based role-based access control, Azure Active Directory (AAD), and Azure’s cloud storage, Azure Data Lake Storage (ADLS).

Even with these close integrations, data access control continues to prove a challenge for our users. Customers want to control which users have access to which data and audit who is accessing what. They want a simple solution that integrates with their existing controls. Azure AD Credential Passthrough is our solution to these requests.

Azure Data Lake Storage Gen2

Azure Data Lake Storage (ADLS) Gen2, which became generally available earlier this year, is quickly becoming the standard for data storage in Azure for analytics consumption. ADLS Gen2 enables a hierarchical file system that extends Azure Blob Storage capabilities and provides enhanced manageability, security and performance.

The hierarchical file system provides granular access control to ADLS Gen2. Role-based access control (RBAC) can be used to grant role assignments on top-level resources, and POSIX-compliant access control lists (ACLs) allow for finer-grained permissions at the folder and file level. These features allow users to securely access their data within Azure Databricks using the Azure Blob File System (ABFS) driver, which is built into the Databricks Runtime.

Challenges with Accessing ADLS from Databricks

Even with the ABFS driver built into the Databricks Runtime, customers still found it challenging to access ADLS from an Azure Databricks cluster in a secure way. The primary way to access ADLS from Databricks is using an Azure AD Service Principal and OAuth 2.0, either directly or by mounting to DBFS. While this remains the ideal way to connect for ETL jobs, it has some limitations for interactive use cases:

- Accessing ADLS from an Azure Databricks cluster requires a service principal to be created with delegated permissions for each user. The credentials should then be stored in Secrets. This creates complexity for Azure AD and Azure Databricks admins.

- Mounting a filesystem to DBFS allows all users in the Azure Databricks workspace to have access to the mounted ADLS account. This requires customers to set up multiple Azure Databricks workspaces for different roles and access controls in line with their storage account access, thereby increasing complexity.

- When accessing ADLS, either directly or with mount points, users on an Azure Databricks cluster share the same identity. This means there is no audit trail of which user accessed which data in cloud-native logging such as Storage Analytics.
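For reference, the service principal approach described above can be sketched as follows. This is a minimal illustration, not the only way to configure access: the helper name `service_principal_abfs_conf` and its arguments are hypothetical, while the configuration keys are the OAuth settings read by the ABFS driver. Every user of the cluster ends up sharing the one identity configured here.

```python
def service_principal_abfs_conf(storage_account, client_id, client_secret, tenant_id):
    """Build the Spark settings for OAuth 2.0 client-credentials access to ADLS Gen2.

    The function name and arguments are illustrative; the keys are the
    ABFS driver's per-account OAuth settings.
    """
    suffix = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


# In a Databricks notebook (where `spark` and `dbutils` are predefined), the
# secret would typically come from a secret scope rather than a literal:
#
#   secret = dbutils.secrets.get(scope="<scope-name>", key="<key-name>")
#   conf = service_principal_abfs_conf("<account>", "<app-id>", secret, "<tenant-id>")
#   for key, value in conf.items():
#       spark.conf.set(key, value)
```

Because the credentials belong to the service principal rather than to the person running the command, storage logs record the service principal for every request.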

To solve this, we looked into how we could expand our seamless Single Sign-on with Azure AD integration to reach ADLS.

Getting Started with Azure AD Credential Passthrough

Azure AD Credential Passthrough allows you to authenticate seamlessly to Azure Data Lake Storage (both Gen1 and Gen2) from Azure Databricks clusters using the same Azure AD identity that you use to log into Azure Databricks. Your data access is controlled via the ADLS roles and ACLs you have already set up and can be analyzed in Azure’s Storage Analytics.

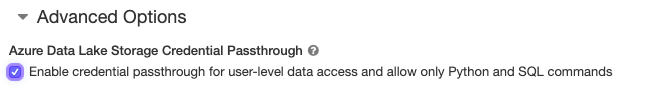

When you enable your cluster for Azure AD Credential Passthrough, commands that you run on that cluster will be able to read and write your data in ADLS without requiring you to configure service principal credentials for access to storage. In order to use Credential Passthrough, just enable the new “Azure Data Lake Storage Credential Passthrough” cluster configuration.
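As a rough sketch of what this looks like in a notebook, the snippet below builds an `abfss://` path and reads from it with no credential configuration at all. The helper names, the container `raw`, the account `mydatalake`, and the file path are all hypothetical; the example assumes it runs on a Passthrough-enabled cluster where `spark` is the notebook's predefined session.

```python
def abfss_uri(container, storage_account, path=""):
    """Build an ABFS (TLS) URI for a path in an ADLS Gen2 filesystem."""
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{path.lstrip('/')}"


def read_csv_as_current_user(spark, uri):
    """Read a CSV file; on a Passthrough cluster the request is made with the
    signed-in user's Azure AD identity, so no service principal settings are needed."""
    return spark.read.option("header", "true").csv(uri)


# Hypothetical usage in a notebook attached to a Passthrough-enabled cluster:
#
#   df = read_csv_as_current_user(spark, abfss_uri("raw", "mydatalake", "sales/2019.csv"))
#   df.show()
```

If the signed-in user lacks permission on the path, the read is expected to fail with an authorization error from storage rather than fall back to a shared identity.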

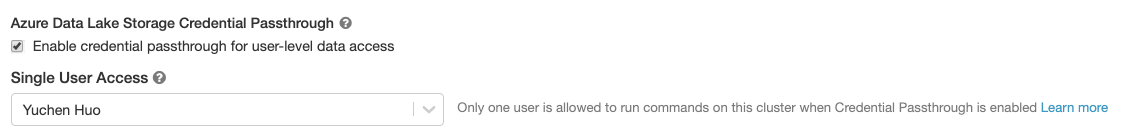

Passthrough is available on both High Concurrency and Standard clusters. Currently, Python and SQL are supported on High Concurrency clusters, which isolate commands run by different users to ensure that credentials cannot be leaked across different sessions. This allows multiple users to share one Passthrough cluster and access ADLS using their own identities.

On Standard clusters, Python, SQL, Scala and R are all supported and users are isolated by restricting the cluster to a single user.
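The language support described above can be summarized as a small lookup. This is only a restatement of the matrix at the time of writing, with hypothetical names (`SUPPORTED_LANGUAGES`, `passthrough_supports`); it is not a Databricks API.

```python
# Languages supported with Credential Passthrough, per cluster mode,
# as described in this post. The names here are illustrative only.
SUPPORTED_LANGUAGES = {
    "high_concurrency": {"python", "sql"},          # many users, isolated per session
    "standard": {"python", "sql", "scala", "r"},    # restricted to a single user
}


def passthrough_supports(cluster_mode, language):
    """Return True if the language can be used with Passthrough on this cluster mode."""
    return language.lower() in SUPPORTED_LANGUAGES[cluster_mode]


print(passthrough_supports("high_concurrency", "Scala"))
print(passthrough_supports("standard", "Scala"))
```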

Powerful, Built-in Access Control

Azure AD Passthrough allows for powerful data access controls by supporting both RBAC and ACLs for ADLS Gen2. Users can be granted access to the whole storage account through RBAC, or to a single filesystem, folder, or file using ACLs. Passthrough ensures a user can only access the data that they have previously been granted access to via Azure AD in ADLS Gen2.
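To make the ACL side concrete, here is a deliberately simplified sketch of how a POSIX-style access ACL string could be checked for a named user. It assumes the short-form `scope:qualifier:permissions` notation (for example `user:<object-id>:r-x`), handles only named-user, mask, and other entries, and ignores the owning user, groups, default ACLs, and RBAC role assignments, all of which the real service also evaluates. The function names and the object ID are hypothetical.

```python
def parse_acl(acl):
    """Parse 'scope:qualifier:perms' entries into a dict keyed by (scope, qualifier)."""
    entries = {}
    for entry in acl.split(","):
        scope, qualifier, perms = entry.strip().split(":")
        entries[(scope, qualifier)] = perms
    return entries


def named_user_can(acl, object_id, permission):
    """Simplified check: may the named user perform 'r', 'w', or 'x'?

    Named-user entries are limited by the mask; users without an entry
    fall through to 'other'. Owner and group entries are ignored here.
    """
    entries = parse_acl(acl)
    index = "rwx".index(permission)
    named = entries.get(("user", object_id))
    if named is None:
        return entries.get(("other", ""), "---")[index] == permission
    mask = entries.get(("mask", ""), "rwx")
    return named[index] == permission and mask[index] == permission


# Hypothetical ACL on a folder: one analyst is granted read and execute,
# but the mask limits named entries to read only.
acl = "user::rwx,user:1111-aaaa:r-x,group::r-x,mask::r--,other::---"
print(named_user_can(acl, "1111-aaaa", "r"))  # read is granted and allowed by the mask
print(named_user_can(acl, "1111-aaaa", "x"))  # execute is granted but removed by the mask
```

With Passthrough, it is the signed-in user's own object ID that storage evaluates against entries like these, which is why permissions set once in ADLS carry over to Databricks unchanged.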

Since Passthrough identifies the individual user, auditing is available by simply enabling ADLS logging via Storage Analytics. All ADLS access will be tied directly to the user via the OAuth user ID in the Storage Analytics logs.

Conclusion

Azure AD Credential Passthrough provides end-to-end security from Azure Databricks to Azure Data Lake Storage. This feature provides seamless access control over your data with no setup beyond enabling it on the cluster. You can safely let your analysts, data scientists, and data engineers use the powerful features of the Databricks Unified Analytics Platform while keeping your data secure!


October 24, 2019/4 min read
