Integrating large-scale Genomic Variation and Annotation Data with Glow

Genomic annotations augment variant data by providing context for each change in the genome. For example, annotations help answer questions like does this mutation cause a change in the protein-coding sequence of a gene? If so, how does it change the protein? Or is the mutation in a low information part of the genome, also known as “junk DNA”? And everything in between. Recently (since release 0.4), Glow, an open-source toolkit for large-scale genomic analysis, introduced the capability to ingest genomic annotation data from the GFF3 (Generic Feature Format Version 3) flat-file format. GFF3 was proposed by the Sequence Ontology Project in 2013 and has become the de-facto format for genome annotation. This format is widely used by genome browsers and databases such as NCBI RefSeq and GenBank. GFF3 is a 9-column tab-separated text format that typically carries the majority of the annotation data in the ninth column. This column is called attributes and stores the annotations as a semi-colon separated list of csv data source, the resulting DataFrame is unwieldy for query and data manipulation of annotation data, because the whole list of attribute tag-value pairs for each sequence will appear as a single semicolon-separated string in the attributes column of the DataFrame.

Glow’s new and flexible gff Spark data source addresses this challenge. While reading the GFF3 file, the gff data source parses the attributes column of the file to create an appropriately typed column for each tag. In each row, this column will contain the value corresponding to that tag in that row (or null if the tag does not appear in the row). Consequently, all tags in the GFF3 attributes column will have their own corresponding column in the Spark DataFrame, making annotation data query and manipulation much easier.

Ingesting GFF3 Annotation Data

Like any other Spark data source, reading GFF3 files using Glow's gff data source can be done in a single line of code. Figure 1 shows how we can ingest the annotations of the Homo Sapiens genome assembly GRCh38.p13 from a GFF3 file (obtained from RefSeq) as shown below. Here, we have also filtered the annotations to chromosome 22 in order to use the resulting annotations_df DataFrame in continuation of our example. The annotations_df alias is for the same purpose as well.

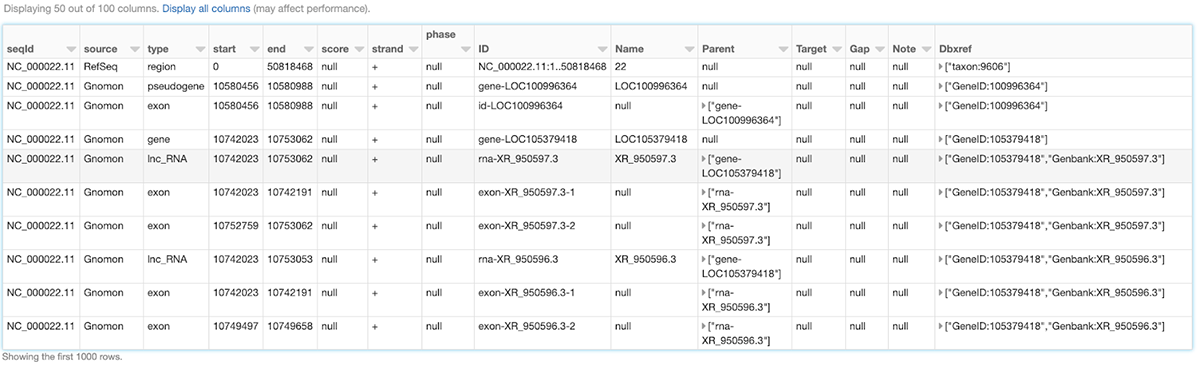

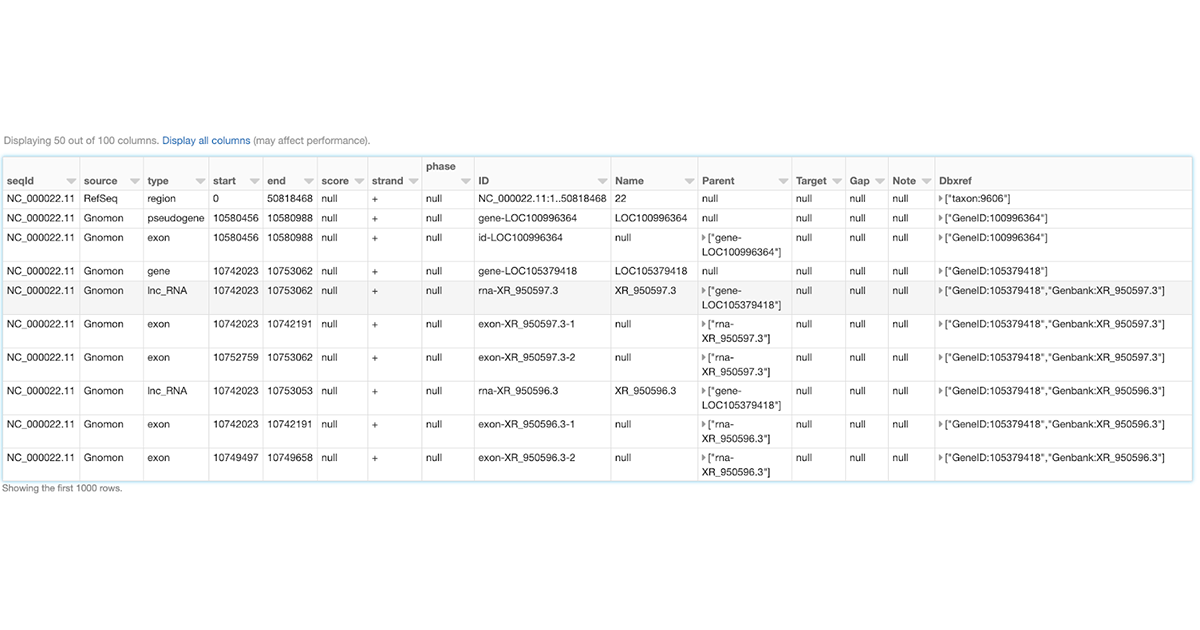

Figure 1: A small section of the annotations_df DataFrame

In addition to reading uncompressed .gff files, the gff data source supports all compression formats supported by Spark's csv data source, including .gz and .bgz. It is strongly recommended to use splittable compression formats like .bgz instead of .gz to enable parallelization of the read process.

Schema

Let us have a closer look at the schema of the resulting DataFrame, which was automatically inferred by Glow's gff data source:

This schema has 100 fields (not all shown here). The first eight fields (seqId, source, type, start, end, score, strand, and phase), here referred to as the "base" fields, correspond to the first eight columns of the GFF3 format cast in the proper data types. The rest of the fields in the inferred schema are the result of parsing the attributes column of the GFF3 file. Fields corresponding to any "official" tag (those referred to as "tags with a pre-defined meaning" in the GFF3 format description), if present in the GFF3 file, are automatically assigned the appropriate data types. The official fields are then followed by the "unofficial" fields (fields corresponding to any other tag) in alphabetical order. In the example above, ID, Name, Parent, Target, Gap, Note, Dbxref, and Is_circular are the official fields, and the rest are the unofficial fields. The gff data source discards the comments, directives, and FASTA lines that may be in the GFF3 file.

As it is not uncommon for the official tags to be spelled differently in terms of letter case and underscore usage within and/or across different GFF3 files the gff data source is designed to be insensitive to letter case and underscore when extracting official tags from the attributes field. For example, the official tag Dbxref will be correctly extracted as an official field even if it appears as dbxref or dbx_ref in the GFF3 file. Please see Glow documentation for more details.

Like other Spark data sources, Glow's gff data source is also able to accept a user-specified schema through the .schema command. The data source behavior in this case is also designed to be quite flexible. More specifically, the fields (and their types) in the user-specified schema are treated as the list of fields, whether base, official, or unofficial, to be extracted from the GFF3 file (and cast to the specified types). Please see the Glow documentation for more details on how user-specified schemas can be used.

Example: Gene Transcripts and Transcript Exons

With the annotation tags extracted as individual DataFrame columns using Glow's gff data source, query and data preparation over genetic annotations becomes as easy as writing common Spark commands in the user's API of choice. As an example, here we demonstrate how simple queries can be used to extract data regarding hierarchical grouping of genomic features from the annotations_df created earlier.

One of the main advantages of the GFF3 format compared to older versions of GFF is the improved presentation of feature hierarchies (see the GFF3 format description for more details). Two examples of such hierarchies are:

- Transcripts of a gene (here, gene is the "parent" feature and its transcripts are the "children" features).

- Exons of a transcript (here, the transcript is the parent and its exons are the children).

In the GFF3 format, the parents of the feature in each row are identified by the value of the parent tag in the attributes column, which includes the ID(s) of the parent(s) of the row. Glow's gff data source extracts this information as an array of parent ID(s) in a column of the resulting DataFrame called parent.

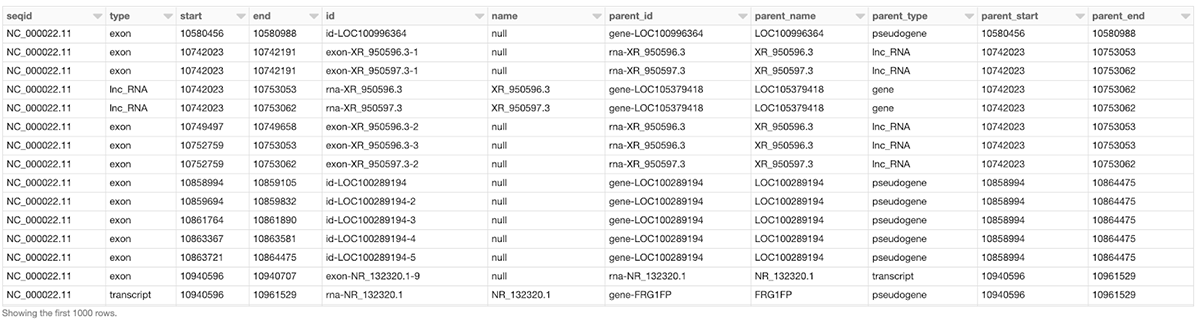

Assume we would like to create a DataFrame, called gene_transcript_df, which, for each gene on chromosome 22, provides some basic information about the gene and all its transcripts. As each row in the annotations_df of our example has at most a single parent, the parent_child_df DataFrame created by the following query will help us in achieving our goal. This query joins annotations_df with a subset of its own columns on the parent column as the key. Figure 2 shows a small section of the parent_child_df dataframe.

Figure 2: A small section of the parent_child_df DataFrame

Having the parent_child_df DataFrame, we can now write the following simple function, called parent_child_summary, which, given this DataFrame, the parent type, and the child type, generates a DataFrame containing basic information on each parent of the given type and all its children of the given type.

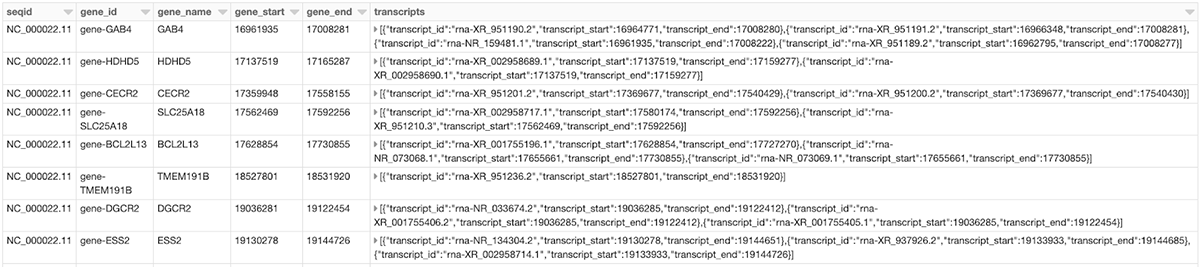

Now we can generate our intended gene_transcript_df DataFrame, shown in Figure 3, with a single call to this function:

gene_transcript_df = parent_child_summary(parent_child_df, 'gene', 'transcript')

Figure 3: A small section of the gene_transcript_df DataFrame.

In each row of this DataFrame, the transcripts column contains the ID, start and end of all transcripts of the gene in that row as an array of structs.

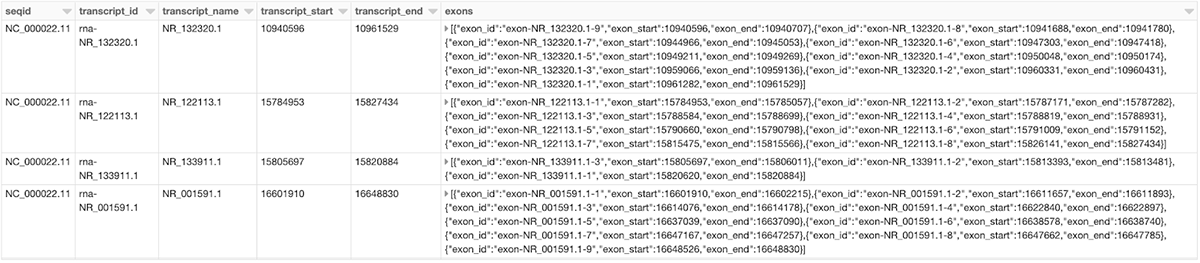

The same function can now be used to generate any parent-child feature summary. For example, we can generate the information of all exons of each transcript on chromosome 22 with another call to the parent_child_summary function as shown below. Figure 4 shows the generated transcript_exon_df DataFrame.

transcript_exon_df = parent_child_summary(parent_child_df, 'transcript', 'exon')

Figure 4: A small section of the transcript_exon_df DataFrame

Example Continued: Integration with Variant Data

Glow has data sources to ingest variant data from common flat file formats such as VCF, BGEN, and PLINK. Combining Glow's variant data sources with the new gff data source, users can seamlessly annotate their variant DataFrames by joining them with annotation DataFrames.

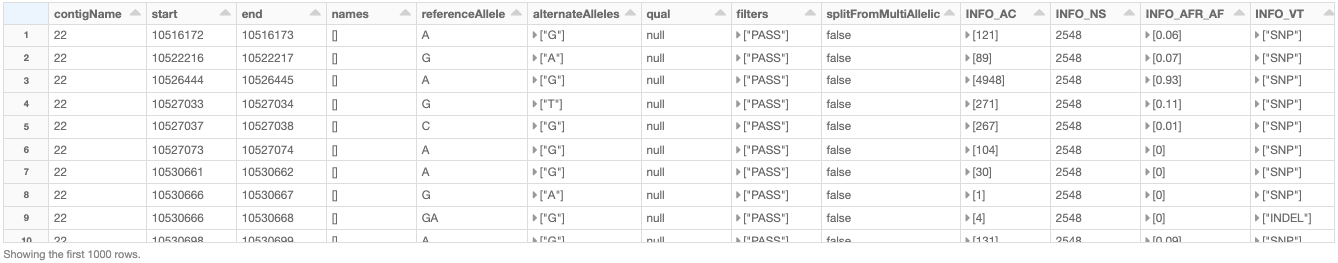

As an example, let us load the chromosome 22 variants of the 1000 Genome Project (on GRCh38 genome assembly) from a VCF file (obtained from the project's ftp site). Figure 5 shows the resulting variants_df.

Figure 5: A small section of the variants_df DataFrame

Figure 6 shows a DataFrame which, for each variant on a gene on chromosome 22, provides the information of the variant as well as the exon, transcript, and gene on which the variant resides. This is computed using the following query with two joins. Note that the first two exploded DataFrames can also be constructed directly from parent_child_df. Here, since we had already defined gene_transcript_df and transcript_exon_df, we generated these exploded DataFrames simply by applying the explode function followed by Glow's expand_struct function on them.

Figure 6: A small section of the variant_exon_transcript_gene_df DataFrame

Try Glow!

Glow is installed in the Databricks Genomics Runtime (Azure | AWS) and is optimized for improved performance when using cloud computing to analyze large genomics datasets. Learn more about our genomics solutions and how we’re helping to further human and agricultural genome and other genetic research and enable advances like population-scale next-generation sequencing in the Databricks Unified Analytics Platform for Genomics and try out a preview today. TRY THE NOTEBOOK!

Never miss a Databricks post

Sign up

What's next?

Data Science and ML

October 1, 2024/5 min read

Build Compound AI Systems Faster with Databricks Mosaic AI

Healthcare & Life Sciences

November 14, 2024/2 min read