Building a Lakehouse Faster on AWS With Databricks: Announcing Our New Pay-as-You-Go Offering

As the need for data and AI applications accelerates, customers need a faster way to get started with their data lakehouse. The Databricks Lakehouse Platform was built to be simple and accessible, enabling organizations across industries to quickly reap the benefits from all of their data. But we’re always looking for ways to accelerate the path to Lakehouse even more. Today, Databricks is launching a pay-as-you-go offering for Databricks on AWS, which lets you use your existing AWS account and infrastructure to get started.

Databricks initially launched on AWS, and we now have thousands of joint customers, including Comcast, Amgen, Edmunds and many more. Our Lakehouse architecture accelerates innovation and processes exabytes of data every day. This new pay-as-you-go offering builds on recent investments in our AWS partnership, and we’re thrilled to help our customers drive new business insights.

Building a Lakehouse on AWS just got easier

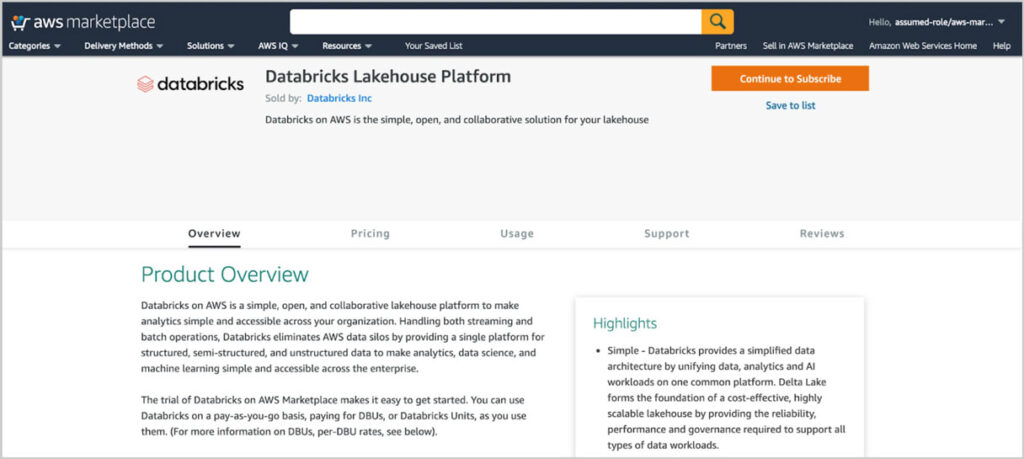

Our new pay-as-you-go offering on AWS Marketplace makes building a lakehouse even simpler, with fewer steps from setup to billing, and gives AWS customers seamless integration between their AWS configuration and Databricks. Benefits include:

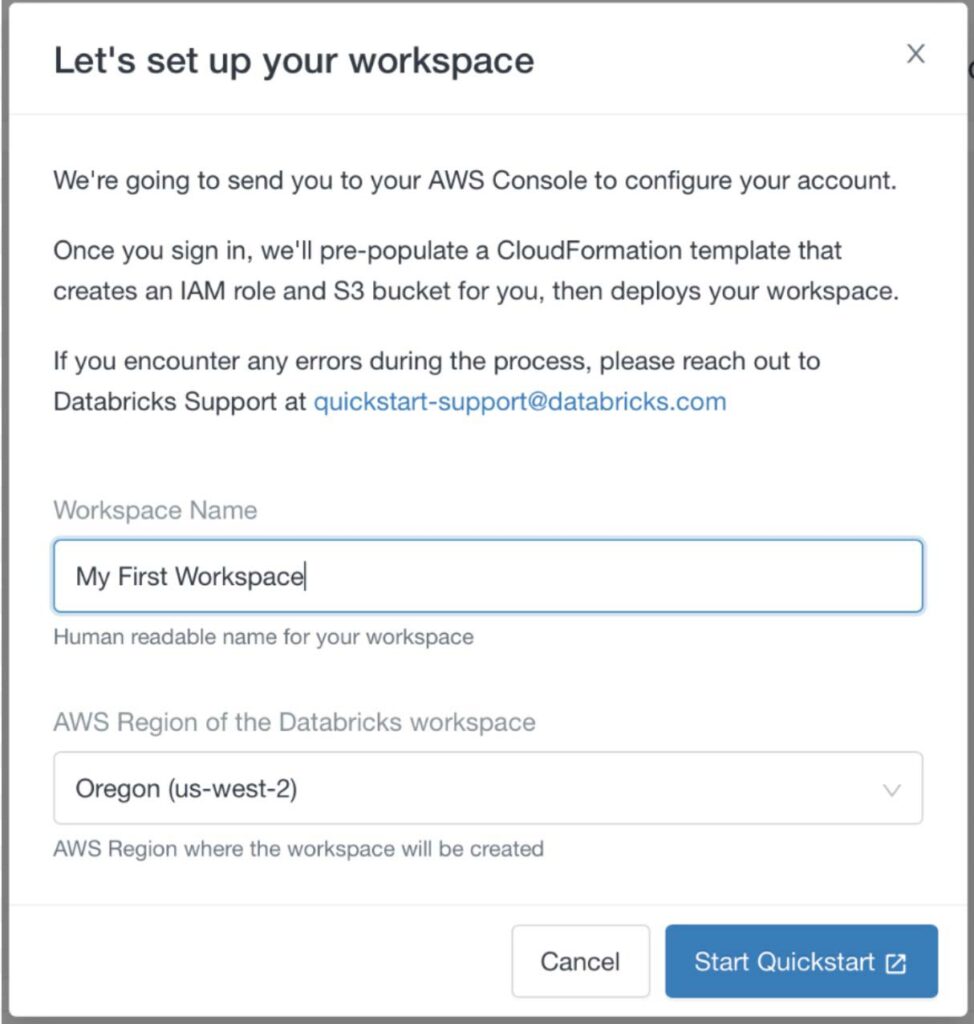

Setup in Just a Few Clicks: No need to create a whole new account. Now, customers can use their existing AWS credentials to add a Databricks subscription directly from their AWS account.

Smoother Onboarding: Once set up using AWS credentials, all account settings and roles are preserved with Databricks pay-as-you-go, enabling customers to get started right away with building their Lakehouse.

Consolidated Admin & Billing: AWS customers pay only for the resources they use and can apply their Databricks usage toward their existing EDP (Enterprise Discount Program) commitment, providing greater flexibility and scale to build a lakehouse on AWS that adapts to their needs.

Take it for a test drive: Existing AWS customers can also sign up for a free 14-day trial of Databricks from the AWS Marketplace and will be able to consolidate billing and payment under their existing AWS management account.

Simplicity combined with performance

This announcement builds on recent enhancements to Databricks on AWS designed to bring flexibility and choice to customers, with the best price/performance possible.

Last month, we introduced the Public Preview of Databricks support for AWS Graviton2-based Amazon Elastic Compute Cloud (Amazon EC2) instances. Graviton processors deliver exceptional price-performance for workloads running in EC2. When used with Photon, Databricks’ high-performance query engine, this gives performance a whole new meaning. Our Engineering team ran benchmark tests and discovered that Graviton2-based Amazon EC2 instances can deliver 3x better price-performance than comparable Amazon EC2 instances for your data lakehouse workloads.
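To make "3x better price-performance" concrete, here is a minimal sketch of how such a ratio can be computed for a fixed workload: the cost of a run is its runtime multiplied by the instance's hourly price, and the ratio compares the baseline cost to the candidate cost. The function names and every number below are hypothetical, chosen only to keep the arithmetic easy to follow. They are not the figures or the methodology from our benchmark.

```python
def cost_per_run(runtime_hours: float, hourly_price_usd: float) -> float:
    """Total cost of one run of a fixed workload: runtime times hourly price."""
    return runtime_hours * hourly_price_usd


def price_performance_ratio(baseline_cost: float, candidate_cost: float) -> float:
    """How many times cheaper the candidate is for the same amount of work."""
    if candidate_cost <= 0:
        raise ValueError("candidate_cost must be positive")
    return baseline_cost / candidate_cost


# Hypothetical inputs (assumptions for illustration, not measured results).
baseline = cost_per_run(runtime_hours=6.0, hourly_price_usd=1.00)   # 6.00 USD
candidate = cost_per_run(runtime_hours=4.0, hourly_price_usd=0.50)  # 2.00 USD

ratio = price_performance_ratio(baseline, candidate)
print(f"{ratio:.1f}x better price-performance")  # prints "3.0x better price-performance"
```

In this illustration the improvement comes from two factors at once: the workload finishes sooner and each instance-hour costs less. Either factor alone would produce a smaller ratio.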

Our customers are our proof point

We’ve been working closely with AWS to deliver enhancements and go-to-market strategies that serve a common goal: helping our customers make a big impact with all of their data while reducing the complexities, cost and limitations of traditional data architectures. Our pay-as-you-go offering on AWS Marketplace and new support for Graviton are milestones in a long-term journey.

In honor of our partnership, we wanted to share some of our favorite joint customer stories that showcase the value of Lakehouse on AWS!

Comcast transforms home entertainment with voice, data and AI: Comcast connects millions of customers to personalized experiences, but previously they struggled with massive data, fragile data pipelines, and poor data science collaboration. With Databricks and AWS, they can build performant data pipelines for petabytes of data and easily manage the lifecycle of hundreds of models to create an award-winning viewer experience using voice recognition and ML. Read the full story here.

StrongArm preventatively reduces workplace injuries with data-driven insights: Industrial injury is a big problem that can have significant cost implications. StrongArm’s goal is to capture every relevant data point (roughly 1.2 million data points per day, per person) to predict injuries and prevent these runaway costs. These large volumes of time-series data made it hard to build reliable and performant ETL pipelines at scale and required significant resources. StrongArm turned to Databricks, with AWS as their cloud provider, and can now bring new insights to clients faster, which means important injury prevention changes are made sooner. Read the full story here.

Edmunds democratizes data access for impactful data team collaboration: Edmunds removed data silos to ensure the inventory of vehicle listings on their website is accurate and up to date, improving overall customer satisfaction. With Databricks and AWS, they improved operational efficiencies resulting in millions of dollars in savings, and improved reporting speed by reducing processing time by 60 percent, or an average of 3-5 hours per week for the engineering team. Read the full story here.

Get started

We’re excited to further strengthen our AWS offering with Databricks’ pay-as-you-go experience on AWS Marketplace. To get started, visit the Databricks pay-as-you-go listing on AWS Marketplace.

