Use Databricks from anywhere with Databricks Connect “v2”

We are thrilled to announce the public preview of Databricks Connect "v2", which enables developers to use the power of Databricks from any application, running anywhere.

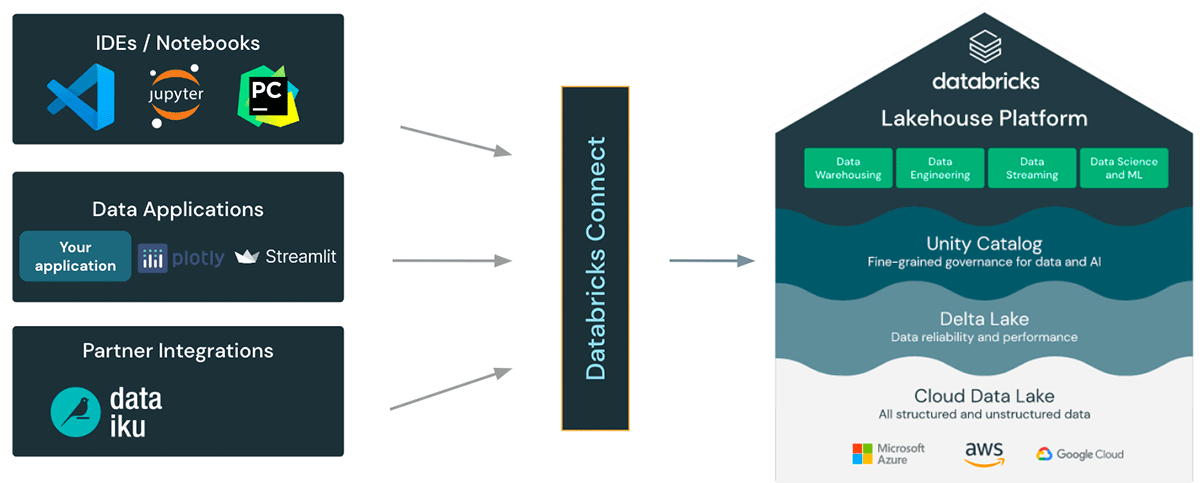

Until today, there was no easy way to connect remotely to Databricks from languages other than SQL. We have now made it easy: users simply embed the Databricks Connect library in their applications and connect to their Databricks Lakehouse!

Databricks Connect unlocks several new capabilities and use cases for data practitioners. Developers can use their IDE of choice to interactively develop and debug their code in any Databricks workspace. Partners can easily integrate with Databricks and use the full capabilities of the Databricks Lakehouse. And anyone can build applications on top of Databricks with just a few lines of code!

Built on open-source Spark Connect

Starting with DBR 13, Databricks Connect is built on open-source Spark Connect. Spark Connect introduces a decoupled client-server architecture for Apache Spark™ that allows remote connectivity to Spark clusters, using the DataFrame API and unresolved logical plans as the protocol. In other words, the client only describes the query; analysis, optimization and execution happen on the cluster. With this "v2" architecture based on Spark Connect, Databricks Connect becomes a thin client that is simple to use! It can be embedded anywhere to connect to Databricks: in IDEs, notebooks and any application, allowing customers and partners alike to build new interactive user experiences on top of their Databricks Lakehouse!
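To make the "unresolved logical plan" idea concrete, here is a toy, pure-Python sketch of the client/server split. It is not the Spark Connect protocol: real Spark Connect encodes plans as Protocol Buffers and sends them over gRPC, and every class and function below (`Plan`, `ToyDataFrame`, `read_table`, `toy_server_execute`) is hypothetical. What it illustrates is that DataFrame calls on the client only build a plan, while table lookup and execution happen on the server.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Plan:
    """One node of a hypothetical, simplified unresolved logical plan."""
    op: str
    args: Dict[str, Any] = field(default_factory=dict)
    child: Optional["Plan"] = None

    def to_dict(self) -> Dict[str, Any]:
        node = {"op": self.op, "args": self.args}
        if self.child is not None:
            node["child"] = self.child.to_dict()
        return node


class ToyDataFrame:
    """Client side: each method only wraps the current plan in a new node."""

    def __init__(self, plan: Plan):
        self._plan = plan

    def filter_min(self, column: str, min_value: float) -> "ToyDataFrame":
        return ToyDataFrame(Plan("filter", {"column": column, "min": min_value}, self._plan))

    def select(self, *columns: str) -> "ToyDataFrame":
        return ToyDataFrame(Plan("project", {"columns": list(columns)}, self._plan))

    def serialize(self) -> str:
        # Stand-in for the protobuf message a real Spark Connect client sends.
        return json.dumps(self._plan.to_dict())


def read_table(name: str) -> ToyDataFrame:
    # The client does not check that the table exists: the plan stays unresolved.
    return ToyDataFrame(Plan("read", {"table": name}))


def toy_server_execute(payload: str, tables: Dict[str, List[Dict[str, Any]]]) -> List[Dict[str, Any]]:
    """Server side: resolve table names, then evaluate the plan bottom-up."""

    def run(node: Dict[str, Any]) -> List[Dict[str, Any]]:
        op, args = node["op"], node["args"]
        if op == "read":
            return list(tables[args["table"]])  # name resolution happens here
        rows = run(node["child"])
        if op == "filter":
            return [r for r in rows if r[args["column"]] >= args["min"]]
        if op == "project":
            return [{c: r[c] for c in args["columns"]} for r in rows]
        raise ValueError(f"unknown op: {op}")

    return run(json.loads(payload))


# Usage: the client ships only the serialized plan; the server owns the data.
sample = {"trips": [{"trip_distance": 1.2, "fare_amount": 7.0},
                    {"trip_distance": 5.5, "fare_amount": 19.5}]}
payload = read_table("trips").filter_min("trip_distance", 2.0).select("fare_amount").serialize()
print(toy_server_execute(payload, sample))  # [{'fare_amount': 19.5}]
```

Because the client never touches the data, it can stay small and be embedded almost anywhere, which is the property the thin Databricks Connect client relies on.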

Building Interactive Data Apps on Databricks with a few lines of code

Just like a JDBC driver, the Databricks Connect library can be embedded in any application to interact with Databricks.

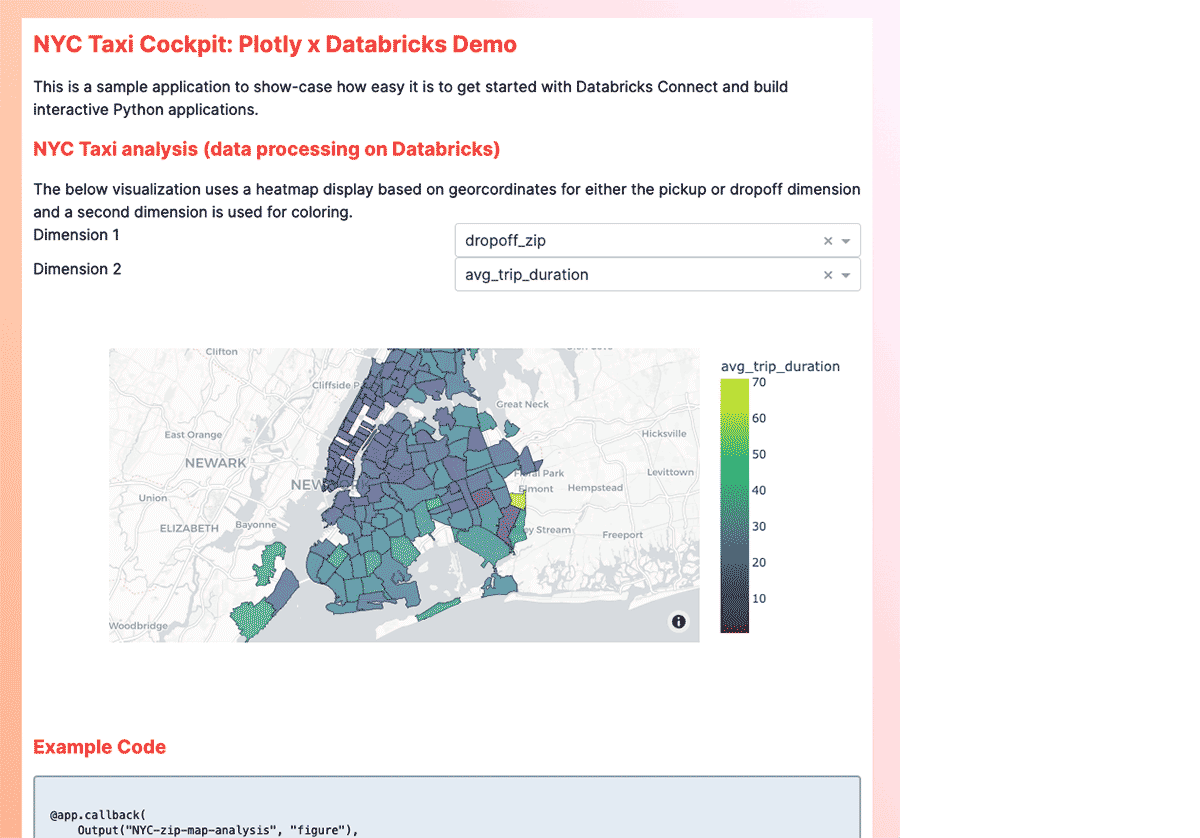

For example, you can build interactive data apps based on frameworks such as Plotly or Streamlit with just a few lines of code. We built an example data application to interactively query and visualize NYC taxi trips based on the Databricks NYC Taxi dataset (GitHub project).

To get started, you can retrieve NYC trip data from Databricks with Databricks Connect and visualize it in a Dash app.
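A minimal sketch of such an app is shown below. It assumes `databricks-connect>=13.0`, `dash` and `plotly` are installed, that the connection to a cluster is already configured (for example through environment variables or a configuration profile), and that the workspace exposes the `samples.nyctaxi.trips` sample table with `trip_distance` and `fare_amount` columns. The function names `load_trips` and `create_app` are illustrative and not taken from the GitHub project; the imports sit inside the functions so the module can be imported without a cluster.

```python
def load_trips(limit: int = 5000):
    """Fetch a small sample of NYC taxi trips from Databricks as a pandas DataFrame."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # Imported lazily: requires databricks-connect>=13.0 and a configured connection.
    from databricks.connect import DatabricksSession

    spark = DatabricksSession.builder.getOrCreate()
    return (
        spark.read.table("samples.nyctaxi.trips")  # assumed sample table
        .select("trip_distance", "fare_amount")
        .limit(limit)  # runs on the cluster; at most `limit` rows reach the app
        .toPandas()
    )


def create_app(trips):
    """Build a one-page Dash app that plots fare against trip distance."""
    import plotly.express as px
    from dash import Dash, dcc, html

    app = Dash(__name__)
    app.layout = html.Div(
        [
            html.H1("NYC Taxi Trips"),
            dcc.Graph(figure=px.scatter(trips, x="trip_distance", y="fare_amount")),
        ]
    )
    return app


# Usage, with a cluster available:
# create_app(load_trips()).run(debug=True)
```

Keeping the Databricks query in its own function makes it easy to swap in a cached or mocked DataFrame while iterating on the layout.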

Interactive development and debugging from any IDE

IDEs let developers apply software engineering best practices to large codebases, including source code control, modular code layouts, refactoring support, and integrated unit testing. Databricks Connect lets developers interactively develop and debug their code on Databricks clusters using the IDE's native run and debug functionality, helping them write code more efficiently and with higher quality.

"Databricks Connect 'v2' simplifies and improves how Shell's data engineers and data scientists interact with their Databricks Environments and Unity Catalog. It speeds up developing Spark code in users' preferred integrated development environments (IDEs) and enables them to debug faster and easier by stepping through each line of code. Due to the simplicity of installation of DB Connect 'v2', even more exciting are the possibilities it enables to use Spark from anywhere, whether that be on edge devices needing to offload parts of an AI workload to Databricks, or incorporating the scalability of Databricks within business users' everyday tools." - Bryce Bartmann, Chief Digital Technology Advisor at Shell

The new Databricks VS Code Extension uses Databricks Connect to provide built-in debugging of user code on Databricks. Databricks Connect can also be used from any other IDE. Developers simply install the library with pip install 'databricks-connect>=13.0' and configure the connection string for their Databricks cluster!
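One way to set up that connection from a script is sketched below. It assumes, as the Databricks Connect 13.x documentation describes, that the client can read a Spark Connect URL of the form `sc://<workspace-host>:443/;token=<token>;x-databricks-cluster-id=<cluster-id>` from the `SPARK_REMOTE` environment variable. The `DATABRICKS_HOST`, `DATABRICKS_TOKEN` and `DATABRICKS_CLUSTER_ID` variable names and the helpers `build_spark_remote` and `connect_from_env` are assumptions for illustration; check the documentation for the configuration options your client version supports.

```python
import os
from urllib.parse import urlparse


def build_spark_remote(host: str, token: str, cluster_id: str) -> str:
    """Assemble a Spark Connect URL for a Databricks cluster.

    `host` may be a full workspace URL ("https://...") or a bare hostname.
    """
    # urlparse only finds a hostname when a scheme is present; fall back to the raw value.
    hostname = urlparse(host).hostname or host.strip("/")
    return f"sc://{hostname}:443/;token={token};x-databricks-cluster-id={cluster_id}"


def connect_from_env():
    """Point SPARK_REMOTE at the cluster, then open a Databricks Connect session."""
    os.environ["SPARK_REMOTE"] = build_spark_remote(
        os.environ["DATABRICKS_HOST"],
        os.environ["DATABRICKS_TOKEN"],
        os.environ["DATABRICKS_CLUSTER_ID"],
    )
    # Imported lazily: requires pip install 'databricks-connect>=13.0'.
    from databricks.connect import DatabricksSession

    return DatabricksSession.builder.getOrCreate()
```

Reading the token from the environment keeps credentials out of source control, and the same script then runs unchanged under any IDE's debugger.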

Partner integration made easy with Databricks Connect

Our partners can easily embed the Databricks Connect library into their products to build deep integrations and new experiences with the Databricks Lakehouse.

For example, our partner Dataiku (a low-code platform for visually defining and scripting workflows using SQL and Python) uses Databricks Connect to run PySpark recipes directly on the Databricks Lakehouse.

"Dataiku's integration with Databricks provides an easy-to-use analytics and AI solution for both business and technical users. With the launch of Databricks Connect 'v2', our customers can now use Databricks to run both visual and code-based workflows built in Dataiku to accelerate time to value with AI." - Paul-Henri Hincelin, VP of Field Engineering at Dataiku

Get Started with Databricks Connect today!

The Databricks Connect client library is available for download today. Connect it to your DBR 13 cluster and get started!

Take a look at our Databricks Connect documentation for AWS and Azure, and give it a try: debug your code from your favorite IDE or build an interactive data app! We would also love to hear your feedback about Databricks Connect in the Databricks Community.

Stay tuned for further updates and improvements to Databricks Connect, such as support for Scala and Streaming!
