How ActionIQ Integrates with Databricks Lakehouse Part Two: Step-by-Step Workflow to Activate Propensity Modeling

In our previous blog post, we discussed how ActionIQ partners with Databricks to address the key challenge organizations face in achieving their personalization goals: finding the balance between business self-service and data governance. ActionIQ, powered by its HybridCompute technology, offers a unique integration pattern that allows organizations to deploy their composable CDP directly within the Databricks Lakehouse environment. This integration offers several advantages over the traditional bundled CDP architecture:

- It reduces operational burden by removing the need to copy data across systems

- It centralizes data governance and ensures data consistency

- It accelerates time to insights through a no-code interface for non-technical users

We also outlined an example workflow in which a retail brand seamlessly leverages ActionIQ and Databricks, via the HybridCompute integration, to activate personalized content and offers for target customers based on their individual purchase propensity scores for various product categories.

Integrating ActionIQ with Databricks via HybridCompute, Enabled by Query Pushdown

Now, let's explore the key steps required to complete the workflow with ActionIQ's composable CDP deployed within the Databricks Lakehouse via a Connected Application. In this integration pattern, ActionIQ connects to a customer's Databricks account to process data but doesn't persist data outside of the Databricks account.

The four high-level steps in establishing this connection are:

- Ensure data readiness in Databricks

- Set up the Databricks connection within ActionIQ

- Generate SQL queries from ActionIQ

- Use the query results to drive customer engagement

For each step, we will present both the concept and the implementation details using the retail use case outlined above. Please note that the data utilized in the use case is synthetic and has been created solely for the purpose of demonstration and illustration.

Step 1: Ensure Data Readiness in Databricks

As a Customer Data Platform (CDP), ActionIQ implements a customer-centric data model that is purpose-built around one dimension: your definition of a customer. The queries generated by ActionIQ result in a list, or audience, of distinct user IDs of customers that meet certain criteria, as well as profile attributes and aggregated attributes that describe those customers. Translated to SQL, these queries all contain a GROUP BY on the customer identifier, which is what distinguishes them from non-customer-centric queries.

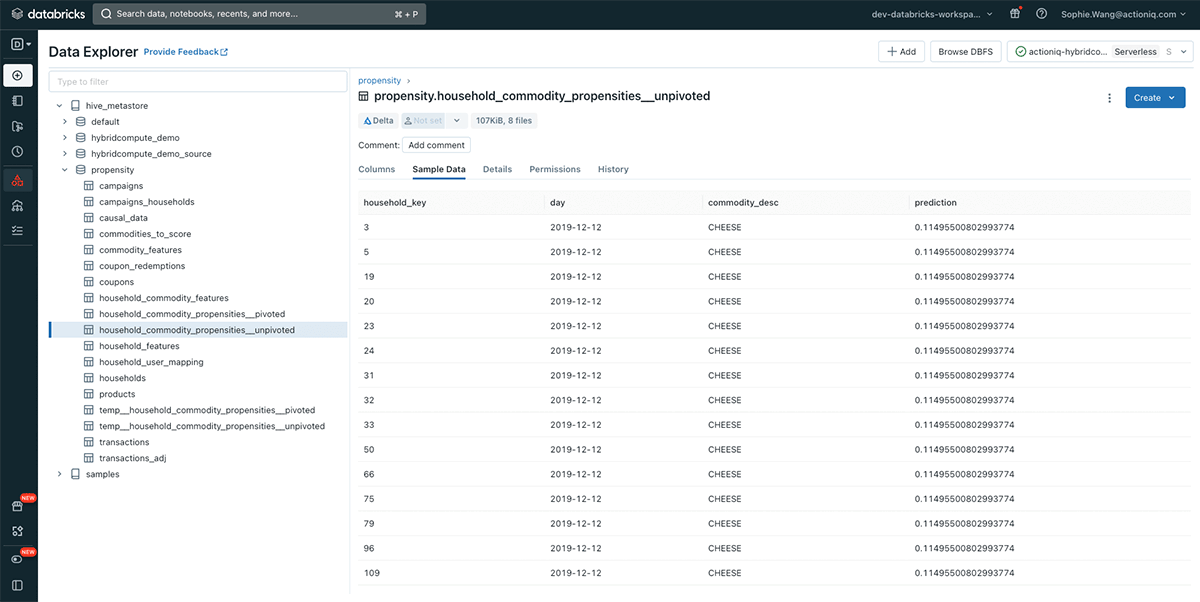

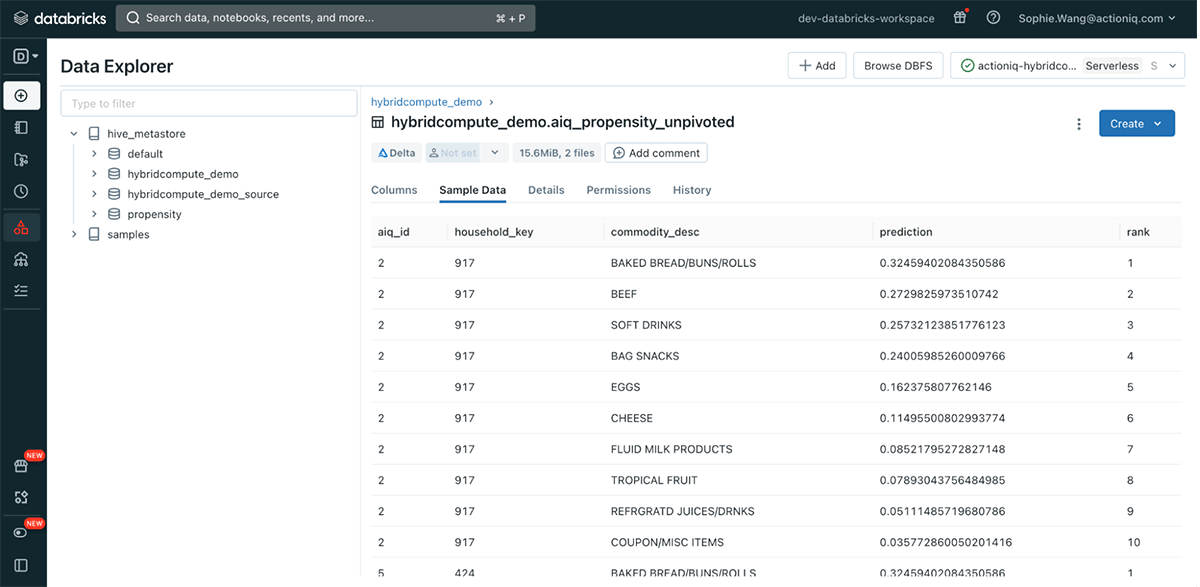

Let's assume the propensity scores are already calculated and recorded in a table residing in the Databricks Lakehouse (Figure 1). Before connecting Databricks with ActionIQ, your IT team needs to model the propensity scoring data to make it query-ready in ActionIQ. This can be achieved by creating another view of the table, mapping the Databricks identifier household_key to the aiq_id expected by ActionIQ (Figure 2).
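The modeling step above can be sketched in a few lines. The example below is a minimal illustration that uses Python's built-in sqlite3 module as a local stand-in for a Databricks SQL warehouse. Only household_key and aiq_id come from this post; the table name, view name, and the category and propensity_score columns are assumptions made for illustration, and the actual schema in Figure 1 may differ.

```python
import sqlite3

# Local stand-in for the lakehouse table. Only household_key and aiq_id
# are named in the post; every other name here is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE propensity_scores ("
    "household_key INTEGER, category TEXT, propensity_score REAL)"
)
conn.executemany(
    "INSERT INTO propensity_scores VALUES (?, ?, ?)",
    [(1, "BREAD", 0.81), (1, "SOFT DRINKS", 0.45), (2, "BREAD", 0.12)],
)

# The view exposes the same rows and renames household_key to aiq_id.
conn.execute(
    "CREATE VIEW propensity_scores_aiq AS "
    "SELECT household_key AS aiq_id, category, propensity_score "
    "FROM propensity_scores"
)

# Inspect the view's column names and contents.
view_columns = [
    row[1] for row in conn.execute("PRAGMA table_info(propensity_scores_aiq)")
]
view_rows = conn.execute(
    "SELECT aiq_id, category, propensity_score "
    "FROM propensity_scores_aiq ORDER BY aiq_id, category"
).fetchall()
```

Because the view only renames a column, no data is copied; the underlying table remains the single source of truth.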

Step 2: Set up the Databricks Connection within ActionIQ

Once the propensity scoring table is properly modeled, your IT team, specifically the CDP administrator, can proceed with setting up ActionIQ's HybridCompute integration with Databricks.

To begin, you will collaborate with your Databricks administrator to establish proper permission control, ensuring a secure connection between the two systems. We recommend creating a dedicated Databricks service principal for ActionIQ and granting it access to your Databricks resources. Additionally, you will set up a separate SQL warehouse for query pushdown workloads. To allow ActionIQ to use the newly created warehouse, you will grant the ActionIQ service principal "Can Use" permissions on the warehouse and access to the propensity scoring table you wish to use in ActionIQ.

Using Unity Catalog in Databricks Data Explorer, you can set granular permissions at the table or column level, specifying what users can access and use later within ActionIQ. You can also restrict the propensity scoring table to read-only access in Databricks. This means that users connecting through ActionIQ can only push down queries that read from the table and cannot modify it.
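As a rough sketch of the read-only setup described above, the helper below generates the Unity Catalog GRANT statements a Databricks administrator might run for a single table. The function name and the catalog, schema, table, and principal names are hypothetical. The "Can Use" permission on the SQL warehouse is managed through the warehouse's permission settings rather than through these statements, so it is not covered here.

```python
def build_read_only_grants(
    principal: str, catalog: str, schema: str, table: str
) -> list[str]:
    """Return Unity Catalog GRANT statements for read-only access to one table.

    All argument values are supplied by the caller; nothing is executed here.
    """
    return [
        # The principal must be able to reference the catalog and schema...
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
        # ...and read, but not modify, the table itself.
        f"GRANT SELECT ON TABLE `{catalog}`.`{schema}`.`{table}` TO `{principal}`",
    ]


# Hypothetical names, for illustration only.
statements = build_read_only_grants(
    "actioniq-service-principal", "main", "retail", "propensity_scores_aiq"
)
```

Granting only SELECT, with no MODIFY privilege, is what keeps the connection read-only.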

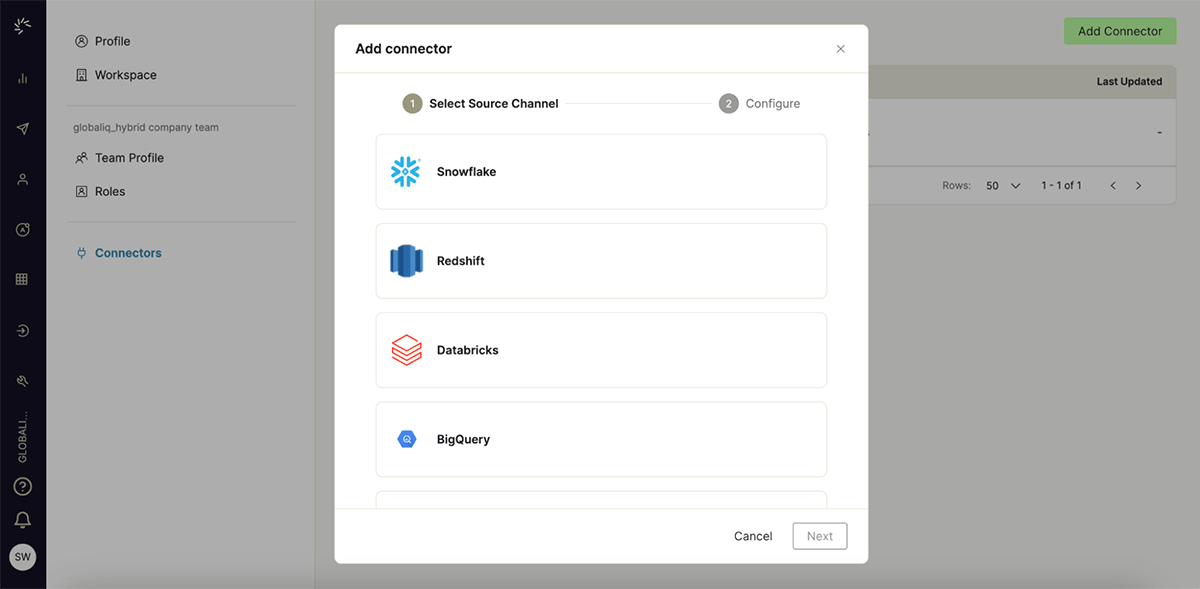

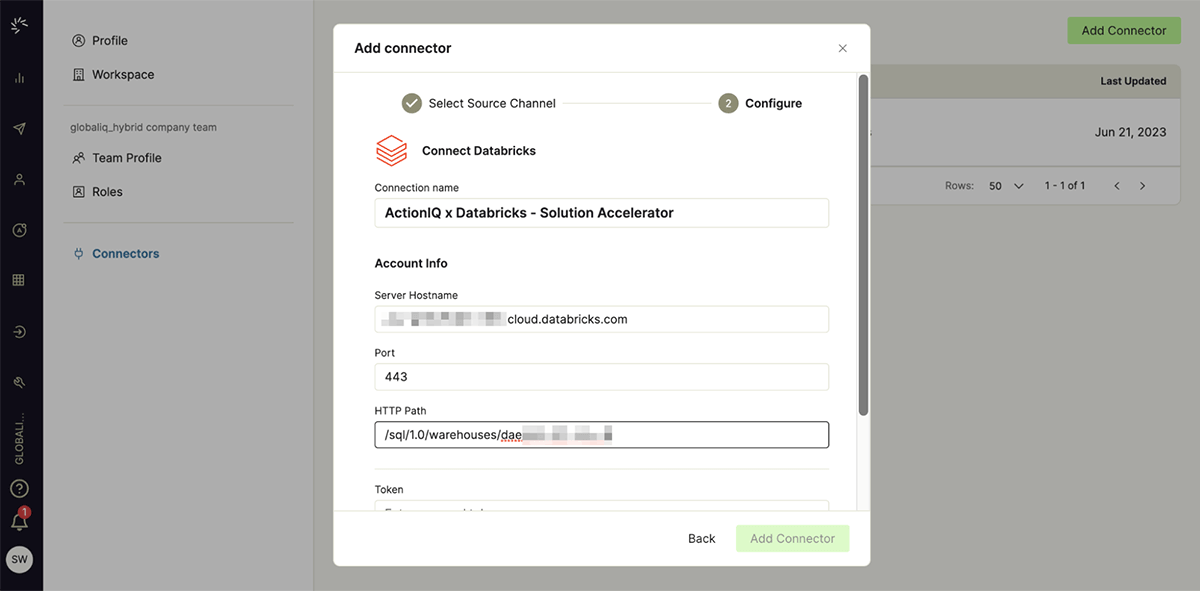

Following the permission set-up, your CDP administrator will start configuring the HybridCompute integration directly within ActionIQ's self-service UI:

- Create a new Databricks connector in ActionIQ (Figure 3)

Figure 3. Create a Databricks connector in ActionIQ

- Provide your Databricks account information, including Server Hostname, Token, Port and HTTP Path (Figure 4). These details can be accessed under Connection Details in your Databricks workspace.

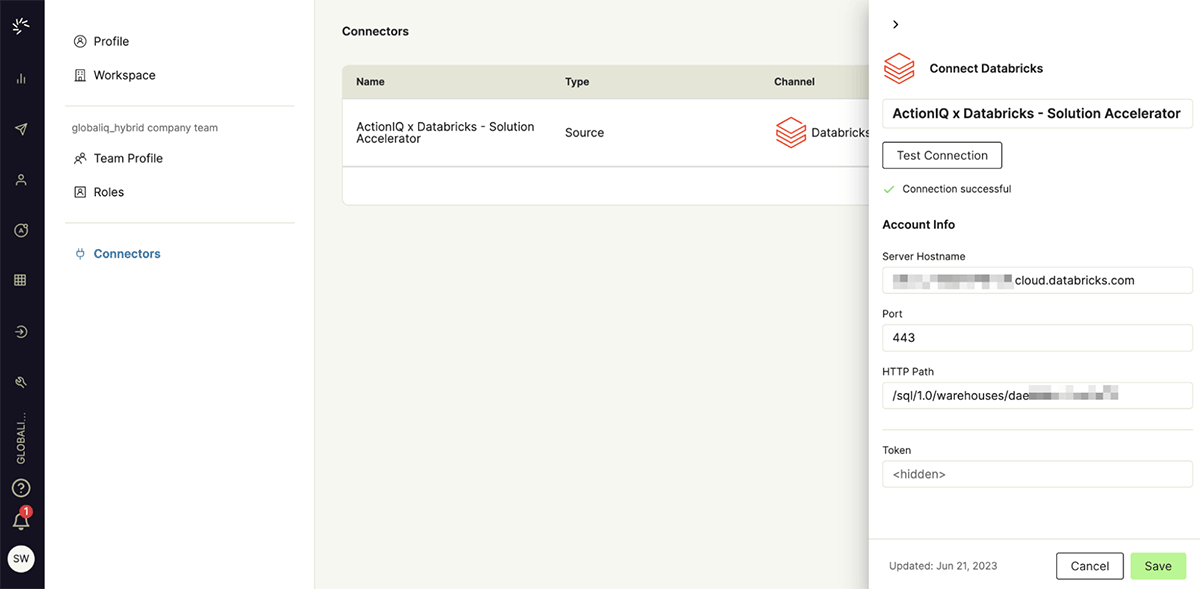

Figure 4. Configure the Databricks connector in ActionIQ

- After entering the required information, test the Databricks connection (Figure 5). In case of a test failure, an error message will be displayed to assist with troubleshooting. Upon seeing the "connection successful" message, finalize the configuration process by clicking on "Add Connector" or "Save".

Figure 5. Test the Databricks connector in ActionIQ

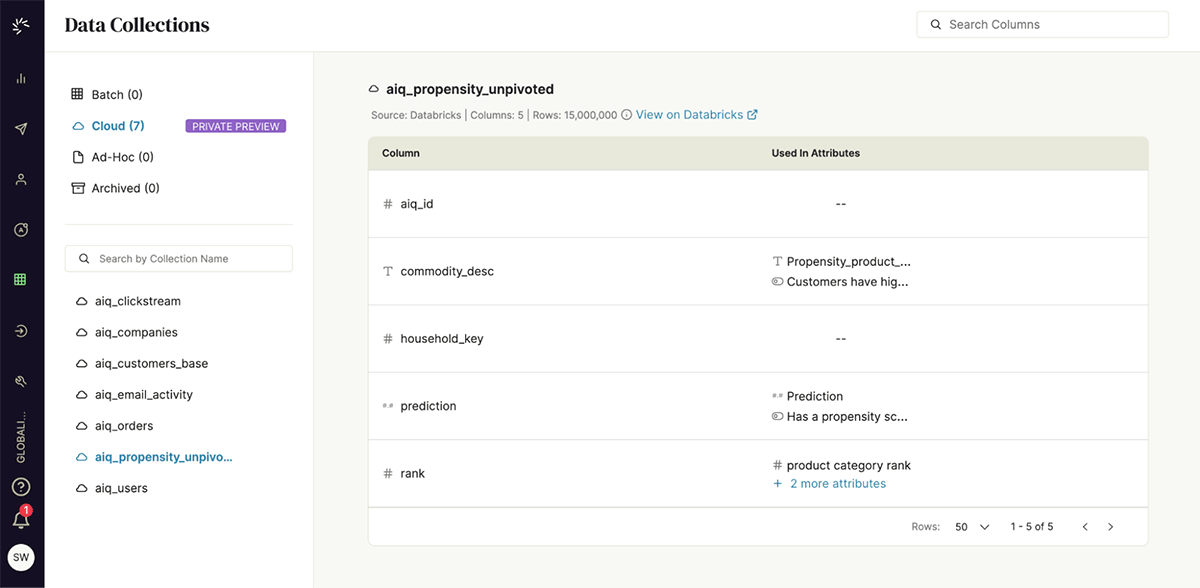

Congratulations! You have now successfully set up and configured ActionIQ's HybridCompute integration with Databricks Lakehouse via native query pushdown. ActionIQ pulls only the table metadata (schema, row count) from the Databricks Lakehouse, which makes the propensity scoring table discoverable from the ActionIQ user interface (Figure 6).
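To show how the connector fields fit together, here is a minimal sketch that assembles Server Hostname, Port and HTTP Path into a Databricks JDBC connection URL using token authentication. How ActionIQ builds its connection internally is not described in this post, so treat the function, its validation rules, and the example hostname and warehouse path as assumptions.

```python
def build_jdbc_url(server_hostname: str, http_path: str, port: int = 443) -> str:
    """Assemble a Databricks JDBC URL from the connector fields.

    The personal access token is deliberately left out of the URL; it would
    be supplied separately as the password (PWD) for the user "token".
    """
    if not server_hostname or "://" in server_hostname:
        raise ValueError("server_hostname must be a bare host name")
    if not http_path.startswith("/"):
        raise ValueError("http_path must start with '/'")
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return (
        f"jdbc:databricks://{server_hostname}:{port};"
        f"httpPath={http_path};AuthMech=3;UID=token"
    )


# Hypothetical workspace values, for illustration only.
url = build_jdbc_url("example.cloud.databricks.com", "/sql/1.0/warehouses/abc123")
```

Validating the fields before attempting a connection mirrors the connector test in the UI: malformed input fails early with a clear message.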

Step 3: Generate SQL Queries from ActionIQ

Now, your marketing team can start implementing the use case they envisioned. The primary objective is to engage customers who exhibit a high propensity score for bread and a moderate propensity score for soft drinks. The strategy involves targeting these customers with a bundled offer designed to incentivize purchases in both product categories. The communication will be delivered through either email in a newsletter format or paid media channels, depending on the customers' preferred channel.

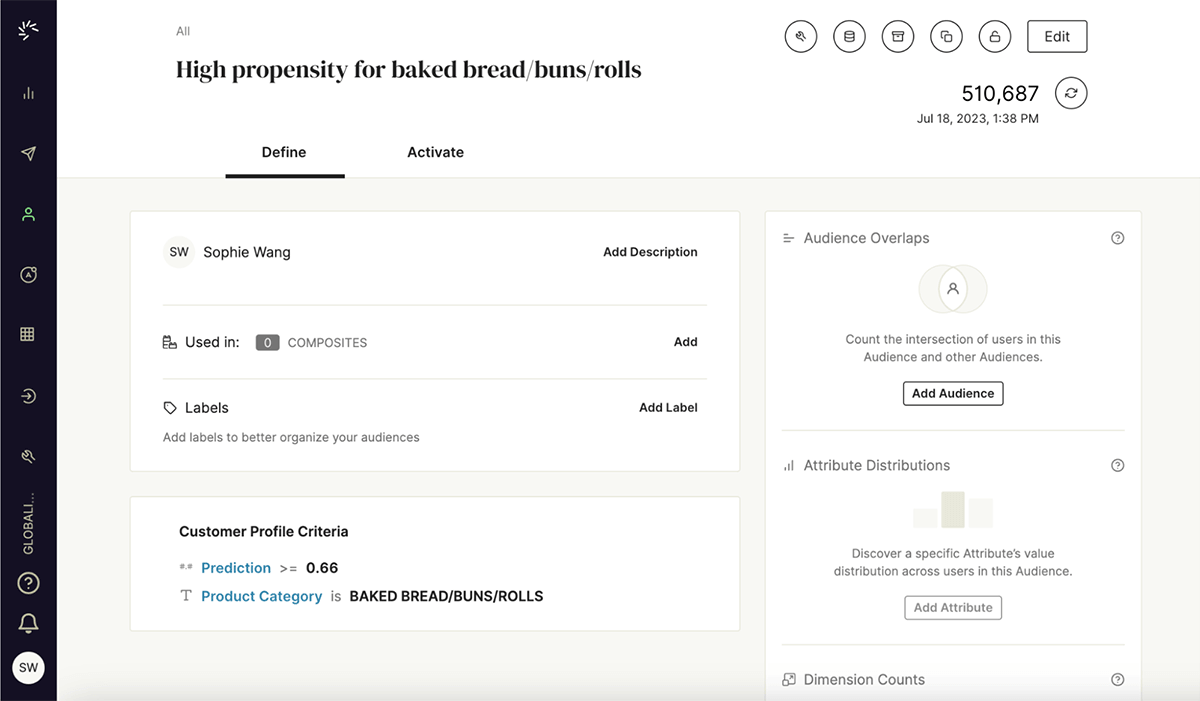

Audience Creation

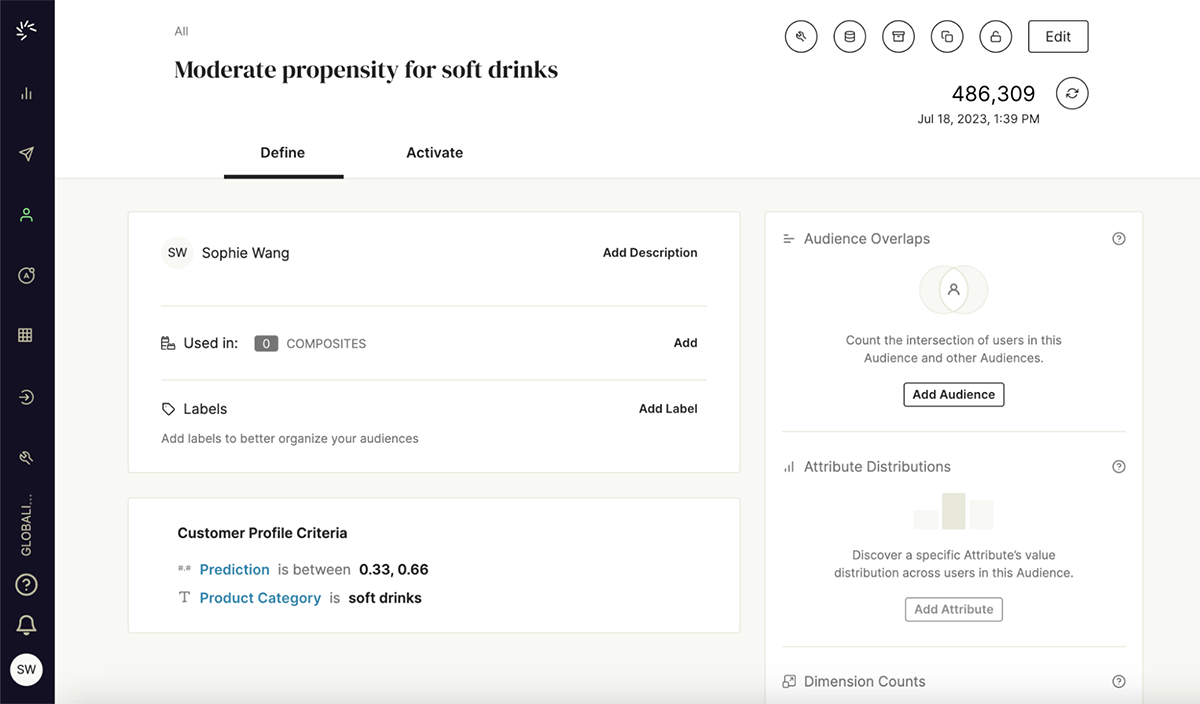

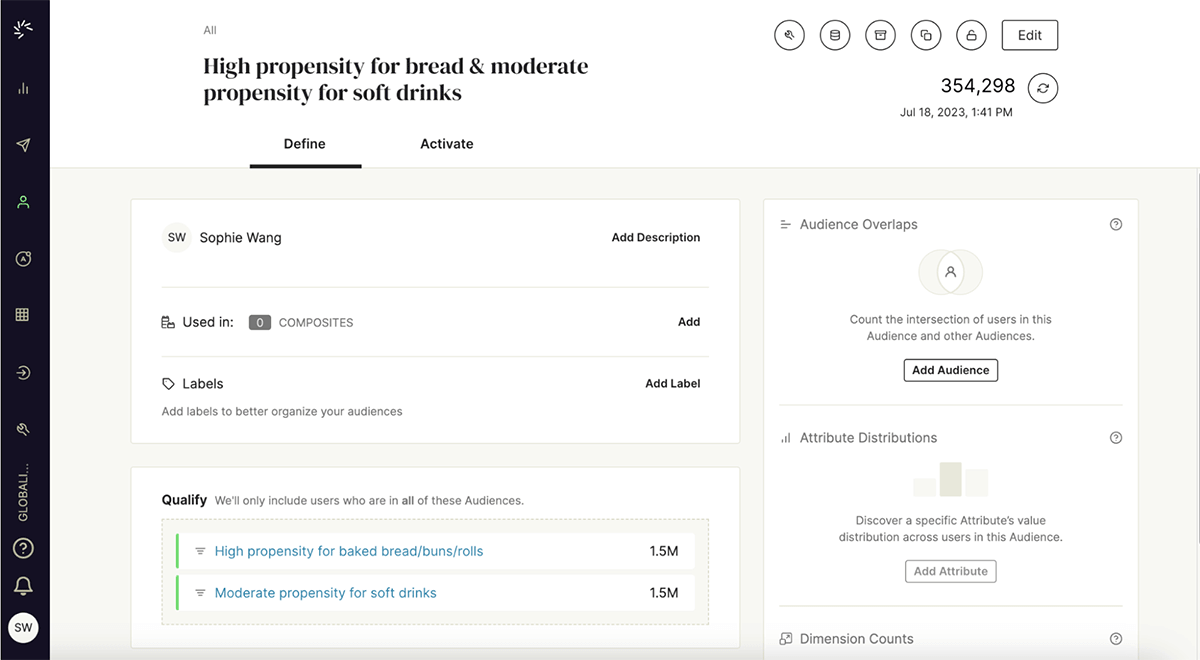

Within ActionIQ's Audience Center module, your marketing team can create the target audience segment instantly using the intuitive no-code UI. This can be accomplished by combining two basic audiences (Figure 9): customers with a high propensity score (>= 0.66) for bread (Figure 7) AND customers with a moderate propensity score (between 0.33 and 0.66) for soft drinks (Figure 8).

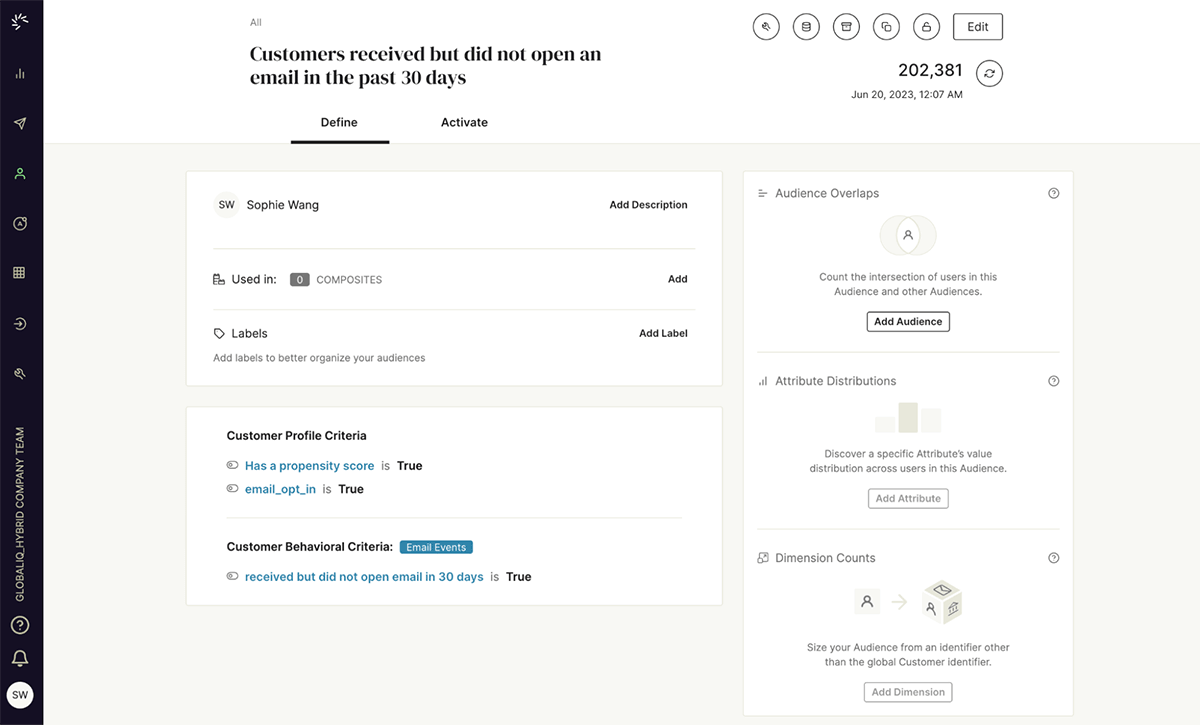

At this step, you will also create additional audience segments necessary for the cross-channel customer journeys you intend to build later. For example, one of these segments will be specifically designed for email communications, determining the recipients of the personalized email newsletter. Additionally, you will create a separate segment for paid media advertising. All of these audience segments can be easily created with the ActionIQ Audience Center UI (Figure 10). The connected application seamlessly translates these user actions into SQL queries that are ready to be executed in the Databricks Lakehouse.
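To make the translation from audience definition to SQL concrete, the sketch below shows one way the combined audience (high propensity for bread AND moderate propensity for soft drinks) could be written as a customer-centric query grouped by aiq_id. This is not the SQL that ActionIQ actually generates. The table layout, column names and category labels are hypothetical, the moderate band is assumed to be 0.33 inclusive up to but not including 0.66, and sqlite3 stands in for the Databricks SQL warehouse so the example runs locally on synthetic rows.

```python
import sqlite3

# One row per customer, per category (hypothetical layout).
AUDIENCE_SQL = """
SELECT aiq_id
FROM propensity_scores_aiq
GROUP BY aiq_id
HAVING MAX(CASE WHEN category = 'BREAD' THEN propensity_score END) >= 0.66
   AND MAX(CASE WHEN category = 'SOFT DRINKS' THEN propensity_score END) >= 0.33
   AND MAX(CASE WHEN category = 'SOFT DRINKS' THEN propensity_score END) < 0.66
ORDER BY aiq_id
"""

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE propensity_scores_aiq ("
    "aiq_id INTEGER, category TEXT, propensity_score REAL)"
)
conn.executemany(
    "INSERT INTO propensity_scores_aiq VALUES (?, ?, ?)",
    [
        (1, "BREAD", 0.81), (1, "SOFT DRINKS", 0.45),  # high bread, moderate soda
        (2, "BREAD", 0.90), (2, "SOFT DRINKS", 0.70),  # soda score too high
        (3, "BREAD", 0.40), (3, "SOFT DRINKS", 0.50),  # bread score too low
        (4, "BREAD", 0.66), (4, "SOFT DRINKS", 0.33),  # both on the lower bounds
        (5, "BREAD", 0.70),                            # no soda score at all
    ],
)

# Distinct customer IDs that satisfy both conditions.
audience = [row[0] for row in conn.execute(AUDIENCE_SQL)]
```

Customers without a soft drinks score drop out because comparisons against NULL are not true, which matches the intent of an AND between the two basic audiences.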

Step 4: Use the Query Results to Drive Customer Engagement

When it's time to engage customers using this data, ActionIQ automatically pushes the query down to the Databricks Lakehouse via JDBC to return the relevant subset of information. As the results flow into ActionIQ, the query processor performs further transformations to arrive at a final result set that's aligned with the user's needs. It's important to note that only the data required to fulfill the user's action and use case is returned, and it will not be stored for longer than the job execution.

Journey Orchestration

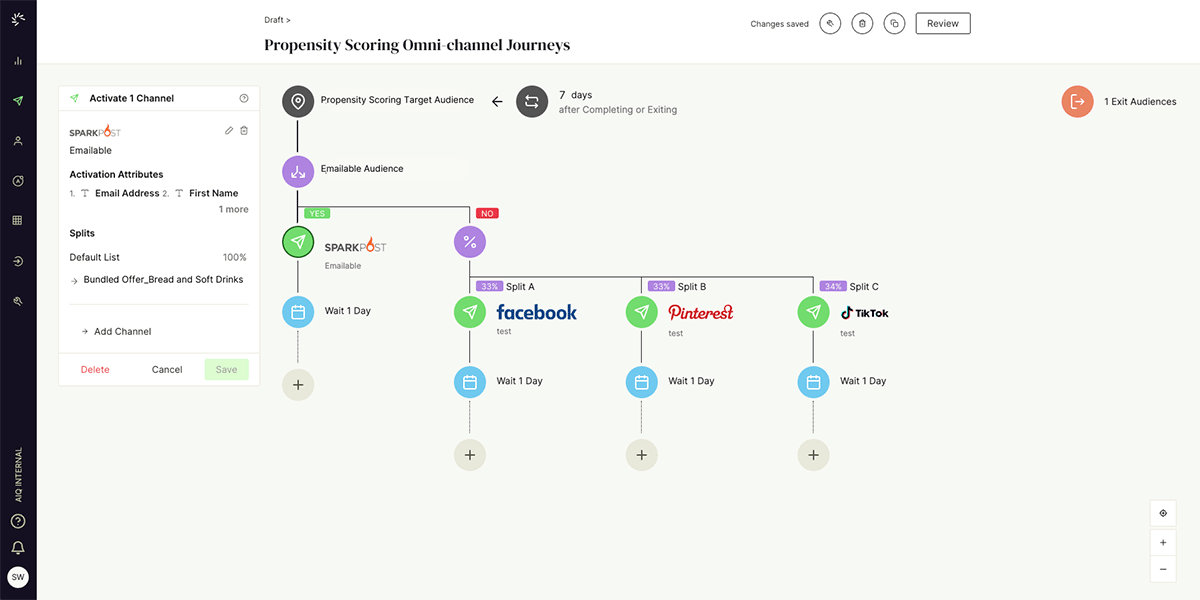

Following the execution of the queries, the audience segment results are returned to the ActionIQ user interface. From there, your marketing team can start constructing multi-stage customer journeys to activate the bundled offer, featuring bread and soft drinks, targeted at the intended audience. Within the target audience (customers with a high propensity for bread AND a moderate propensity for soft drinks), the journeys can be further split based on customers' channel preferences as follows (Figure 11):

- If the customer is part of the emailable audience segment, they will receive the bundled offer through the email newsletter.

- For customers not included in the emailable segment, the bundled offer will be delivered via paid media advertising. Additionally, this group can be evenly distributed across different paid media channels for testing purposes.
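The split described in the two bullets above can be sketched as a small routing function. This is an illustrative assumption rather than ActionIQ's journey engine: the function name, the channel labels, and the round-robin approach to an even split are all choices made for this example.

```python
def split_journey(
    audience_ids: list[int], emailable_ids: set[int], paid_channels: list[str]
) -> dict[int, str]:
    """Route each customer to email or to one of the paid media channels.

    Emailable customers get the newsletter; everyone else is assigned to
    paid channels in round-robin order, so group sizes differ by at most one.
    """
    assignments: dict[int, str] = {}
    paid_index = 0
    for aiq_id in sorted(audience_ids):  # sort for a reproducible assignment
        if aiq_id in emailable_ids:
            assignments[aiq_id] = "email"
        else:
            assignments[aiq_id] = paid_channels[paid_index % len(paid_channels)]
            paid_index += 1
    return assignments


# Synthetic IDs, for illustration only.
routes = split_journey(
    audience_ids=[1, 2, 3, 4, 5, 6, 7],
    emailable_ids={2, 5},
    paid_channels=["facebook", "pinterest", "tiktok"],
)
```

Round-robin gives an exactly even split for a fixed audience; a hash-based assignment would be a reasonable alternative when customers need to stay in the same test group as the audience changes.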

Once you're ready to activate the designed journey, the target audience segments and their associated attributes will be written to the designated channel destination (such as SparkPost, Facebook, Pinterest or TikTok) via file transfer or API.

Real-Time Personalization

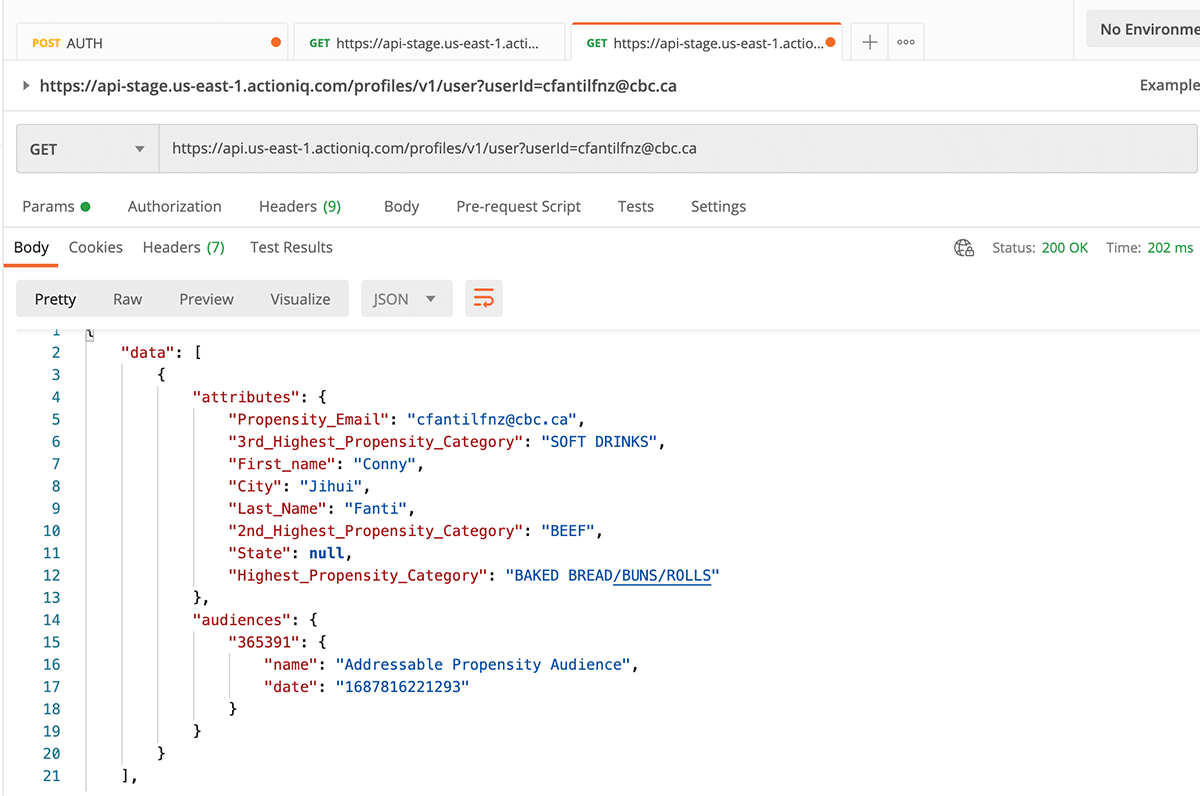

Besides orchestrating personalized customer experiences on outbound channels, your marketing team can extend the same level of personalization in real time to visitors on your website. By leveraging the ActionIQ Profile API, your personalization engine can retrieve the comprehensive customer profile, encompassing all attributes and audience memberships, within milliseconds (Figure 12). This lets you personalize the web banner offer for each customer, presenting the product category for which they have a high propensity score.
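As a simple illustration of the last step, the sketch below picks the banner category from a profile that has already been retrieved. The shape of the profile payload is an assumption made for this example and does not reflect the actual Profile API response; the 0.66 threshold is the "high" cut-off used in the audience definitions earlier.

```python
from typing import Optional

HIGH_PROPENSITY = 0.66  # "high" threshold from the audience definitions


def pick_banner_category(profile: dict) -> Optional[str]:
    """Return the category with the highest high-propensity score, if any.

    `profile` is a hypothetical payload of the form
    {"aiq_id": ..., "propensity_scores": {category: score, ...}}.
    """
    scores = profile.get("propensity_scores", {})
    high = {cat: score for cat, score in scores.items() if score >= HIGH_PROPENSITY}
    if not high:
        return None  # fall back to a default banner
    return max(high, key=high.get)


# Synthetic profile, for illustration only.
banner = pick_banner_category(
    {"aiq_id": 1, "propensity_scores": {"BREAD": 0.81, "SOFT DRINKS": 0.45}}
)
```

Returning None when no score clears the threshold leaves room for the personalization engine to show a default, non-personalized banner.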

Conclusion

As demonstrated in the step-by-step workflow above, this retail brand successfully achieved personalization without data replication by leveraging ActionIQ's HybridCompute integration with the Databricks Lakehouse. In this scenario, marketers efficiently operationalize the propensity model developed and trained in Databricks, without having to move or copy data into ActionIQ. They can seamlessly discover audiences and orchestrate consistent, personalized experiences across channels using ActionIQ's user-friendly interface. The benefits for the business are substantial, as the activation of personalized offers leads to increased customer engagement, improved conversion rates, and ultimately, revenue growth.

Interested in learning more about how a Composable CDP can help you scale your customer data operations? Reach out to the ActionIQ team.
