Mosaic AI: Build and Deploy Production-quality AI Agent Systems

Announcing new products to simplify agent and RAG development, model fine-tuning, AI evaluation, tools governance, and more

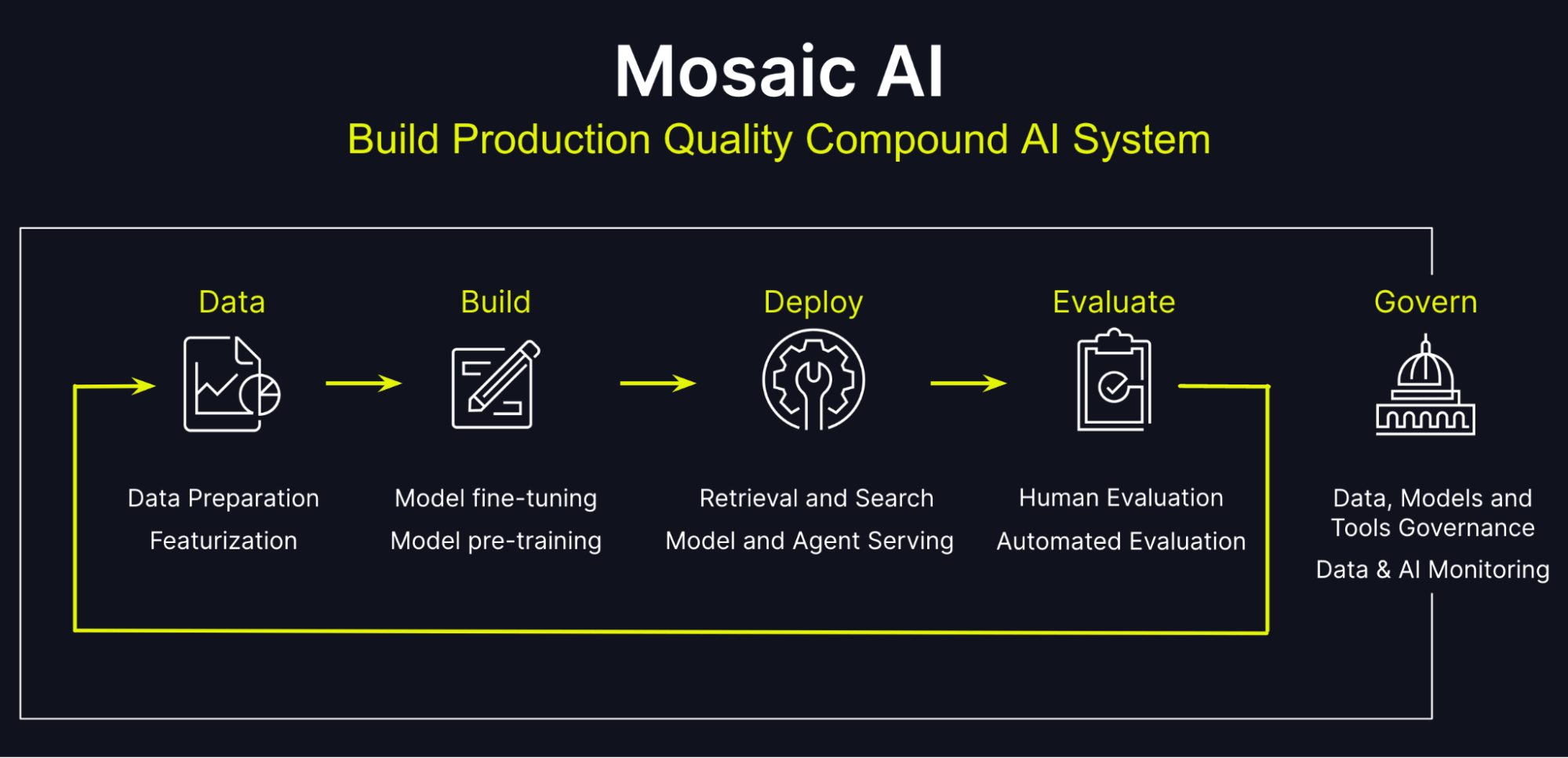

Over the last year, we have seen a surge of commercial and open-source foundation models showing strong reasoning abilities on general knowledge tasks. While general models are an important building block, production AI applications often employ Compound AI Systems, which leverage multiple components such as tuned models, retrieval, tool use, and reasoning agents. These AI agent systems augment foundation models to deliver substantially higher quality and help customers confidently take their GenAI apps to production.

Today at the Data and AI Summit, we announced several new capabilities that make Databricks Mosaic AI the best platform for building production-quality AI agent systems. These features are based on our experience working with thousands of companies to put AI-powered applications into production. Today’s announcements include support for fine-tuning foundation models, an enterprise catalog for AI tools, a new SDK for building, deploying, and evaluating AI agents, and a unified AI gateway for governing deployed AI services.

With this announcement, Databricks has entirely integrated and substantially expanded the model-building capabilities first included in our MosaicML acquisition one year ago.

Building and Deploying Compound AI Systems

The evolution from monolithic AI models to compound systems is an active area of both academic and industry research. Recent results have found that “state-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models.” These findings are reinforced by what we see in our customer base. Take, for example, the financial research firm FactSet: when they deployed a commercial LLM for their Text-to-Financial-Formula use case, they achieved only 55% accuracy on the generated formulas. However, modularizing their model into a compound system allowed them to specialize each task and reach 85% accuracy. Databricks Mosaic AI supports building AI systems through the following products:

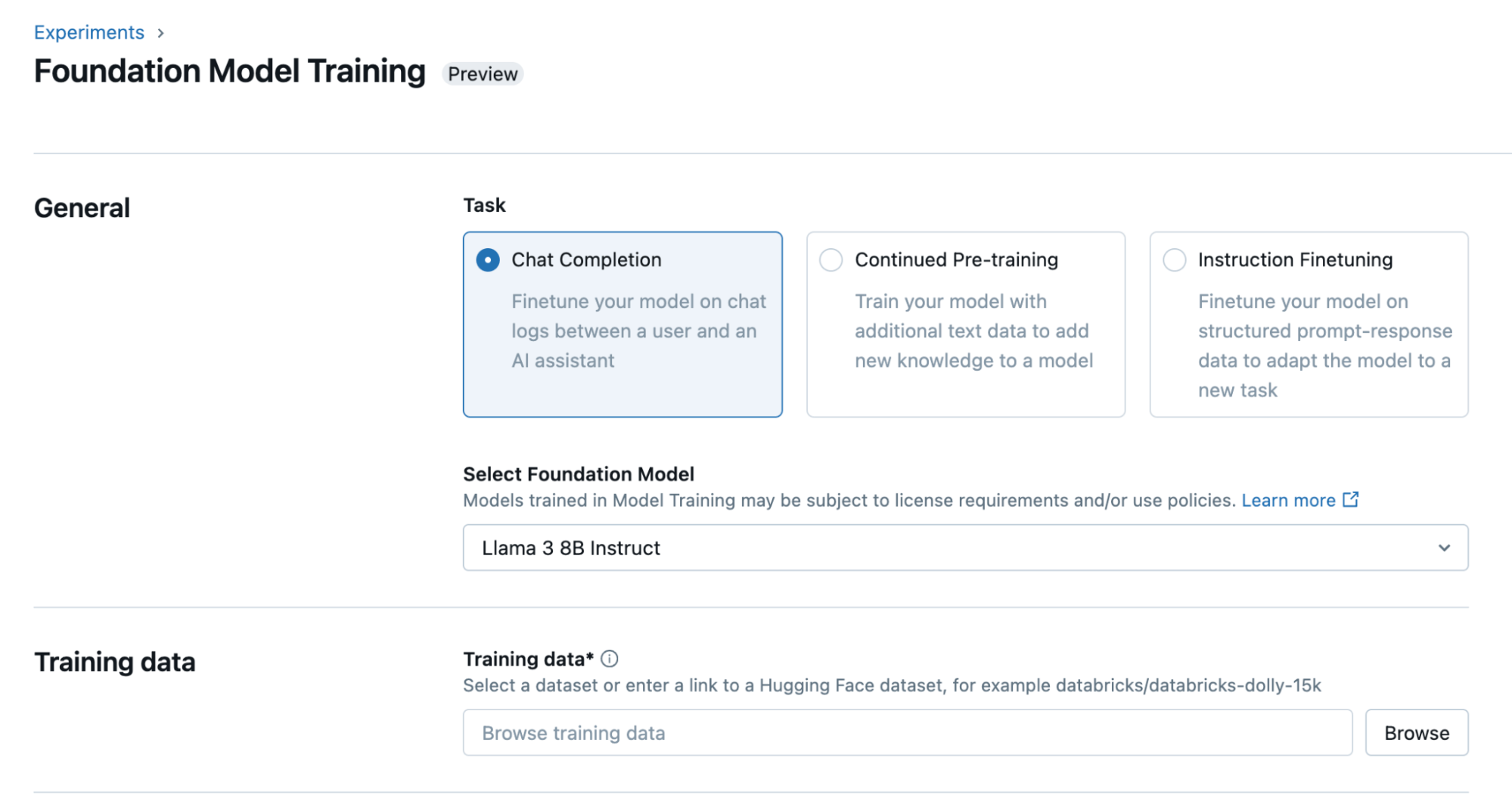

- Fine-tuning with Mosaic AI Model Training: Whether you're fine-tuning a model on a small dataset or pre-training a model from scratch (like DBRX) with trillions of tokens on 3,000+ GPUs, we provide an easy-to-use, managed API for model training, abstracting away the underlying infrastructure. We’re seeing our customers find success with fine-tuning smaller open source models for system components to reduce cost and latency while matching GPT-4 performance on enterprise tasks with proprietary data. Model Training empowers customers to fully own their models and their data, allowing them to iterate on quality.

Users only need to select a task and a base model and provide training data (as a Delta table or a .jsonl file) to get a fully fine-tuned model that they own for their specialized task.
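To make the .jsonl option concrete, here is a minimal Python sketch that writes question/answer pairs to a training file. It assumes a chat-style schema in which each line holds a `messages` list of `role`/`content` entries; check the Model Training documentation for the exact schema your chosen task expects. The example data and the helper names `to_chat_record` and `write_jsonl` are hypothetical.

```python
import json
from pathlib import Path
from typing import Iterable, Tuple

# Hypothetical example pairs; replace with your own proprietary data.
EXAMPLES = [
    ("What does SKU A-100 cost?", "SKU A-100 lists at $25."),
    ("Is SKU B-200 in stock?", "Yes, SKU B-200 ships within two days."),
]

def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one question/answer pair as a chat-style training record (assumed schema)."""
    return {
        "messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def write_jsonl(path: Path, pairs: Iterable[Tuple[str, str]]) -> int:
    """Write one JSON object per line and return the number of records written."""
    count = 0
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer)) + "\n")
            count += 1
    return count
```

Calling `write_jsonl(Path("train.jsonl"), EXAMPLES)` would produce a two-line file with one JSON object per line; keeping one record per line is what makes the format easy to stream and split.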

- Shutterstock ImageAI, Powered by Databricks: Our partner Shutterstock today announced a new text-to-image model trained exclusively on Shutterstock’s world-class image repository using Mosaic AI Model Training. It generates customized, high-fidelity, trusted images that are tailored to specific business needs.

- Mosaic AI Vector Search, now with support for Customer Managed Keys and Hybrid Search: We recently made Vector Search generally available. Vector Search now also supports the GTE-large embedding model, which offers good retrieval performance and an 8K context length. In addition, it supports Customer Managed Keys, giving customers more control over their data, and hybrid search to improve retrieval quality.

- Mosaic AI Agent Framework for fast development: RAG applications are the most popular GenAI applications we see on our platform, and today we’re excited to announce the Public Preview of our Agent Framework. It makes it very easy to build an AI system that is augmented by your proprietary data, safely governed and managed in Unity Catalog.

- Mosaic AI Model Serving support for agents; Foundation Model API general availability: In addition to serving models in real time, customers can now serve agents and RAG applications with Model Serving. We are also making Foundation Model APIs generally available, so customers can easily use foundation models either pay-per-token or with provisioned throughput for production workloads.
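The post does not describe how Vector Search merges keyword and embedding results in hybrid search. Reciprocal rank fusion (RRF) is one widely used technique for combining ranked lists, so the Python sketch below uses it purely to illustrate the idea; it is not a description of the Vector Search implementation, and the document IDs are made up.

```python
from collections import defaultdict
from typing import List

def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1. k=60 is the constant commonly used for RRF.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

keyword_hits = ["doc3", "doc1", "doc7"]  # e.g., from a keyword index
vector_hits = ["doc1", "doc5", "doc3"]   # e.g., from embedding similarity
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['doc1', 'doc3', 'doc5', 'doc7']
```

Because RRF uses only ranks, it sidesteps the fact that keyword scores and similarity scores live on different scales: documents found by both retrievers (here `doc1` and `doc3`) rise to the top.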

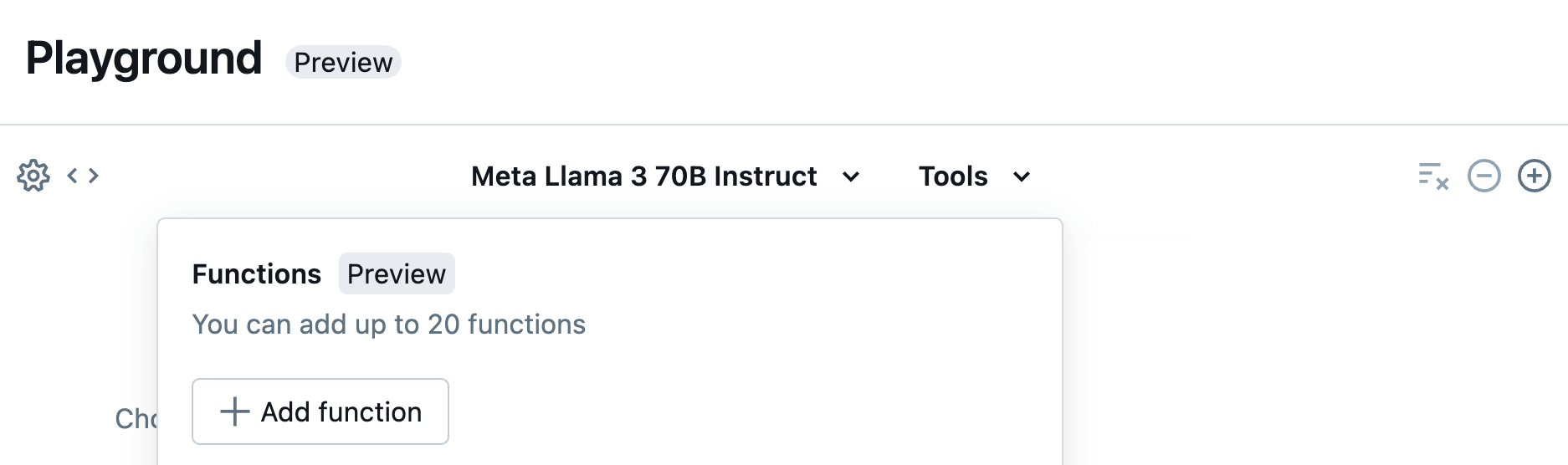

- Mosaic AI Tool Catalog and Function-Calling: Today we announced the Mosaic AI Tool Catalog, which lets customers create an enterprise registry of common functions, internal or external, and share these tools across their organization for use in AI applications. Tools can be SQL functions, Python functions, model endpoints, remote functions, or retrievers. We’ve also enhanced Model Serving to natively support function-calling, so customers can use popular open source models like Llama 3-70B as their agent’s reasoning engine.

Mosaic AI Model Serving now supports function-calling and users can quickly experiment with functions and base models in the AI Playground
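Function-calling works by having the model emit a structured request (a tool name plus arguments) that the application then executes. The Python sketch below shows that dispatch step against a small in-process registry. It assumes an OpenAI-style tool call whose `arguments` arrive as a JSON-encoded string; the registry, `register_tool`, and the `convert_currency` tool are hypothetical stand-ins, not the Tool Catalog API.

```python
import json
from typing import Callable, Dict

# Hypothetical in-process registry standing in for a shared tool catalog.
TOOLS: Dict[str, Callable[..., object]] = {}

def register_tool(fn: Callable[..., object]) -> Callable[..., object]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn

@register_tool
def convert_currency(amount: float, rate: float) -> float:
    """Hypothetical tool: convert an amount using a given exchange rate."""
    return round(amount * rate, 2)

def dispatch(tool_call: dict) -> object:
    """Execute a tool call of the assumed form {"name": ..., "arguments": "<JSON string>"}."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    args = json.loads(tool_call["arguments"])
    return TOOLS[name](**args)

# A tool call as a reasoning model might emit it (arguments are JSON-encoded).
call = {"name": "convert_currency", "arguments": json.dumps({"amount": 100, "rate": 0.92})}
print(dispatch(call))  # 92.0
```

In a full agent loop, the tool's result would be passed back to the model as a new message so it can compose its final answer.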

Evaluating AI Systems

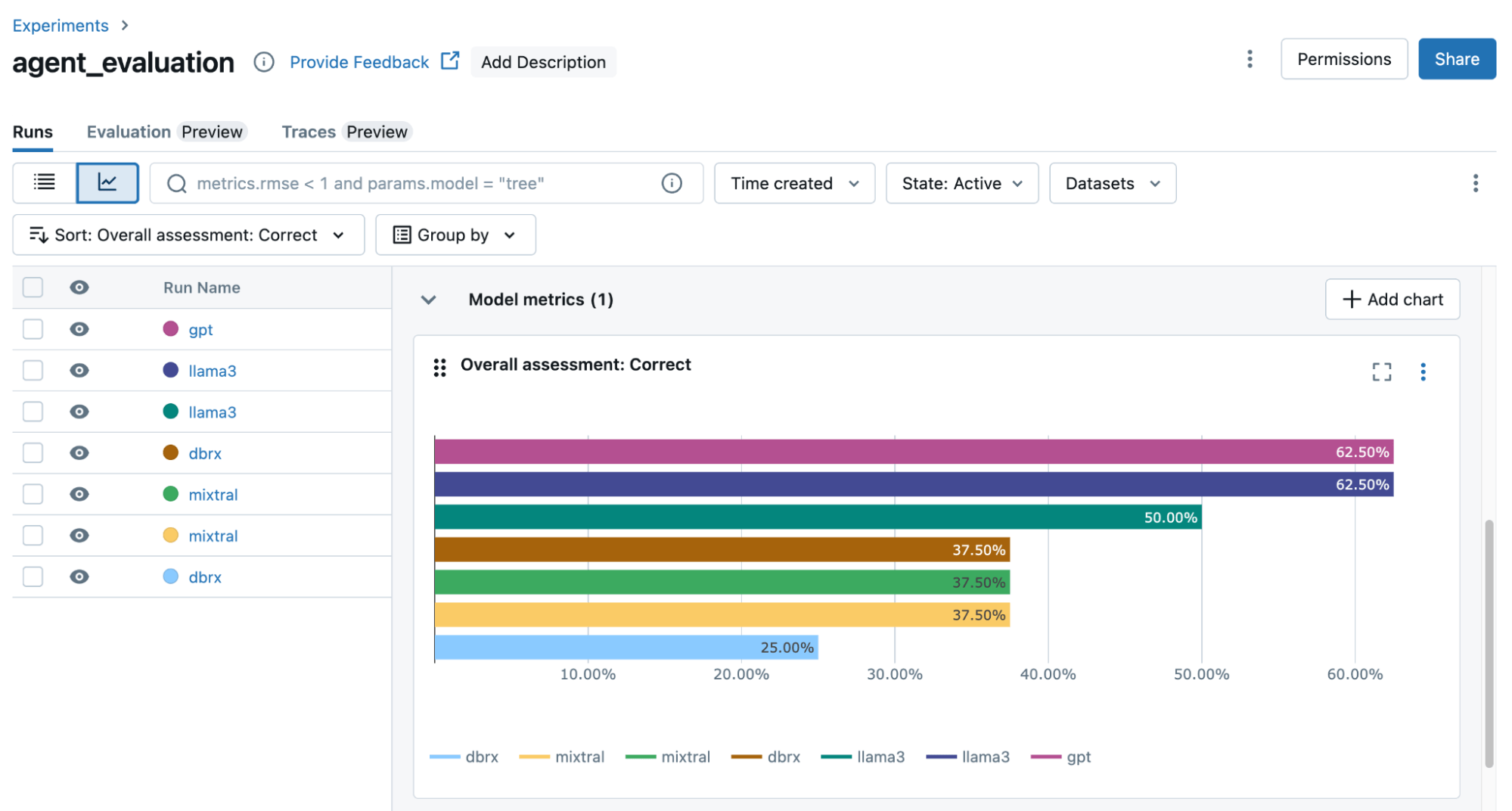

General-purpose AI models optimize for benchmarks such as MMLU, but deployed AI systems are instead designed to solve specific user tasks as part of a broader product (such as answering a support ticket, generating a query, or suggesting a response). To make sure these systems work well, it’s important to have a robust evaluation framework for defining quality metrics, gathering quality signals, and iterating on performance. Today we’re excited to announce several new evaluation tools:

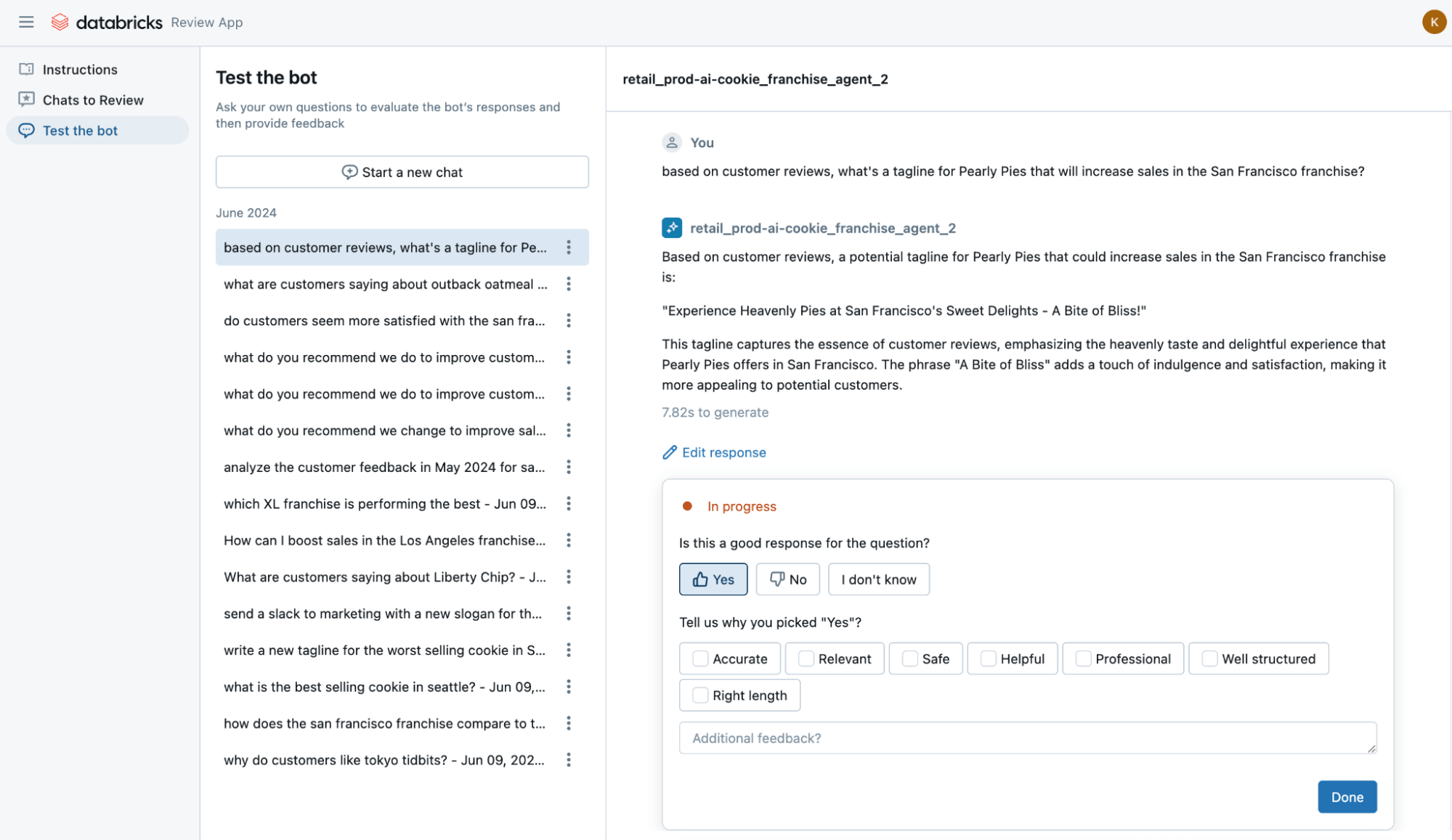

- Mosaic AI Agent Evaluation for Automated and Human Assessments: Agent Evaluation lets you define what high-quality answers look like for your AI system by providing “golden” examples of successful interactions. Once this quality yardstick exists, you can explore permutations of the system (tuning models, changing retrieval, or adding tools) and understand how each change alters quality. Agent Evaluation also lets you invite subject matter experts across your organization, even those without Databricks accounts, to review and label your AI system’s output for production quality assessments and to build up an extended evaluation dataset. Finally, system-provided LLM judges can further scale the collection of evaluation data by grading responses on common criteria such as accuracy or helpfulness. Detailed production traces can help diagnose low-quality responses.

Mosaic AI Agent Evaluation provides AI-assisted metrics to help developers form quick intuitions

Mosaic AI Agent Evaluation allows stakeholders, even those outside the Databricks Platform, to assess model outputs and provide ratings to help iterate on quality
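The core loop described above (score each system variant against the same golden set and compare) can be sketched in a few lines of Python. This is not the Agent Evaluation API: token-overlap F1 is a deliberately crude, hypothetical stand-in for the richer LLM judges and expert labels, and the golden examples and both system variants are made up.

```python
from typing import Callable, Dict, List

# Hypothetical "golden" examples: a question and the answer experts consider correct.
GOLDEN: List[Dict[str, str]] = [
    {"question": "What is the refund window?", "expected": "30 days"},
    {"question": "Which plan includes SSO?", "expected": "Enterprise plan"},
]

def token_f1(prediction: str, expected: str) -> float:
    """Token-overlap F1 between two strings (a crude stand-in for an LLM judge)."""
    pred = prediction.lower().split()
    gold = expected.lower().split()
    common = sum(min(pred.count(t), gold.count(t)) for t in set(gold))
    if common == 0:
        return 0.0
    precision = common / len(pred)
    recall = common / len(gold)
    return 2 * precision * recall / (precision + recall)

def evaluate(system: Callable[[str], str]) -> float:
    """Average token F1 of a system's answers over the golden set."""
    scores = [token_f1(system(ex["question"]), ex["expected"]) for ex in GOLDEN]
    return sum(scores) / len(scores)

# Two hypothetical system variants, e.g. before and after changing retrieval.
def baseline(question: str) -> str:
    return "Please contact support."

def improved(question: str) -> str:
    return "30 days" if "refund" in question else "The Enterprise plan"

print(f"baseline={evaluate(baseline):.2f} improved={evaluate(improved):.2f}")
# baseline=0.00 improved=0.90
```

Holding the golden set fixed is what makes the comparison meaningful: any change in the score comes from the system change, not from a change in the yardstick.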

- MLflow 2.14: MLflow is a model-agnostic framework for evaluating LLMs and AI systems, allowing customers to measure and track parameters at each step. With MLflow 2.14, we are excited to announce MLflow Tracing. With Tracing, developers can record each step of model and agent inference to debug performance issues and build evaluation datasets to test future improvements. Tracing is tightly integrated with Databricks MLflow Experiments, Databricks Notebooks, and Databricks Inference Tables, providing performance insights from development through production.
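In practice, MLflow provides its own tracing API (for example, the `@mlflow.trace` decorator). The dependency-free Python sketch below only illustrates what a trace captures: each step’s name, its parent step, its inputs and output, and its duration. The `trace` decorator here is a hypothetical toy, not MLflow’s implementation, and `retrieve`, `generate`, and `agent` are placeholder steps.

```python
import functools
import time
from typing import Any, Dict, List

SPANS: List[Dict[str, Any]] = []  # completed spans, in the order they finish
_STACK: List[str] = []            # names of currently open spans

def trace(fn):
    """Record name, parent, inputs, output, and duration of each call (toy tracer).

    Spans are recorded only for calls that return normally.
    """
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        parent = _STACK[-1] if _STACK else None
        _STACK.append(fn.__name__)
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        finally:
            _STACK.pop()
        SPANS.append({
            "name": fn.__name__,
            "parent": parent,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": result,
            "duration_s": time.perf_counter() - start,
        })
        return result
    return wrapper

@trace
def retrieve(question: str) -> list:
    return ["doc about refunds"]

@trace
def generate(question: str, context: list) -> str:
    return f"Answer based on {len(context)} document(s)"

@trace
def agent(question: str) -> str:
    return generate(question, retrieve(question))

agent("What is the refund window?")
for span in SPANS:
    print(span["name"], "<-", span["parent"])
# retrieve <- agent
# generate <- agent
# agent <- None
```

Recording the parent of each span is what turns a flat log into a tree, which is what lets you pinpoint which step of an agent produced a slow or low-quality result.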

Corning is a materials science company - our glass and ceramics technologies are used in many industrial and scientific applications, so understanding and acting on our data is essential. We built an AI research assistant using Databricks Mosaic AI Agent Framework to index hundreds of thousands of documents including US patent office data. Having our LLM-powered assistant respond to questions with high accuracy was extremely important to us - that way, our researchers could find and further the tasks they were working on. To implement this, we used Databricks Mosaic AI Agent Framework to build a Hi Hello Generative AI solution augmented with the U.S. patent office data. By leveraging the Databricks Data Intelligence Platform, we significantly improved retrieval speed, response quality, and accuracy. — Denis Kamotsky, Principal Software Engineer, Corning

Governing Your AI Systems

Amid the explosion of state-of-the-art foundation models, we’ve seen our customer base rapidly adopt new models: DBRX had a thousand customers experimenting with it within two weeks of launch, and we’re seeing several hundred customers experimenting with the recently released Llama 3 models. Many enterprises find it difficult to support these newer models on their platforms within a reasonable timeframe, and changes in prompt structures and querying interfaces make them difficult to integrate. Furthermore, as enterprises open access to the latest and greatest models, people get excited and build a bunch of stuff, which can quickly snowball into a mess of governance issues. Common governance issues include hitting rate limits that impact production applications, exploding costs as people run GenAI models over large tables, and data leakage as PII is sent to third-party model providers. Today, we’re excited to announce new capabilities in AI Gateway for governance and a curated model catalog to enable model discovery. Features included are:

- Mosaic AI Gateway for centralized AI governance: Mosaic AI Gateway gives customers a unified interface to easily manage, govern, evaluate, and switch models. It is built on Model Serving to enable rate limiting, permissions, and credential management for model APIs (external or internal). It also provides a single interface for querying foundation model APIs, so customers can easily swap out models in their systems and experiment rapidly to find the best model for a use case. Gateway Usage Tracking records who calls each model API, and Inference Tables capture what data was sent in and out. This allows platform teams to decide how to change rate limits, implement chargebacks, and audit for data leakage.

- Mosaic AI Guardrails: Add endpoint-level or request-level safety filtering to prevent unsafe responses, or even add PII detection filters to prevent sensitive data leakage.

- system.ai Catalog: We’ve curated a list of state-of-the-art open source models that can be managed in Unity Catalog. Easily deploy these models using Model Serving Foundation Model APIs or fine-tune them with Model Training. Customers can also find all supported models on the Mosaic AI Homepage by going to Settings > Developer > Personalized Homepage.
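To make the gateway responsibilities above concrete, the Python sketch below combines them in one toy class: a per-user token-bucket rate limit, regex-based PII redaction before a prompt leaves the gateway, per-user/per-model usage counts for chargebacks, and routing by model name so callers can swap models. Everything here (`Gateway`, `TokenBucket`, `redact_pii`, the model names, and the regex patterns) is hypothetical and does not reflect how Mosaic AI Gateway or Guardrails are actually implemented; real PII detection is far more thorough than two regexes.

```python
import re
import time
from collections import defaultdict
from typing import Callable, Dict

# Crude, illustrative PII patterns (assumption: email addresses and US SSNs only).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact_pii(text: str) -> str:
    """Replace each PII match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` requests/second."""

    def __init__(self, capacity: int, rate: float):
        self.capacity, self.rate = capacity, rate
        self.tokens = float(capacity)
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class Gateway:
    """Toy gateway: per-user rate limits, PII redaction, usage tracking, model routing."""

    def __init__(self, models: Dict[str, Callable[[str], str]],
                 capacity: int = 2, rate: float = 0.5):
        self.models = models
        self.buckets = defaultdict(lambda: TokenBucket(capacity, rate))
        self.usage = defaultdict(int)  # (user, model) -> request count, for chargebacks

    def query(self, user: str, model: str, prompt: str) -> str:
        if not self.buckets[user].allow():
            raise RuntimeError(f"rate limit exceeded for {user}")
        self.usage[(user, model)] += 1
        return self.models[model](redact_pii(prompt))

gw = Gateway({"model-a": lambda p: f"A saw: {p}", "model-b": lambda p: f"B saw: {p}"})
print(gw.query("alice", "model-a", "Email bob@example.com about SSN 123-45-6789"))
# A saw: Email [EMAIL] about SSN [US_SSN]
```

Because callers name a model rather than holding its credentials or client, swapping `"model-a"` for `"model-b"` is a one-string change, and every call still passes through the same limits, filters, and usage accounting.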

Databricks Model Serving is accelerating our AI-driven projects by making it easy to securely access and manage multiple SaaS and open models, including those hosted on or outside Databricks. Its centralized approach simplifies security and cost management, allowing our data teams to focus more on innovation and less on administrative overhead. — Greg Rokita, AVP, Technology at Edmunds.com

Databricks Mosaic AI empowers teams to build and collaborate on compound AI systems from a single platform with centralized governance and a unified interface to train, track, evaluate, swap, and deploy. By leveraging enterprise data, organizations can move from general knowledge to data intelligence, reaching more relevant insights faster.

We’re excited to see what innovations our customers build next!
