Apache Spark™ Tutorial: Getting Started with Apache Spark on Databricks

Overview

This tutorial module helps you get started quickly with Apache Spark. We cover the key concepts briefly so that you can get right down to writing your first Apache Spark job. The other tutorial modules in this guide let you go deeper into the topic of your choice.

In this tutorial module, you will learn:

- Key Apache Spark interfaces

- How to write your first Apache Spark job

- How to access preloaded Databricks datasets

We also provide sample notebooks that you can import to access and run all of the code examples included in the module.

Spark interfaces

There are three key Spark interfaces that you should know about.

Resilient Distributed Dataset (RDD)

Apache Spark’s first abstraction was the RDD. It is an interface to a sequence of data objects, of one or more types, that are distributed across a collection of machines (a cluster). RDDs can be created in a variety of ways and are the “lowest level” API available. Although the RDD is the original data structure for Apache Spark, you should focus on the DataFrame API, which offers a higher-level interface that covers most RDD use cases. The RDD API is available in the Java, Python, and Scala languages.
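As a rough illustration of the RDD API in Python, the sketch below distributes a small local list across the cluster, applies a `map`, and collects the results back to the driver. The helper name `squares_via_rdd` is hypothetical, and `spark` is assumed to be a SparkSession (such as the one a notebook provides) on a cluster that exposes `sparkContext`.

```python
def squares_via_rdd(spark, numbers):
    """Square each number using the low-level RDD API (illustrative helper)."""
    # Turn the local Python list into an RDD spread across the cluster.
    rdd = spark.sparkContext.parallelize(numbers)
    # map() only describes the computation; nothing runs yet.
    squared = rdd.map(lambda x: x * x)
    # collect() runs the job and returns the results to the driver as a list.
    return squared.collect()

# In a notebook (assumes `spark` is predefined):
# squares_via_rdd(spark, [1, 2, 3])   # [1, 4, 9]
```

The SparkSession is passed in as a parameter only so that the sketch does not depend on a global name; in a notebook the same three calls can be written directly in a cell.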

DataFrame

A DataFrame is similar in concept to the data frames you may be familiar with from the pandas Python library and the R language. The DataFrame API is available in the Java, Python, R, and Scala languages.
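To make the comparison concrete, here is a minimal sketch that builds a small DataFrame from local data and queries it by column name. The helper name `adult_names`, the column names, and the sample rows are all made up for illustration; `spark` is assumed to be a SparkSession.

```python
def adult_names(spark):
    """Build a tiny DataFrame and return the names of people aged 18 or over."""
    # Illustrative sample rows; the second argument names the columns.
    people = spark.createDataFrame(
        [("Alice", 34), ("Bob", 17), ("Cara", 52)],
        ["name", "age"],
    )
    # filter() accepts a SQL expression string; select() keeps one column.
    adults = people.filter("age >= 18").select("name")
    # collect() brings the matching rows back to the driver.
    return [row["name"] for row in adults.collect()]

# In a notebook (assumes `spark` is predefined):
# adult_names(spark)
```

Unlike the RDD API, the operations here refer to named columns, which is what allows Spark to plan and optimize the query.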

Dataset

A Dataset is a combination of DataFrame and RDD. It provides the typed interface that is available in RDDs together with the convenience of the DataFrame. The Dataset API is available in the Java and Scala languages.

In many scenarios, especially with the performance optimizations embedded in DataFrames and Datasets, it will not be necessary to work with RDDs. But it is important to understand the RDD abstraction because:

- The RDD is the underlying infrastructure that allows Spark to run so fast and provide data lineage.

- If you are diving into more advanced components of Spark, it may be necessary to use RDDs.

- The visualizations within the Spark UI reference RDDs.

When you develop Spark applications, you typically use DataFrames and Datasets.

Write your first Apache Spark job

To write your first Apache Spark job, you add code to the cells of a Databricks notebook. This example uses Python. For more information, see the Apache Spark Quick Start Guide.

This first command lists the contents of a folder in the Databricks File System:
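The command itself is not reproduced in this text. A minimal sketch of such a listing, assuming the `dbutils` utility object that Databricks notebooks provide, might look like the following. The helper name `list_folder` is hypothetical, and the folder is left as a parameter because the original path is not shown.

```python
def list_folder(dbutils, folder):
    """Return (name, size in bytes) for each entry in a DBFS folder."""
    # dbutils.fs.ls returns one entry per file or subfolder in `folder`.
    return [(info.name, info.size) for info in dbutils.fs.ls(folder)]

# In a notebook (assumes `dbutils` is predefined; substitute the folder
# you want to inspect):
# list_folder(dbutils, "/databricks-datasets")
```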

The next command uses spark, the SparkSession available in every notebook, to read the README.md text file and create a DataFrame named textFile:
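The read command is also missing from this text. A sketch under the assumption that `spark` is the notebook's SparkSession could be written as below; `read_text_file` is a hypothetical helper, and the path to README.md must be supplied by the caller because the original location is not given here.

```python
def read_text_file(spark, path):
    """Read a text file into a DataFrame with one row per line."""
    # spark.read.text yields a DataFrame with a single string column
    # named "value"; each line of the file becomes one row.
    return spark.read.text(path)

# In a notebook (assumes `spark` is predefined; replace the placeholder
# with the actual location of README.md):
# textFile = read_text_file(spark, "<folder>/README.md")
```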

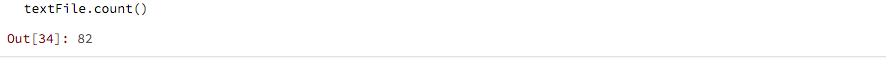

To count the lines of the text file, apply the count action to the DataFrame:
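The count command does not appear in this text either. Assuming `textFile` is the DataFrame created in the previous step, the action is a single method call; the wrapper `count_lines` below is hypothetical and exists only to keep the sketch self-contained.

```python
def count_lines(text_file):
    """Return the number of rows (lines) in a text-file DataFrame."""
    # count() is an action: it triggers the actual read of the file
    # and returns a plain Python integer.
    return text_file.count()

# In a notebook, the equivalent direct call would be:
# textFile.count()
```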

You may notice that the second command, which reads the text file, does not generate any output, while the third command, which performs the count, does. The reason is that reading the file is a transformation, whereas counting is an action. Transformations are lazy and run only when an action is run. This allows Spark to optimize for performance (for example, by running a filter before a join) instead of running commands serially as they are written. For a complete list of transformations and actions, refer to the Apache Spark Programming Guide: Transformations and Actions.
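The difference between transformations and actions can be sketched by chaining a filter onto the read before counting. The helper name `count_lines_containing` and its parameters are hypothetical; the sketch assumes `spark` is a SparkSession and relies on `spark.read.text` producing a single column named `value`.

```python
def count_lines_containing(spark, path, keyword):
    """Count the lines of a text file that contain `keyword`."""
    # Defines the DataFrame; the file contents are not processed yet.
    lines = spark.read.text(path)
    # Transformation: adds a filter to the plan, still no job runs.
    matching = lines.filter(lines["value"].contains(keyword))
    # Action: Spark now plans and executes the read and filter together.
    return matching.count()

# In a notebook (assumes `spark` is predefined; the path is a placeholder):
# count_lines_containing(spark, "<folder>/README.md", "Spark")
```

Only the final `count()` produces output; the two preceding lines merely describe the work, which is what gives Spark the opportunity to optimize the plan as a whole.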

Databricks datasets

Databricks includes a variety of datasets within the Workspace that you can use to learn Spark or test out algorithms. You’ll see these throughout the getting started guide. The datasets are available in the /databricks-datasets folder.
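One way to browse what is available is to list the top-level entries of that folder. The sketch below assumes the notebook-provided `dbutils` object and that each entry it returns exposes `name` and `isDir()`; the helper name `dataset_folders` is hypothetical.

```python
def dataset_folders(dbutils, root="/databricks-datasets"):
    """Return the sorted names of the subfolders directly under `root`."""
    # Keep only directories; loose files at the top level are skipped.
    return sorted(
        info.name for info in dbutils.fs.ls(root) if info.isDir()
    )

# In a notebook (assumes `dbutils` is predefined):
# for name in dataset_folders(dbutils):
#     print(name)
```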
