
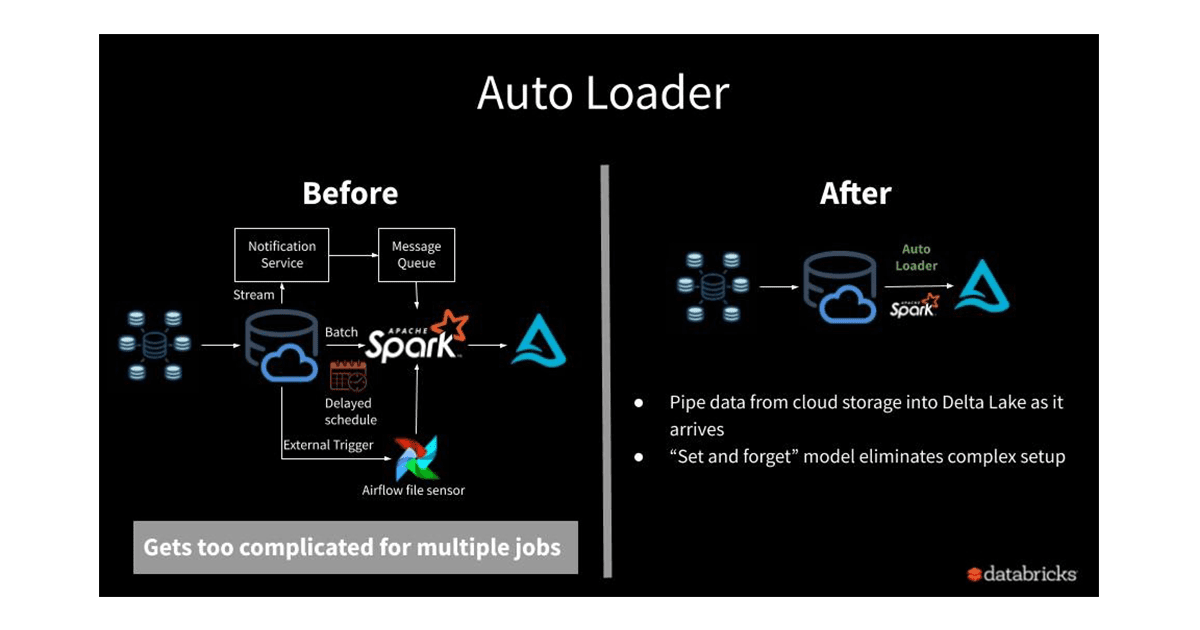



Prakash Chockalingam

Prakash Chockalingam's posts

Solutions

February 24, 2020/7 min read

Introducing Databricks Ingest: Easy and Efficient Data Ingestion from Different Sources into Delta Lake

Announcements

June 28, 2019/3 min read

Getting Data Ready for Data Science: On-Demand Webinar and Q&A Now Available
