Managing AI Security Risks: A workshop for CISOs

An overview of the security risks associated with AI systems for CISOs

Adopting AI is existentially vital for most businesses

Machine learning (ML) and generative AI (GenAI) are revolutionizing the future of work. Organizations understand that AI helps them drive innovation, maintain competitiveness, and improve employee productivity. Equally, organizations understand that their data provides a competitive advantage for their AI applications. These technologies present opportunities but also risks: embracing them without proper safeguards can result in significant loss of intellectual property and reputation.

In our conversations with customers, they frequently cite risks such as data loss, data poisoning, model theft, and compliance and regulatory challenges. Chief Information Security Officers (CISOs) are under pressure to adapt to business needs while mitigating these risks swiftly. If CISOs say no to the business, they are perceived as not being team players and not putting the enterprise first; if they say yes to something risky, they are perceived as careless. Not only do CISOs need to keep up with the business's appetite for growth, diversification, and experimentation, but they also have to keep up with the explosion of technologies promising to revolutionize their business.

In this blog post, we discuss the security risks CISOs need to know as their organization evaluates, deploys, and adopts enterprise AI applications.

Your data platform should be an expert on AI security

At Databricks, we believe data and AI are your most precious non-human assets, and that the winners in every industry will be data and AI companies. That's why security is embedded in the Databricks Data Intelligence Platform. The Databricks Platform allows your entire organization to use data and AI. It's built on a lakehouse to provide an open, unified foundation for all data and governance, and is powered by a Data Intelligence Engine that understands the uniqueness of your data.

Our Databricks Security team works with thousands of customers to securely deploy AI and machine learning on Databricks with the appropriate security features that meet their architecture requirements. We work with dozens of experts internally at Databricks and in the larger ML and GenAI community to identify security risks to AI systems and define the controls necessary to mitigate those risks. We have reviewed numerous AI and ML risk frameworks, standards, recommendations, and guidance. As a result, we have robust AI security guidelines to help CISOs, security leaders, and data teams understand how to deploy their organizations' ML and AI applications securely. However, before discussing the risks to ML and AI applications, let's walk through the constituent components of an AI system used to manage the data, build models, and serve applications.

Understanding the core components of an AI system for effective security

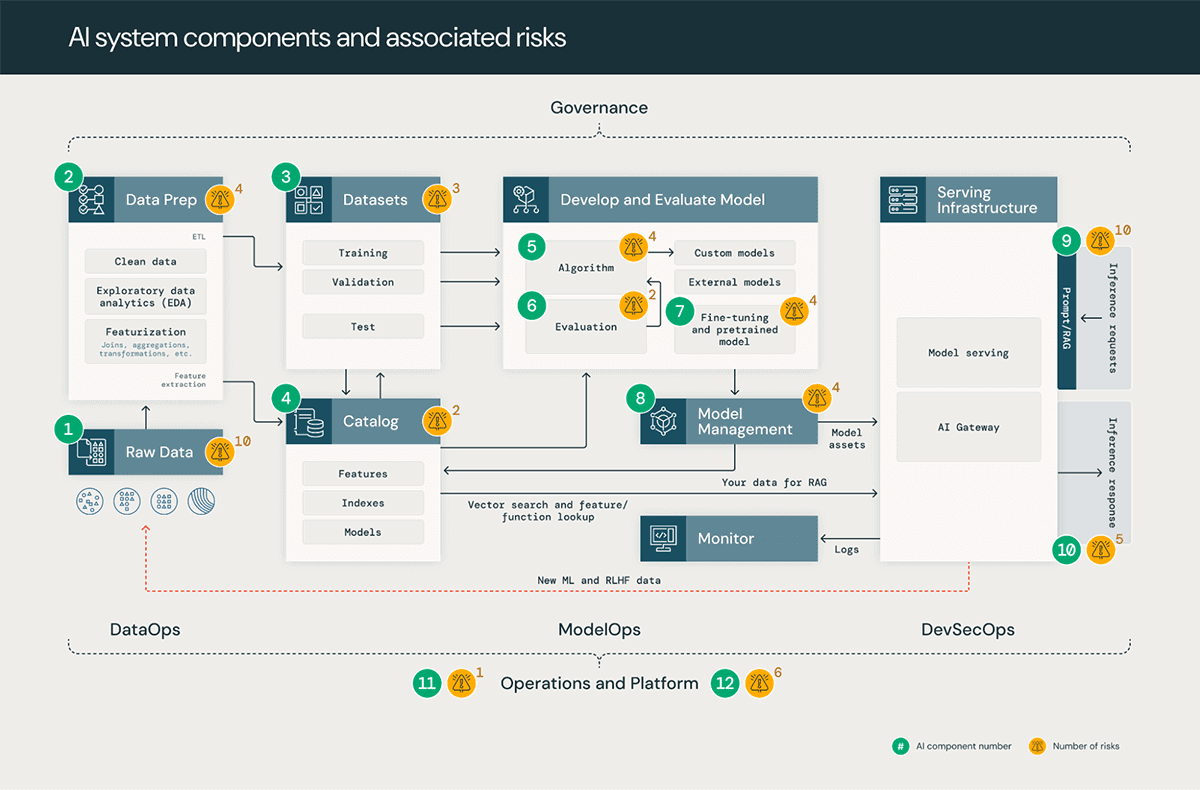

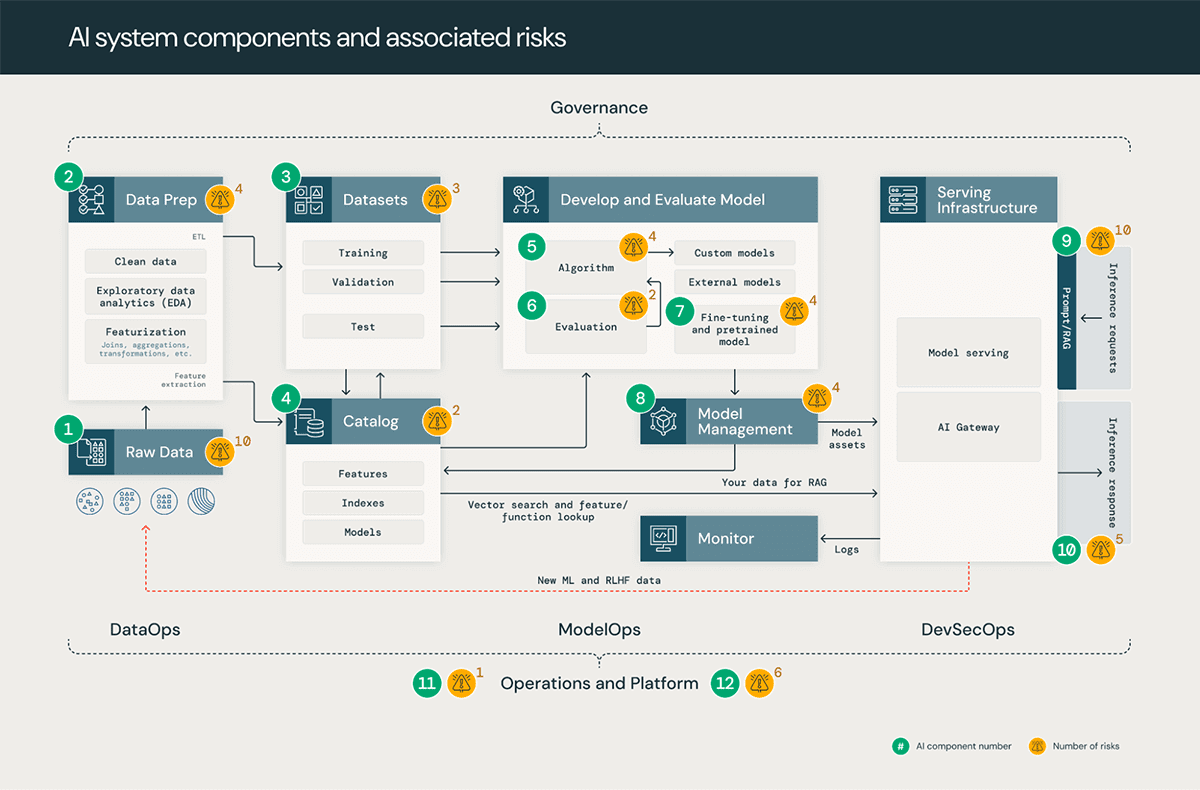

AI systems are composed of data, code, and models. A typical AI system has 12 foundational architecture components, broadly grouped into four major stages:

- Data operations consist of ingesting and transforming data and ensuring data security and governance. Good ML models depend on reliable data pipelines and secure infrastructure.

- Model operations include building custom models, acquiring models from a model marketplace, or using SaaS LLMs (such as those from OpenAI). Developing a model requires a series of experiments and a way to track and compare the conditions and results of those experiments.

- Model deployment and serving consists of securely building model images, isolating and securely serving models, automated scaling, rate limiting, and monitoring deployed models.

- Operations and platform include platform vulnerability management and patching, model isolation and controls to the system, and authorized access to models, with security built into the architecture. This stage also covers operational tooling for CI/CD, and it ensures the complete lifecycle meets the required standards by keeping development, staging, and production as distinct execution environments for secure MLOps.
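Rate limiting, listed under model deployment and serving above, is commonly implemented with a token bucket: each request spends a token, and tokens refill at a steady rate up to a burst capacity. The sketch below is a minimal, single-process illustration under our own assumptions (the `TokenBucket` class, its parameters, and the injectable clock used for testing are hypothetical); it is not a description of how any Databricks serving product enforces rate limits.

```python
import time
from typing import Callable


class TokenBucket:
    """Minimal token-bucket rate limiter (hypothetical sketch; single process, not thread-safe)."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # maximum burst size
        self.refill_per_sec = refill_per_sec  # sustained requests per second
        self.clock = clock                    # injectable so tests can control time
        self.tokens = float(capacity)         # the bucket starts full
        self.last = clock()

    def allow(self) -> bool:
        """Return True if a request may be served now, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Usage with a fake clock so the behavior is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, refill_per_sec=1.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(3)])  # prints [True, True, False]
fake_now[0] = 1.0
print(bucket.allow())  # prints True: one token refilled after one second
```

A production serving layer would typically keep one bucket per caller or API key and share state across replicas; the single in-memory bucket here only shows the core accounting.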

MLOps is a set of processes and automated steps to manage the AI system's code, data, and models. MLOps should be combined with security operations (SecOps) practices to secure the entire ML lifecycle. This includes protecting the data used to train and test models, as well as the deployed models and the infrastructure they run on, from malicious attacks.
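As one concrete example of combining MLOps with SecOps, a pipeline can refuse to train on a dataset whose files no longer match hashes recorded when the data was approved, which helps detect tampering such as data poisoning between approval and training. The sketch below is a hypothetical illustration using only Python's standard library; the manifest format and the function names `build_manifest` and `verify_manifest` are our own assumptions, not a Databricks feature.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record the hash of every file under root at approval time (hypothetical format)."""
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return files that were modified, removed, or added since the manifest was built."""
    current = build_manifest(root)
    changed_or_removed = [name for name, h in manifest.items() if current.get(name) != h]
    added = [name for name in current if name not in manifest]
    return sorted(changed_or_removed + added)


# Usage: approve a dataset, tamper with it, then detect the change before training.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("x,y\n1,0\n")
    approved = build_manifest(root)
    (root / "train.csv").write_text("x,y\n1,1\n")  # simulate a flipped label
    print(verify_manifest(root, approved))  # prints ['train.csv']
```

Hash checks only prove the data is unchanged since approval; they do not show the approved data was clean in the first place, so they complement rather than replace data validation and access controls.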

Top security risks of AI systems

Technical Security Risks

In our analysis of AI and ML systems, we identified 55 technical security risks across the 12 components. In the table below, we outline these basic components that align with steps in any AI and ML system and highlight the types of security risks our team identified:

| System stage | System components (Figure 1) | Potential security risks |
|---|---|---|
| Data operations | | 19 specific risks |
| Model operations | | 14 specific risks |
| Model deployment and serving | | 15 specific risks |
| Operations and platform | | 7 specific risks |
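The per-stage counts in the table add up to the 55 technical risks mentioned above. A trivial check, with the counts copied from the table:

```python
# Risk counts per system stage, copied from the table above.
risks_per_stage = {
    "Data operations": 19,
    "Model operations": 14,
    "Model deployment and serving": 15,
    "Operations and platform": 7,
}

total = sum(risks_per_stage.values())
print(total)  # prints 55
```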

Databricks has mitigation controls for all of the risks outlined above. In our Databricks AI Security Framework, we walk through the complete list of risks along with our guidance on the associated controls and out-of-the-box capabilities, such as Databricks Delta Lake, Databricks Managed MLflow, Unity Catalog, and Model Serving, that you can use to mitigate them.

Organizational Security Risks

In addition to the technical risks, our discussions with CISOs have highlighted the need to address four organizational risk areas to ease the path to AI and ML adoption. These are key to aligning the security function with the needs, pace, outcomes, and risk appetite of the business it serves.

- Talent: Security organizations can lag behind the rest of the organization in adopting new technologies. CISOs need to understand which roles in their talent pool should upskill in AI and how to enforce MLSecOps across departments.

- Operating model: Identifying the key stakeholders, champions, and enterprise-wide processes to securely deploy new AI use cases is critical to assessing risk levels and finding the proper path to deploying applications.

- Change management: Identifying obstacles to change, communicating expectations, and overcoming inertia with an organizational change management framework such as ADKAR helps organizations adopt new processes with minimal disruption.

- Decision support: Tying technical work to business outcomes, so the organization can decide which technical efforts or business use cases to prioritize, is key to arriving at a single set of organization-wide priorities.

Mitigate AI security risks effectively with our CISO-driven workshop

CISOs are instinctive risk assessors. However, this superpower fails most CISOs when it comes to AI. The primary reason is that CISOs don't have a simple mental model of an AI system that they can readily visualize to synthesize assets, threats, impacts, and controls.

To help you with this, the Databricks Security team has designed an AI security workshop for CISOs and security and data leaders. These workshops are built around our Databricks AI Security Framework, offering an interactive experience that facilitates structured discussions on security threats and mitigations. They are designed to be accessible, requiring no deep expertise in machine learning concepts.

As a sneak peek, here's the top-line approach we recommend for managing the technical security risks of ML and AI applications at scale:

- Identify the ML Business Use Case: Make sure the use case you are trying to secure is well defined and has identified stakeholders, whether it is already implemented or still in planning.

- Determine ML Deployment Model: Identify the deployment model (e.g., custom, SaaS LLM, RAG, or fine-tuned model) to determine how responsibility for securing each of the 12 AI system components is shared between your organization and any partners involved.

- Select Most Pertinent Risks: From our documented list of 55 risks, pinpoint those most relevant to your organization based on the deployment model determined in the previous step.

- Enumerate Threats for Each Risk: Identify the specific threats linked to each risk and the ML/GenAI component each threat targets.

- Choose and Implement Controls: Select controls that align with your organization's risk appetite. These controls are defined generically for compatibility with any data platform. Our framework also provides guidelines on tailoring these controls specifically for the Databricks environment.

- Operationalize Controls: Determine control owners; you may inherit many controls from your data platform provider. Ensure controls are implemented, monitored for effectiveness, and regularly reviewed. Adjustments may be needed as business use cases, deployment models, or the threat landscape evolve.
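These six steps can be captured in a lightweight risk register so that each selected risk carries its threats, targeted components, controls, and owners. The sketch below is hypothetical: the class and field names, the example risks, and the component numbers are placeholders of our own, not entries from the Databricks AI Security Framework, and "pertinent" is simplified to "touches a component your organization secures".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Control:
    name: str
    owner: Optional[str] = None            # step 6: accountable owner, if any
    inherited_from_platform: bool = False  # step 6: operated by the platform provider


@dataclass
class Risk:
    name: str
    components: set[int]                   # placeholder component numbers (1-12) the threats target
    threats: list[str]                     # step 4: specific threats for this risk
    controls: list[Control] = field(default_factory=list)  # step 5: selected controls


@dataclass
class UseCase:
    name: str                              # step 1: the business use case
    deployment_model: str                  # step 2: e.g. "RAG" or "SaaS LLM"
    customer_components: set[int]          # step 2: components your organization must secure
    risks: list[Risk] = field(default_factory=list)

    def select_pertinent(self, catalog: list[Risk]) -> None:
        """Step 3 (simplified): keep risks that touch a component your organization secures."""
        self.risks = [r for r in catalog if r.components & self.customer_components]

    def gaps(self) -> list[str]:
        """Step 6: flag risks without controls and non-inherited controls without owners."""
        problems = []
        for risk in self.risks:
            if not risk.controls:
                problems.append(f"{risk.name}: no control selected")
            for control in risk.controls:
                if control.owner is None and not control.inherited_from_platform:
                    problems.append(f"{risk.name}: '{control.name}' has no owner")
        return problems


# Usage with hypothetical catalog entries.
catalog = [
    Risk("Training data poisoning", {3}, ["tampered labels in a training dataset"],
         [Control("Dataset hash verification")]),
    Risk("Prompt injection", {9}, ["malicious instructions in user input"]),
    Risk("Platform vulnerability", {12}, ["unpatched host"],
         [Control("Patch management", inherited_from_platform=True)]),
]
chatbot = UseCase("Support chatbot", "RAG", customer_components={3, 9})
chatbot.select_pertinent(catalog)
for gap in chatbot.gaps():
    print(gap)
```

Keeping the shared-responsibility split (step 2) as data makes it easy to see which gaps your organization must close and which controls are inherited from the platform.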

Learn more and connect with our team

If you're interested in participating in one of our upcoming AI Security workshops - or hosting one for your organization - contact [email protected].

If you are curious about how Databricks approaches security, please visit our Security and Trust Center.
