Build and deploy high-quality AI agent systems

Securely connect your data with any AI model to create accurate, domain-specific applications.

TOP TEAMS SUCCEED WITH MOSAIC AI

The only unified platform for agent systems

Stop relying on generic AI models. Databricks has the tools to build agent systems that deliver accurate, data-driven results.

44% improvement in accuracy

$10M in productivity gains

96% accuracy of responses

Tools for end-to-end AI agent systems

Agent Bricks

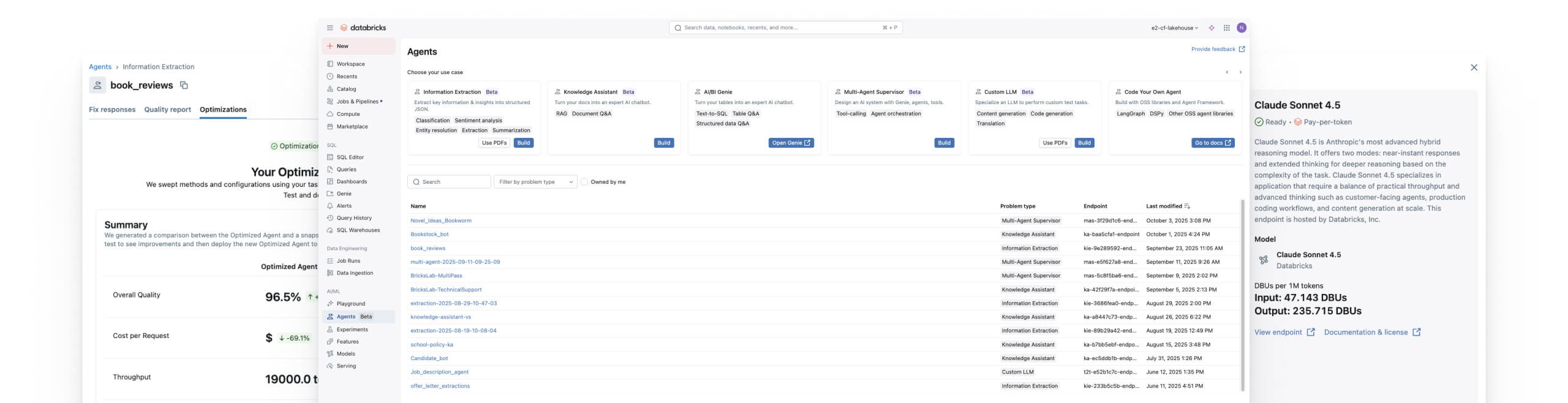

Build AI agents grounded in your enterprise data. Databricks Agent Bricks lets you optimize quality and cost with synthetic data, custom evaluation, and automated tuning.

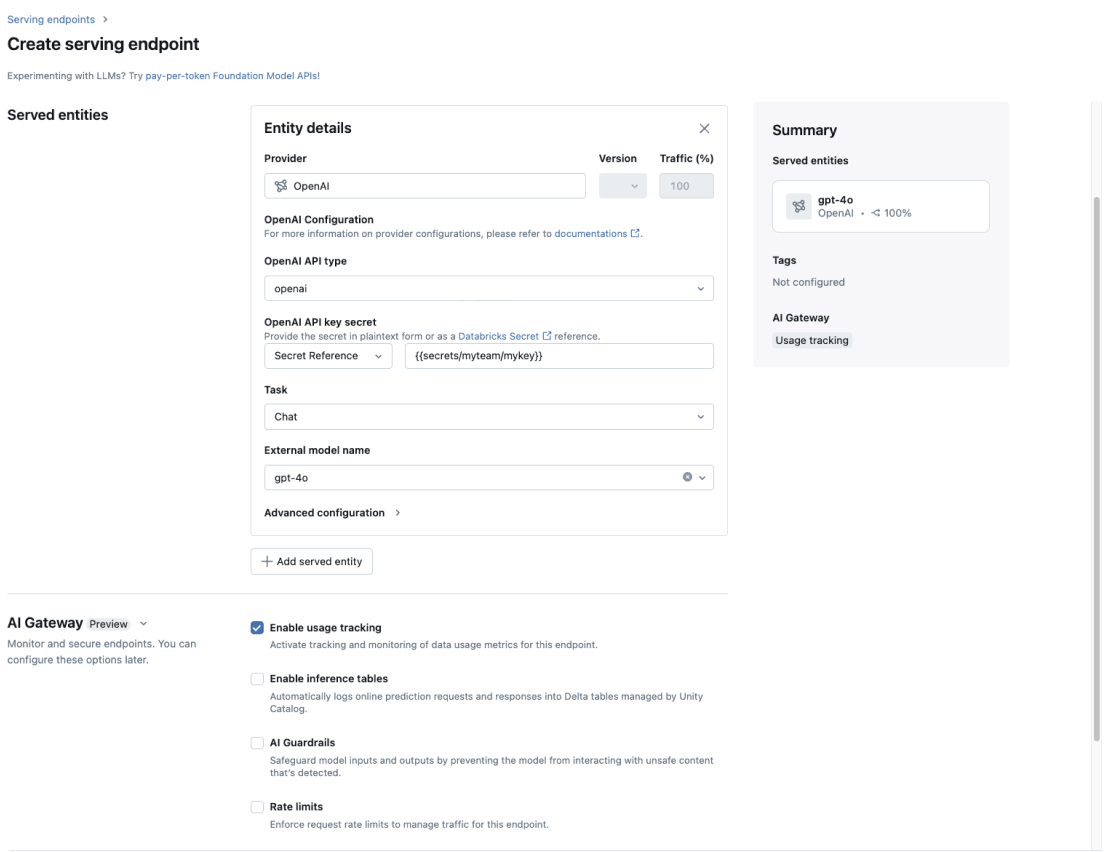

AI Gateway

Consistently apply data governance across every GenAI model in your enterprise.

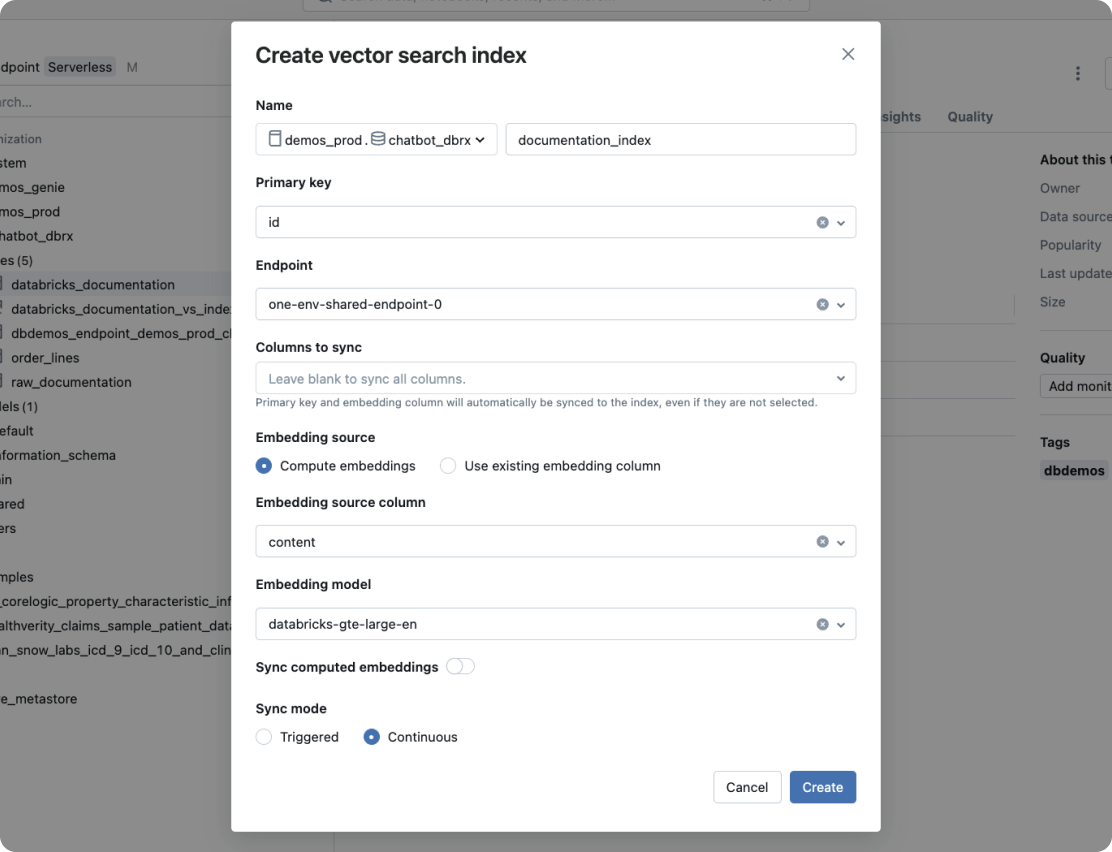

Vector Search

A high-performance vector database that syncs with source data in real time.

Agent Framework and Evaluation

Build production-quality AI agents with Agent Framework. Agent Evaluation, an integral feature of the framework, measures agent output quality with AI-assisted assessments and offers an intuitive UI for collecting feedback from human stakeholders.

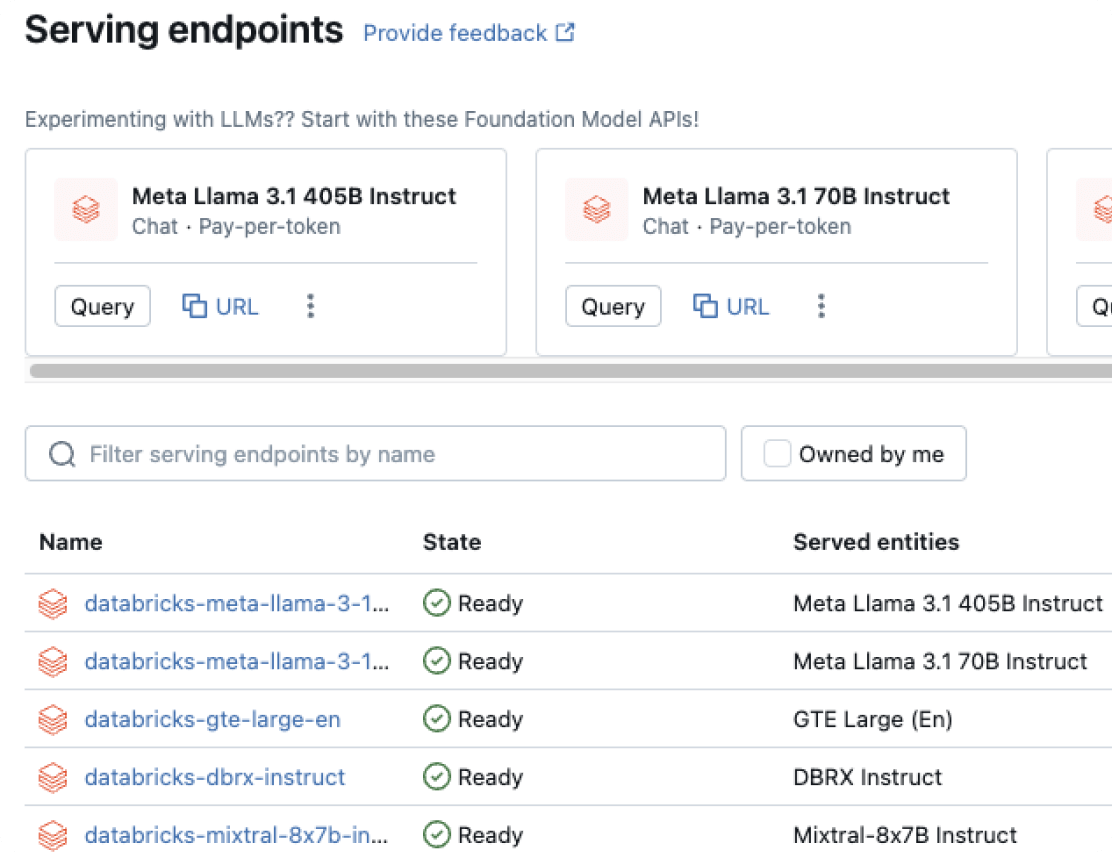

Model Serving

Unified deployment for agents, GenAI and classical ML models.

Model Training

Fine-tune open-source LLMs, pretrain custom LLMs, or build classical ML models.

Databricks Notebooks

Boost team productivity with Databricks Collaborative Notebooks, enabling real-time collaboration and streamlined data science workflows.

Managed MLflow

Managed MLflow extends open-source MLflow, a unified MLOps platform for building better models and generative AI apps, with enterprise-grade reliability, security, and scalability.

Data Quality Monitoring

Simple, scalable monitoring that detects anomalies, tracks freshness, and delivers consistent quality signals across your AI assets.

The Databricks Data Intelligence Platform

Explore the full range of tools available on the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.

Build high-quality agent systems

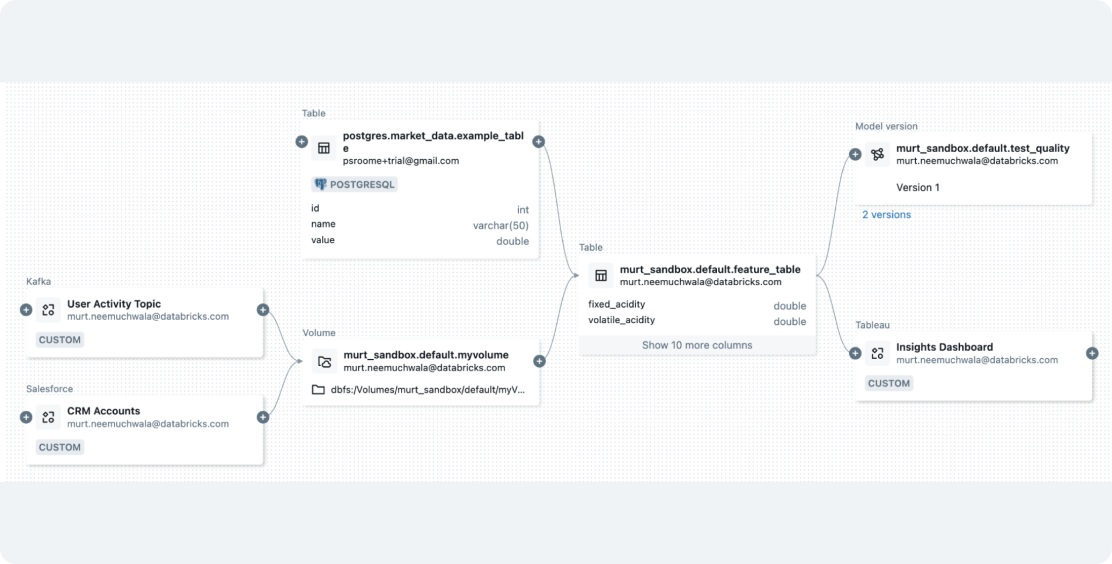

Transform your data effortlessly

Prepare data with seamless integration for GenAI and ML workflows

Databricks lets you ingest any data type and orchestrate jobs that prepare it for your GenAI or ML applications. With built-in governance, it simplifies data featurization and uses Mosaic AI Vector Search to create vector indexes for retrieval-augmented generation (RAG). Unifying data and model pipelines in one place streamlines workflows and cuts costs.
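To make the RAG step more concrete, here is a minimal, self-contained Python sketch of what a vector index does at retrieval time: store an embedding per source row, keep it current as rows change, and return the rows most similar to a query so they can be placed in a prompt. This is not the Mosaic AI Vector Search API. `embed`, `ToyVectorIndex`, and `build_prompt` are hypothetical names, and the bag-of-words `embed` is a toy stand-in for a real embedding model.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorIndex:
    """Hypothetical in-memory index mapping doc_id -> (text, vector)."""

    def __init__(self) -> None:
        self._rows: dict[str, tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Re-embed on every write so the index mirrors the latest source row.
        self._rows[doc_id] = (text, embed(text))

    def delete(self, doc_id: str) -> None:
        # Drop rows that were removed from the source data.
        self._rows.pop(doc_id, None)

    def search(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        # Brute-force scan: score every row, then keep the k most similar.
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, (text, vec) in self._rows.items()]
        scored.sort(key=lambda row: row[0], reverse=True)
        return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(question: str, index: ToyVectorIndex, k: int = 2) -> str:
    """Assemble a RAG prompt from the top-k retrieved rows."""
    context = "\n".join(text for _, text in index.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

A production index would use learned embeddings and an approximate nearest-neighbor structure instead of scanning every row; the linear scan only keeps the sketch short.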

Ready to become a data + AI company?

Take the first steps in your transformation