Security best practices for the Databricks Data Intelligence Platform

A defense-in-depth approach to stay ahead in data and AI security

At Databricks, we know that data is one of your most valuable assets. Our product and security teams work together to deliver an enterprise-grade Data Intelligence Platform that enables you to defend against security risks and meet your compliance obligations. Over the past year, we are proud to have delivered new capabilities and resources such as securing data access with Azure Private Link for Databricks SQL Serverless, keeping data private with Azure firewall support for Workspace storage, protecting data in-use with Azure confidential computing, achieving FedRAMP High Agency ATO on AWS GovCloud, publishing the Databricks AI Security Framework, and sharing details on our approach to Responsible AI.

According to the 2024 Verizon Data Breach Investigations Report, the number of data breaches has increased by 30% since last year. We believe it is crucial for you to understand and correctly use our security features, and to adopt recommended security best practices, to mitigate data breach risks effectively.

In this blog post, we'll explain how you can use some of our platform's top controls and recently released security features to establish a robust defense-in-depth posture that protects your data and AI assets. We will also provide an overview of our security best practices resources to help you get up and running quickly.

Protect your data and AI workloads across the Databricks Data Intelligence Platform

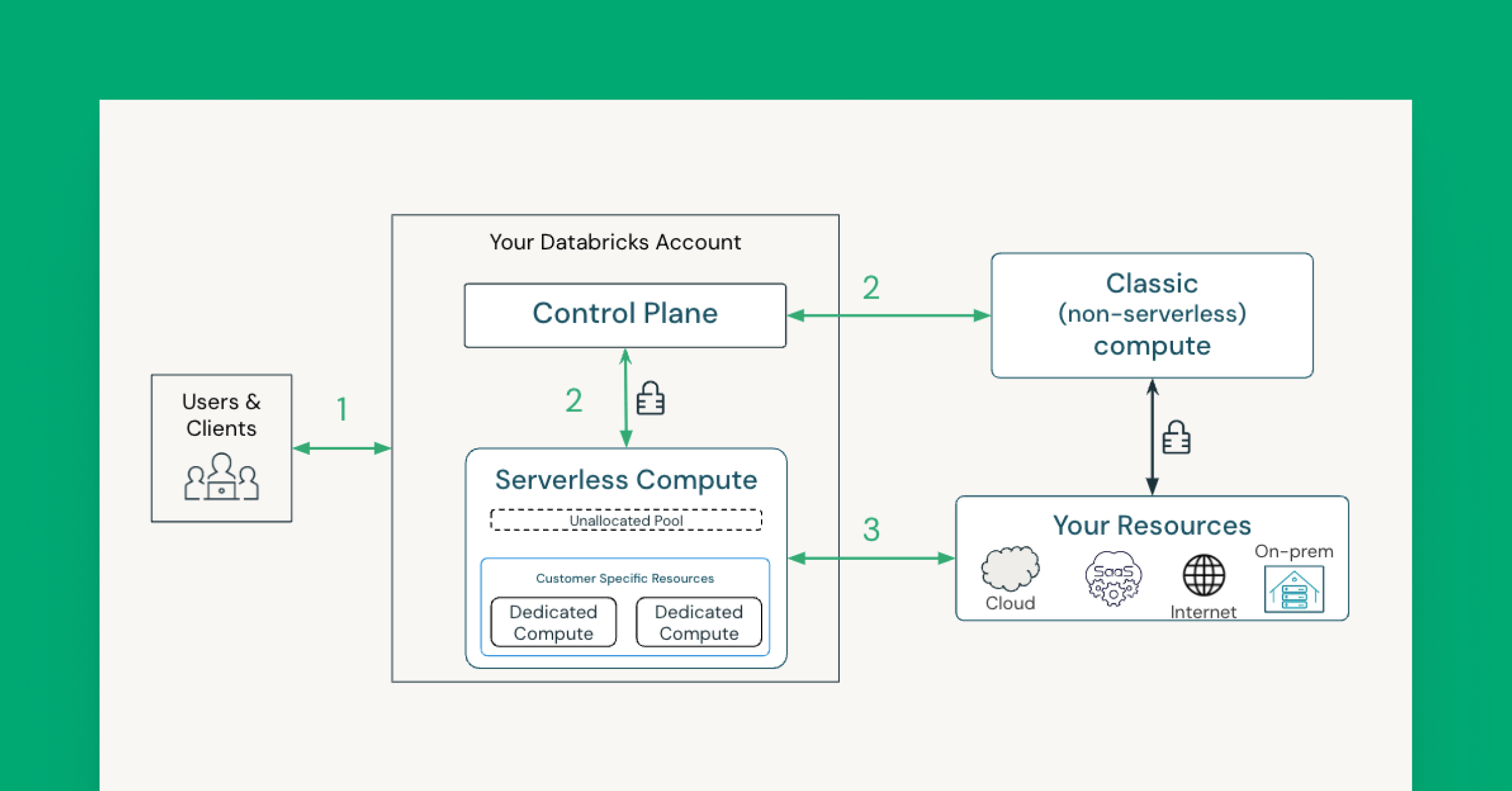

The Databricks Platform provides security guardrails to defend against account takeover and data exfiltration risks at each access point. The architecture diagram shows a typical lakehouse on Databricks with three surfaces to secure:

- Your clients (users and applications) connecting to Databricks

- Your workloads connecting to Databricks services (APIs)

- Your data being accessed from your Databricks workloads

Let’s now take a high-level look at some of the top controls, whether enabled by default or available for you to turn on, and at new security capabilities for each connection point. Our full list of recommendations based on different threat models can be found in our security best practices guides.

Connecting users and applications into Databricks (1)

To protect against access-related risks, you should use multiple factors to authenticate and authorize users and applications that connect to Databricks. Relying on passwords alone is inadequate because they are susceptible to theft and phishing and often suffer from weak user management. In fact, as of July 10, 2024, Databricks-managed passwords have reached end of life and are no longer supported for UI or API authentication. Beyond this stronger default, we advise you to implement the controls below:

- Authenticate all user access via single sign-on (SSO) at the account level (AWS; SSO is automatically enabled on Azure/GCP)

- Leverage multi-factor authentication (MFA) offered by your identity provider (IdP) to verify all users and applications that access Databricks (AWS, Azure, GCP)

- Enable unified login for all workspaces using a single account-level SSO configuration, and configure emergency access with MFA for streamlined and secure access management (AWS; Databricks integrates with built-in identity providers on Azure/GCP)

- Use front-end private link on workspaces to restrict access to trusted private networks (AWS, Azure, GCP)

- Configure IP access lists on workspaces and for your account to only allow access from trusted network locations, such as your corporate network (AWS, Azure, GCP)
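To make the allow/block semantics of IP access lists concrete, here is a minimal Python sketch of how a service could evaluate a client address against CIDR-based lists using the standard `ipaddress` module. It is not Databricks' implementation: the list contents are hypothetical example ranges, and the rule that block entries take precedence over allow entries is an assumption of this sketch. In Databricks itself, you manage IP access lists through the UI or API rather than in your own code.

```python
import ipaddress

# Hypothetical example ranges (documentation-reserved blocks); use your own.
ALLOW_LIST = ["203.0.113.0/24", "198.51.100.10/32"]
BLOCK_LIST = ["203.0.113.128/25"]


def _matches(address, cidrs):
    """Return True if the address falls inside any of the CIDR ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in ipaddress.ip_network(cidr) for cidr in cidrs)


def is_access_allowed(client_ip, allow_list, block_list):
    """Decide whether a client IP may connect.

    Assumptions of this sketch: block entries take precedence over allow
    entries, and when an allow list is defined, anything outside it is denied.
    """
    if _matches(client_ip, block_list):
        return False
    if allow_list:
        return _matches(client_ip, allow_list)
    return True  # no allow list configured: only the block list applies
```

For example, `is_access_allowed("203.0.113.200", ALLOW_LIST, BLOCK_LIST)` returns `False` because that address falls in the blocked `/25`, even though the broader `/24` is allowed.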

Connecting your workloads to Databricks services (2)

To prevent workload impersonation, Databricks authenticates workloads with multiple credentials during the lifecycle of the cluster. Our recommendations and available controls depend on your deployment architecture. At a high level:

- For Classic clusters that run on your network, we recommend configuring a back-end private link between the compute plane and the control plane. Configuring the back-end private link ensures that your cluster can only be authenticated over that dedicated and private channel.

- For Serverless, Databricks automatically provides a defense-in-depth security posture on our platform using a combination of application-level credentials, mTLS client certificates and private links to mitigate workspace impersonation risks.
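Databricks sets up these serverless protections automatically, so there is nothing for you to configure here. Purely to illustrate what requiring mTLS client certificates means, the following is a generic sketch using Python's standard `ssl` module: a server-side TLS context that refuses any client that does not present a certificate issued by a trusted CA. The function name and file arguments are hypothetical, and this is not how Databricks implements its control plane.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Create a TLS server context that rejects clients without a valid certificate.

    The file arguments are hypothetical placeholders: in a real deployment they
    point to the server's certificate/key and to the CA that issues client certs.
    """
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mutual TLS: the handshake fails unless the client presents a certificate
    # that chains to a CA loaded into this context.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        context.load_verify_locations(cafile=ca_file)
    return context
```

Because `verify_mode` is `CERT_REQUIRED`, a caller that holds only an API token cannot complete the TLS handshake; it must also hold the private key for a trusted certificate, which is what makes impersonation harder.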

Connecting from Databricks to your storage and data sources (3)

To ensure that data can only be accessed by the right user and workload on the right Workspace, and that workloads can only write to authorized storage locations, we recommend leveraging the following features:

- Use Unity Catalog to govern access to data: Unity Catalog provides several layers of protection, including fine-grained access controls and time-bound, down-scoped credentials that are only accessible to trusted code by default.

- Leverage Mosaic AI Gateway: Now in Public Preview, Mosaic AI Gateway allows you to monitor and control the usage of both external models and models hosted on Databricks across your enterprise.

- Configure access from authorized networks: You can configure access policies using S3 bucket policies on AWS, the storage firewall on Azure, and VPC Service Controls on GCP.

- With Classic clusters, you can lock down access to your network via the above-listed controls.

- With Serverless, you can lock down access to the Serverless network (AWS, Azure) or to a dedicated private endpoint on Azure. On Azure, you can now enable the storage firewall for your Workspace storage (DBFS root) account.

- Resources external to Databricks, such as external models or storage accounts, can be configured with dedicated and private connectivity. Here is a deployment guide for accessing Azure OpenAI, one of our most requested scenarios.

- Configure egress controls to prevent access to unauthorized storage locations: With Classic clusters, you can configure egress controls on your network. With SQL Serverless, Databricks does not allow internet access from untrusted code such as Python UDFs. To learn how we're enhancing egress controls as you adopt more Serverless products, please fill out this form to join our previews.
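The idea behind a time-bound, down-scoped credential can be shown with a small Python sketch: the issuer signs a token that is valid only for one storage path prefix, a fixed set of operations, and a limited lifetime, and every access is checked against all three. This is not Unity Catalog's credential format or API; the HMAC-signed token, the `SIGNING_KEY`, and both function names are hypothetical and chosen only to make the concept concrete.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical secret held only by the credential issuer.
SIGNING_KEY = b"hypothetical-signing-key"


def issue_credential(path_prefix, operations, ttl_seconds, now=None):
    """Issue a signed token scoped to one path prefix, some operations, and a TTL."""
    issued_at = time.time() if now is None else now
    claims = {
        "prefix": path_prefix,
        "ops": sorted(operations),
        "exp": issued_at + ttl_seconds,
    }
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def is_authorized(token, path, operation, now=None):
    """Check the token's signature, expiry, path scope, and operation."""
    payload, _, signature = token.rpartition(".")
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False  # tampered or forged token
    claims = json.loads(base64.urlsafe_b64decode(payload.encode()))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        return False  # credential has expired
    return path.startswith(claims["prefix"]) and operation in claims["ops"]
```

A token issued for `READ` on `s3://example-bucket/finance/` with a 15-minute TTL cannot be reused to write, to read another prefix, or to read after it expires, which limits the damage if it leaks.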

The diagram below outlines how you can configure a private and secure environment for processing your data as you adopt Databricks Serverless products. As described above, multiple layers of protection secure all access to and from this environment.

Define, deploy and monitor your data and AI workloads with industry-leading security best practices

Now that we have outlined a set of key controls available to you, you are probably wondering how you can quickly operationalize them for your business. Our Databricks Security team recommends taking a “define, deploy, and monitor” approach using the resources they have developed from their experience working with hundreds of customers.

- Define: You should configure your Databricks environment by reviewing our best practices along with the risks specific to your organization. We've crafted comprehensive best practice guides for Databricks deployments on all three major clouds. These documents offer a checklist of security practices, threat models, and patterns distilled from our enterprise engagements.

- Deploy: Terraform templates make deploying secure Databricks workspaces easy. You can programmatically deploy workspaces and the required cloud infrastructure using the official Databricks Terraform provider. These unified Terraform templates are preconfigured with hardened security settings similar to those used by our most security-conscious customers. See our GitHub repository to get started on AWS, Azure, and GCP.

- Monitor: The Security Analysis Tool (SAT) can be used to monitor adherence to security best practices in Databricks workspaces on an ongoing basis. We recently upgraded the SAT to streamline setup and enhance checks, aligning them with the Databricks AI Security Framework (DASF) for improved coverage of AI security risks.
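The "monitor" step boils down to repeatedly comparing actual settings against expected ones and reporting drift. The minimal Python sketch below illustrates that idea only; the configuration dictionary, its keys, and the check names are hypothetical and loosely modeled on the controls discussed in this post, and they are not the Security Analysis Tool's actual checks or data model.

```python
from dataclasses import dataclass


@dataclass
class Check:
    """One security best-practice check against a workspace configuration."""
    name: str
    setting: str      # key in the hypothetical configuration dictionary
    expected: object  # value that counts as compliant


# Hypothetical checks loosely modeled on the controls discussed in this post.
CHECKS = [
    Check("Account-level SSO enabled", "sso_enabled", True),
    Check("IP access lists enabled", "ip_access_lists_enabled", True),
    Check("Front-end private link configured", "frontend_private_link", True),
    Check("Back-end private link configured", "backend_private_link", True),
]


def run_checks(config, checks=CHECKS):
    """Return the names of checks the configuration fails.

    Missing settings count as failures so that gaps are surfaced, not ignored.
    """
    return [c.name for c in checks if config.get(c.setting) != c.expected]
```

Treating a missing setting as a failure is a deliberate choice here: a monitor that silently skips unknown settings would report a clean bill of health for a workspace nobody has reviewed.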

Stay ahead in data and AI security

The Databricks Data Intelligence Platform provides an enterprise-grade defense-in-depth approach for protecting data and AI assets. For recommendations on mitigating security risks, please refer to our security best practices guides for your chosen cloud(s). For a summarized checklist of controls related to unauthorized access, please refer to this document.

We continuously enhance our platform based on your feedback, evolving industry standards, and emerging security threats to better meet your needs and stay ahead of potential risks. To stay informed, bookmark our Security and Trust blog, head over to our YouTube channel, and visit the Databricks Security and Trust Center.
